I’m accumulating a lot of notes about these topics. Some of this is outdated. Don’t take anything here too seriously.

My Blog Posts

Resources

-

Geohot’s tinygrad

-

Comprehensive book on deep learning using Apache MXNet.

-

Need regularized images for a classifier training set? Check out my Simple Tool For Creating Training Image Sets.

-

TensorFlow’s Playground - A brilliant interactive neural network for educational purposes. Superb.

-

YOLO Real-time object detection.

-

SSD Single Shot MultiBox Detector object detection.

-

Datasets

-

Deconvolutional Image Segmentation - Image is convolved into classification and then deconvolves back to classify each pixel. All done in one DNN.

-

Deep Reinforcement Learning Bootcamp Lectures

-

Here’s a nice low-level numerical demonstration of backpropagation.

-

Superb clear and thorough educational material on neural networks.

-

Jason Brownlee has some great resources on his website. Some particular ones I liked.

-

Gradient Descent and a practical example thereof.

-

Although Andrej Karpathy seemed to have given up before achieving a full textbook, this excellent lesson on the core of backpropagation is worth reading.

-

Neural Network Feature Visualization - A pretty good and thorough overview of research to peek in the black box.

-

El Reg has a surprisingly educational intro to neural nets. Pretty traditional stuff.

-

A good introduction to Capsule Networks.

-

Measuring the tendency of CNNs to learn surface statistical regularities (as opposed to what you really want it to understand).

-

Intro to GANS. Also their applications. Original GAN paper.

Semantic Segmentation

-

DeepLab-v3+ Google’s semantic segmentation architecture 2018.

-

TensorFlow’s guide to semantic segmentation.

-

BiSeNet - Bilateral Segmentation Network paper.

-

Long list of resources related to semantic segmentation.

-

Goog’s cloud stuff.

-

Overview of most of the quality metrics for semantic segmentation. The important one they miss is ROC/AUC.

-

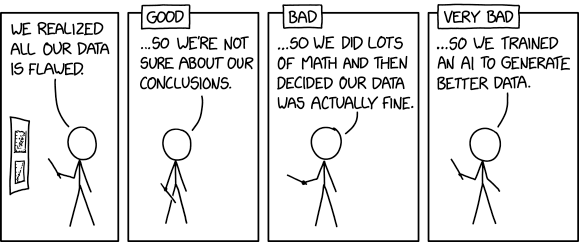

Good/bad/not-good/not-bad metrics

Frameworks and Libraries

OpenAI API Usage

First you need to get their magic libraries. It seems to install with no sudo or hassle.

pip install openai

Here’s a command line test to see if your keys are in order.

wget -O- --header="Authorization: Bearer $OPENAI_API_KEY" \
     --header="OpenAI-Organization: $OPENAI_ORG_ID" \
     "https://api.openai.com/v1/models"

Note that the API key you’ll get will be a 48-character gibberish string. However, that won’t work without prefixing it with sk- (just like org- prefixes the organization ID).

Taken from this interesting course by Andrew Ng.

import openai # Uses the pre-1.0 openai package interface (openai.ChatCompletion).
import os
from dotenv import load_dotenv, find_dotenv # apt install python3-dotenv

_ = load_dotenv(find_dotenv()) # read local .env file
openai.api_key = os.getenv('OPENAI_API_KEY')

def get_completion(prompt, model="gpt-3.5-turbo",temperature=0):
    messages = [{"role": "user", "content": prompt}]
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        temperature=temperature,
    )
    return response.choices[0].message["content"]

mytopic = 'Computer Nerdery'
mytext = "It's nice that we can use backticks like this because AsciiDoc doesn't care about them."
prompt = f"""Create an illustrative example of "{mytopic}" using some input text.
Input text ```{mytext}```"""
response = get_completion(prompt)
print(response)

Some interesting strategies for "meta-content" to guide the response without being the response.

def get_completion_from_messages(messages, model="gpt-3.5-turbo", temperature=0):
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        temperature=temperature, # this is the degree of randomness of the model's output
    )
    # print(str(response.choices[0].message))
    return response.choices[0].message["content"]

messages = [
    {'role':'system', 'content':'You are friendly chatbot.'},
    {'role':'user', 'content':'Hi, my name is Isa'},
    {'role':'assistant', 'content': "Hi Isa! It's nice to meet you. \
Is there anything I can help you with today?"},
    {'role':'user', 'content':'Yes, you can remind me, What is my name?'} ]
response = get_completion_from_messages(messages, temperature=1)
print(response)

Here’s a custom chatbot example that takes orders for a pizza restaurant.

def collect_messages(_):
    prompt = inp.value_input
    inp.value = ''
    context.append({'role':'user', 'content':f"{prompt}"})
    response = get_completion_from_messages(context)
    context.append({'role':'assistant', 'content':f"{response}"})
    panels.append(
        pn.Row('User:', pn.pane.Markdown(prompt, width=600)))
    panels.append(
        pn.Row('Assistant:', pn.pane.Markdown(response, width=600, style={'background-color': '#F6F6F6'})))
    return pn.Column(*panels)

import panel as pn # GUI

pn.extension()

panels = [] # collect display

context = [ {'role':'system', 'content':"""

You are OrderBot, an automated service to collect orders for a pizza restaurant. \

You first greet the customer, then collects the order, \

and then asks if it's a pickup or delivery. \

You wait to collect the entire order, then summarize it and check for a final \

time if the customer wants to add anything else. \

If it's a delivery, you ask for an address. \

Finally you collect the payment.\

Make sure to clarify all options, extras and sizes to uniquely \

identify the item from the menu.\

You respond in a short, very conversational friendly style. \

The menu includes \

pepperoni pizza 12.95, 10.00, 7.00 \

cheese pizza 10.95, 9.25, 6.50 \

eggplant pizza 11.95, 9.75, 6.75 \

fries 4.50, 3.50 \

greek salad 7.25 \

Toppings: \

extra cheese 2.00, \

mushrooms 1.50 \

sausage 3.00 \

canadian bacon 3.50 \

AI sauce 1.50 \

peppers 1.00 \

Drinks: \

coke 3.00, 2.00, 1.00 \

sprite 3.00, 2.00, 1.00 \

bottled water 5.00 \

"""} ] # accumulate messages

inp = pn.widgets.TextInput(value="Hi", placeholder='Enter text here…')

button_conversation = pn.widgets.Button(name="Chat!")

interactive_conversation = pn.bind(collect_messages, button_conversation)

dashboard = pn.Column(

inp,

pn.Row(button_conversation),

pn.panel(interactive_conversation, loading_indicator=True, height=300),

)

dashboard

messages = context.copy()
messages.append(
    {'role':'system', 'content':'create a json summary of the previous food order. Itemize the price for each item\
 The fields should be 1) pizza, include size 2) list of toppings 3) list of drinks, include size 4) list of sides include size 5)total price '},
)
#The fields should be 1) pizza, price 2) list of toppings 3) list of drinks, include size include price 4) list of sides include size include price, 5)total price '},
response = get_completion_from_messages(messages, temperature=0)
print(response)

Gaussian Naive Bayes Classifier

Using: scikit-learn

The following is handy for building a classifier model from a dataset.

Data is features_train and labels are labels_train for the

training data. Once the model is built, new classifications can be

calculated with new data.

>>> import numpy as np

>>> features_train= np.array([[-1,-1], [-2,-1],[-3,-2],[1,1],[2,1],[3,2]])

>>> labels_train= np.array([1,1,1,2,2,2])

>>> from sklearn.naive_bayes import GaussianNB

>>> classifier= GaussianNB()

>>> classifier.fit(features_train,labels_train)

GaussianNB(priors=None)

>>> classifier.predict([[-.8,-1]])

array([1])

>>> classifier.predict([[5,4]])

array([2])

>>> classifier.predict([[0,0]])

array([1])

And to find out how well a classifier is doing, check it on a test set. Make predictions from the features that produced labels_test and then compare them.

from sklearn.metrics import accuracy_score
correct_ratio= accuracy_score(labels_test,predicted_labels_test)

SVM

Support Vector Machines are weirdly named, almost to the point of foolishness. The "margin" is the width of the space around the dividing (classifying) line generated by the SVM algorithm. I believe that the points that constrain and limit this margin, the ones touching the margin, are the "support vectors", like they’re supporting this line somehow. I think the algorithm is supposed to be thought of as a machine to generate these support vectors, thus the margin, thus the dividing/classifying line/vector.

This Support Vector Classifier (SVC) example uses the same data defined in the Gaussian example.

A "kernel" in this business is a function that maps a low dimensionality input to a higher dimensional space with the hope that a linear classifier can cut a line or plane through the resulting mess somewhere. This is a way of cheaply introducing non-linearity into a system that a linear classifier can still slice up. Possible kernels available to use include the following.

-

linear

-

poly

-

rbf - radial basis function

-

sigmoid

-

precomputed

-

A DIY callback.

Other important parameters.

-

C - Controls the trade off between a smooth decision boundary and classifying training points correctly. Higher C means more training points are classified correctly at the risk of overfitting.

-

gamma - Defines how far the influence of a single training example reaches. Lower values mean that each example has a far reach.
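To get a feel for what gamma does, here is a minimal sketch of the RBF kernel formula, exp(-gamma * squared distance), in plain Python. The points and the two gamma values are made up for illustration. This is not how scikit-learn computes it internally, just the formula evaluated by hand.

```python
import math

def rbf_kernel(a, b, gamma):
    """RBF similarity exp(-gamma * ||a - b||^2) between two points."""
    squared_distance = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * squared_distance)

# Hypothetical points: one training example and two query points.
training_point = (1.0, 1.0)
nearby = (1.5, 1.0)     # distance 0.5 from the training point
far_away = (4.0, 1.0)   # distance 3.0 from the training point

for gamma in (0.1, 10.0):
    near_sim = rbf_kernel(training_point, nearby, gamma)
    far_sim = rbf_kernel(training_point, far_away, gamma)
    print(f"gamma={gamma}: nearby={near_sim:.4f} far_away={far_sim:.4f}")
```

With the low gamma the far away point still registers a noticeable similarity, while with the high gamma even the nearby point barely registers. That is the "far reach" versus "short reach" behavior described above.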

>>> from sklearn import svm

>>> classifierSVM= svm.SVC() # Or SVC(kernel='linear')

>>> classifierSVM.fit(features_train,labels_train)

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape=None, degree=3, gamma='auto', kernel='rbf',

max_iter=-1, probability=False, random_state=None, shrinking=True,

tol=0.001, verbose=False)

>>> classifierSVM.predict([[2,2]])

array([2])

>>> classifierSVM.predict([[-5,-5]])

array([1])

Entropy For Decision Trees

entropy = -sum(Pi*log2(Pi))

Where Pi is the fraction of items belonging to class i. If all items are homogeneous, the entropy is 0. A 50/50 split between two classes gives the maximal entropy of 1.

Decision trees maximize information gain.
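Here is a small sketch of both quantities in plain Python: entropy as the negated sum of Pi*log2(Pi), and information gain as the parent's entropy minus the size-weighted average entropy of the children. The label lists are hypothetical, and this is an illustration of the arithmetic, not what scikit-learn does internally.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    total = len(labels)
    fractions = [count / total for count in Counter(labels).values()]
    return sum(-p * math.log2(p) for p in fractions)

def information_gain(parent, children):
    """Parent entropy minus the size-weighted average entropy of the children."""
    weighted = sum(len(child) / len(parent) * entropy(child) for child in children)
    return entropy(parent) - weighted

parent = [1, 1, 1, 2, 2, 2]              # hypothetical labels, a 50/50 mix
perfect_split = [[1, 1, 1], [2, 2, 2]]   # each child is homogeneous
useless_split = [[1, 2], [1, 1, 2, 2]]   # each child is still a 50/50 mix

print(entropy(parent))
print(information_gain(parent, perfect_split))
print(information_gain(parent, useless_split))
```

The perfect split recovers all of the parent's entropy as gain, while the useless split gains nothing, which is why a tree would pick the first one.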

information_gain= entropy(parent) - [weighted average](entropy(potential children))

Decision Trees

Unlike SVM, this classifier is named so well it needs little further elaboration.

>>> from sklearn import tree

>>> tree_clf= tree.DecisionTreeClassifier()

>>> tree_clf.fit(features_train,labels_train)

DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_split=1e-07, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

presort=False, random_state=None, splitter='best')

>>> tree_clf.predict([[2,2]])

array([2])

>>> tree_clf.predict([[-4,-4]])

array([1])

One parameter that may help is min_samples_split. This keeps a tree from splitting when there are very few items in a branch. The default is 2, which means that any branch with multiple items can be split.

Neural Networks

I wondered if there was an analogous biological process to backpropagation. It looks like the answer is inconclusive.

Forget About Neurons

…originally motivated by the goal of having machines that can mimic the brain. …[the reason for learning is] they work really well… and not, certainly not, just because they’re "biologically" [air quotes!] motivated.

Due to all these and many other simplifications, be prepared to hear groaning sounds from anyone with some neuroscience background if you draw analogies between Neural Networks and real brains.

It is often worth understanding models that are known to be wrong (but we must not forget that they are wrong!)

Terminology

-

Perceptron - Takes binary inputs and produces a single binary output. Because its activation is a step function, a small change in a weight does not produce a small change in the output, so it is difficult to fine tune and not so good for incremental iterative learning techniques.

-

Sigmoid neurons - input values are between 0 and 1. Includes a weight and a bias. The output is calculated with the sigmoid function, 1/(1+e^-z). Also known as "logistic neurons" using the "logistic function". It is the quintessential activation function though others are possible. This activation function also has helpful properties for the necessary differentiation of neural networks. This kind of neuron/operation takes only 1 input and is an example of an activation function which can add non-linearity.

def sigmoid(z): return 1.0/(1.0+np.exp(-z))

def sigmoid_derivative(z): return sigmoid(z)*(1-sigmoid(z))

-

Multilayer Perceptrons - (MLP) Basically just a neural network with different layers, confusingly, made up of sigmoid neurons, not perceptrons.

-

Loss Function - Says that our training algorithm has done a good job if C(w,b) is near 0. It is sometimes called a cost function or the "objective function". This is really a proxy for the thing you really want (e.g. images classified correctly), the difference being that this is something that can be tractably optimized. A common choice is the "quadratic cost function", also known as "mean squared error" or "MSE". Here is the MSE function,

C(w,b)=(1/(2*n))*sum((y(x)-a)^2)

or shorter, np.mean((y-a)**2) (which drops the conventional factor of 1/2). There is also "MAE" which is mean absolute error. There can be other cost functions, for example, the cross-entropy cost function (aka log loss),

C=(-1/n)*sum( y*ln(a) + (1-y)*ln(1-a) )

And there are others. Note that the loss is in terms of the values used: if you are trying to predict SAT scores, a loss of 5 might be fine, but if you’re trying to predict the odds that something is classified a certain way, then .05 may not be acceptable. See "accuracy".

-

Accuracy - Where loss (see above) is about how close the function was using the actual numeric scale involved, accuracy involves (I believe) only classification problems and it is simply the ratio of items classified correctly. Where this will deviate from loss is when most data work fine, but some are disastrous. You can then have a high accuracy, but those failures drag down the loss.

-

Stochastic Gradient Descent (SGD) - A technique to find minima. Rather than considering all of the training set as a basis for where to look for the lower cost function, a few (orders of magnitude less) training inputs are chosen randomly to represent the entire known set.

-

Delta rule - This is the specific concept that establishes a strategy for updating weights in gradient descent. It is the reason that when a change in a weight produces a large change in the cost function, that weight is adjusted more aggressively. (I could imagine the complete opposite being plausible but that is just not how it’s done.) Specifically, the formula is

deltaW[j][i]= eta*(target[j]-y[j])*x[i]

where eta is the learning rate, x[i] is the i-th input, and y[j] is the j-th output.

-

Training Set - The data that the model is trained with. If the model gets these wrong, then you know you’ve failed.

-

Validation Set - Often the training data is segregated into two parts. The first part is used to do the actual training but a smaller reserved portion can be used to check if there is overfitting in between iterations of model adjustment. It’s like a mini synthetic test set.

-

Test Set - This is the data you will use to do a final check on the model. It may not even be available to you if it is part of some contest.

-

Batch Size - Instead of trying a set of inputs and seeing how that goes, it is common to try subsets of the training data in (hopefully parallelized) batches to allow the system to incorporate all of a large training collection. With the right hardware (GPUs, multiple CPUs) this can speed things up enormously. Larger batch sizes can be quicker if they are handled with parallelism, however common batch sizes are 32, 64, 128, 256, 512, generally whatever can comfortably fit in memory. Larger batch sizes can be helpful to improve accuracy also, however as the batch size increases its power as a sample approaches the true nature of the population — so there are diminishing returns. Use a lot of data and use the biggest batch size that is comfortable for your hardware. Breaking into batches turns the input vector into an input matrix.

-

Bias - Good at generalizing, stubborn about fitting new training data. Consistent.

-

Variance - Good at fitting but perhaps overfitting and missing the generalizations. Accurate. More on these terms here interestingly enough.

-

Epoch - Each pass through all of the training data. Ideally the model weights would be assessed and corrected to perfection on the first attempt, but since this is not realistic, multiple such adjustment calculations are done with the training data. Each of these epochs ideally improves the model.

-

Regularization - Refers to techniques designed to reduce generalization error, even at the expense of training error. Augmenting the data to provide some simple invariance is a simple example. Another simple idea is early stopping — once you’re at a point where improvements are minor, they’re more likely to be overfitting anyway. Fancier techniques calculate an adjustment to add to the loss value to penalize large discrepancies in neuron weight and bias parameters. It is thought that if some neurons are doing way more than others, that it may be a sign of overfitting. Basically it tries to incentivize simplicity in the model and prevent overfitting. Also dropout layers can, in theory, help with noise and overfitting. This basically entails training with various different random neurons turned off during the training steps to ensure that particular neurons are not being depended on too heavily, which may indicate overfitting.

-

Softmax function - Similar to the "pie chart function" except that values are each run through an exp() function first. Here’s Wikipedia’s example in Geogad syntax.

[1 2 3 4 1 2 3] exp dup sum / -> [.0236 .0643 .1747 .4748 .0236 .0643 .1747]

Here is a graphical look at a normal pie chart and a softmax pie chart, i.e. a pie chart with all its values run through exp(x) first. It turns the slightly larger into much larger yet, and the slightly smaller into much smaller.

Note that scaling the inputs of a normal pie chart gives different numbers but the same pie chart; the softmax version is instead unchanged when the same constant is added to every input. For machine learning, this function really is about nonlinear activations, the ease with which the derivative of exp() is computed, and handling possible negative values (e.g. between -1 and 1) conveniently too. The name implies that it is not a "hard" max where the output vector would just be [0 0 0 4 0 0 0] for the example above. You can’t take one of the zeros and figure out what it was before it was overshadowed by the hard max. But with soft max, you can reverse the operation and figure out what the inputs were, up to that shared additive constant.
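The softmax entry above can be sketched in a few lines of plain Python, next to the ordinary "pie chart" normalization for comparison. Subtracting the maximum before calling exp() is a common numerical-stability habit that I've added here; it does not change the result. The function names are my own.

```python
import math

def softmax(values):
    """Exponentiate each value, then normalize so the results sum to 1."""
    shift = max(values)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def pie_chart(values):
    """Plain normalization, with no exp() step."""
    total = sum(values)
    return [v / total for v in values]

inputs = [1, 2, 3, 4, 1, 2, 3]   # the Wikipedia example
print([round(p, 4) for p in softmax(inputs)])
print([round(p, 4) for p in pie_chart(inputs)])
```

Comparing the two printed lists shows softmax exaggerating the largest entry at the expense of the smaller ones.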

Variables

In machine learning variable names are often pretty stable and it will often be assumed that their meaning is obvious.

-

X - inputs.

-

y - The actual known good target used during training. Often a vector with many known values.

-

y-hat - The value of the prediction the model exists to produce.
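Using those variable names, here is a rough sketch of how loss and accuracy (from the terminology list) can disagree. The data is made up: four predictions are decent and one is confidently wrong. The 0.5 threshold for turning a predicted probability into a class is an assumption on my part.

```python
import math

y = [1, 1, 1, 0, 0]                  # known targets (hypothetical)
y_hat = [0.9, 0.8, 0.9, 0.1, 0.99]   # predictions; the last one is badly wrong

def mse(targets, predictions):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def cross_entropy(targets, predictions):
    """Log loss: (-1/n) * sum(y*ln(a) + (1-y)*ln(1-a))."""
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(targets, predictions)) / len(targets)

def accuracy(targets, predictions, threshold=0.5):
    """Fraction of predictions on the correct side of the threshold."""
    hits = sum((p >= threshold) == bool(t) for t, p in zip(targets, predictions))
    return hits / len(targets)

print("accuracy:", accuracy(y, y_hat))
print("mse:", mse(y, y_hat))
print("cross entropy:", cross_entropy(y, y_hat))
```

Accuracy only counts the one miss, while both losses are dominated by it, and cross-entropy punishes the confident mistake hardest.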

Convolutional Neural Networks

-

Different types of convolutions nicely illustrated.

-

Kernel Size is the small region that is sampled from the original image (or whatever data). So a result pixel may be a convolution or some kind of summary of data in a 3x3 patch of the original. The kernel size is 3x3.

-

Stride refers to how the convolution process indexes, or aims, the kernel at the original image. The whole idea is that each of the entire target set of pixels is computed using a kernel sized patch. Where that patch is focused on the input image is controlled by the stride. A stride of 1 moves one pixel over each time. This implies that the result and the source image will be pretty much the same size. You might shrink the result image by 50% by having a stride of 2, for example.

-

Padding is the term used to describe how the edges are handled. The edge pixels can be extended (which may give them undue importance) or set with a special value, or wrapped (like a torus).

-

Channels can refer to parameters where the convolution takes a different number of layers than it produces as a result. So maybe the source image has RGBA and the result image is distilled down to just a single greyscale channel.

-

An atrous convolution is also called a dilated convolution or a convolution with holes ("à trous" literally is French for "with holes"). Basically the kernel spans a larger area than the number of values it actually samples. So if you have a 5x5 kernel size but only extract the 1st, 3rd, and 5th pixels of the 1st, 3rd, and 5th rows, you are drawing from only 9 pixels spread over a 25-pixel area to calculate your result pixel. Obviously this makes no sense if you’re not in a hurry, but if you are, it’s a way to take in a wider view of the source data without using any more processing. This may be beneficial where context is super important.

-

Transposed convolution is sometimes called "deconvolution" but that may be mathematically imprecise (that would be a lossless reversal operation). This is where you take an input and get an output whose size would match that of the pure lossless deconvolution. However, there’s some necessary loss of information. Still this can be very handy for encoding/decoding networks like semantic segmentation where an original size image must be synthesized. One illustrative configuration is a dilated convolution with no stride. This will utilize each input pixel but output something scaled up.
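To make kernel size, stride, padding, and dilation concrete, here is a one-dimensional sketch in plain Python. It is a toy: real images are 2D, the padding here is the "special value" kind (zeros), and, as most ML libraries do, the kernel is not flipped. The function name and the sample data are my own.

```python
def conv1d(signal, kernel, stride=1, padding=0, dilation=1):
    """Slide kernel over signal, returning one output value per placement."""
    padded = [0.0] * padding + list(signal) + [0.0] * padding
    span = dilation * (len(kernel) - 1) + 1   # input width one placement covers
    output = []
    for start in range(0, len(padded) - span + 1, stride):
        output.append(sum(k * padded[start + i * dilation]
                          for i, k in enumerate(kernel)))
    return output

signal = [1, 2, 3, 4, 5, 6, 7, 8]
box = [1, 1, 1]   # a simple summing kernel of size 3

print(conv1d(signal, box))               # stride 1, no padding: 6 outputs
print(conv1d(signal, box, padding=1))    # zero padding keeps the length at 8
print(conv1d(signal, box, stride=2))     # stride 2 roughly halves the output
print(conv1d(signal, box, dilation=2))   # 3 samples spread over a 5-wide span
```

The dilated call still does three multiplications per output but each output looks at a 5-wide window, which is the "more context for the same processing" trade described above.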

Back Propagation

The critical mathematical breakthrough in neural networks isn’t necessarily the network, rather it’s a clever way to automatically adjust the weights of the neurons/perceptrons so that the outputs turn out to be the values they should be, i.e. error is minimized. This is done in three steps. First the inputs are propagated through the network. During training this produces an end value which can be compared against the known true value (the whole premise of "supervised learning" is that you know the answers for some training inputs). The amount the system differs from what is known to be the correct answer is the error. The trick is to figure out how much each of the weights of each neuron (each node in the NN DAG) contributes to that error. This is done in the second step which is back propagation. Back propagation figures out how much each neuron’s weight contributed to the error and therefore also how the weights of the neurons can be adjusted to reduce this error. The third step is to simply update the weights to reflect this new understanding of the system. With a hopefully improved model that should produce a smaller error, the whole process is run again. This is repeated until the error no longer can be reduced.

It turns out that backpropagation is a dynamic programming optimization technique because the intermediate partial derivatives are computed once and used repeatedly in the adjustment of all network parameters (weights, biases) saving considerable computational expense. This allows the networks to become more complex and therefore more expressive.

This post implements a very small and simple example of a neural net with back propagation. The essential code looks something like this.

import numpy as np
X= np.array([ [0,0,1],[0,1,1],[1,0,1],[1,1,1] ]) # Input data set.
y= np.array([ [0,1,1,0] ]).T                     # Output data set.
syn0= 2*np.random.random((3,4))-1                # Input-to-hidden weights.
syn1= 2*np.random.random((4,1))-1                # Hidden-to-output weights.
for j in range(60000):                           # Was xrange in Python 2.
    l1= 1/(1+np.exp(-(np.dot(X,syn0))))          # Hidden layer.
    l2= 1/(1+np.exp(-(np.dot(l1,syn1))))         # Output layer.
    l2_delta= (y-l2)*(l2*(1-l2))                 # Back prop intermediate
    l1_delta= l2_delta.dot(syn1.T) * (l1 * (1-l1)) # Back prop intermediate
    syn1 += l1.T.dot(l2_delta)
    syn0 += X.T.dot(l1_delta)

In back propagation, the question that must be answered for each variable weight in the network is how much does a particular weight contribute to the magnitude of the final error? Or put another way, when changing only a particular variable in the whole complex system by a certain amount, how much does that change the overall error? By answering this, the contributory effects of each weight variable can be ascertained and sensibly adjusted. Amazingly a good way to think about this question is with calculus in mind. The change in final error per (with respect to) the change in some contributory variable is a derivative. Each variable weight in the network graph must be considered in a derivative of the final error. dE/dw1, dE/dw2, etc.
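The three steps (forward pass, back propagation, weight update) can be sketched for the smallest possible "network": one sigmoid neuron with one weight and one bias, trained on a single example. All the numbers here are hypothetical and the error is the squared error E = 0.5*(target - a)^2. This is a toy to show dE/dw in action, not a general implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

x, target = 1.5, 1.0    # one hypothetical training example
w, b = 0.5, -0.2        # hypothetical starting weight and bias
learning_rate = 0.5
errors = []

for step in range(50):
    # Step 1: forward pass to get the output and the error.
    a = sigmoid(w * x + b)
    errors.append(0.5 * (target - a) ** 2)
    # Step 2: backward pass, dE/dw = dE/da * da/dz * dz/dw.
    dE_dz = -(target - a) * a * (1.0 - a)
    dE_dw = dE_dz * x
    dE_db = dE_dz
    # Step 3: nudge each parameter against its derivative.
    w -= learning_rate * dE_dw
    b -= learning_rate * dE_db

print("first error:", errors[0])
print("last error: ", errors[-1])
```

The error shrinks on every pass because each parameter moves in the direction that its own derivative says will reduce it.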

Chain Rule

In calculating the error contributions of each weight (dE/dwN) the graph can have many edges between the final known error value and the node with the weight of interest. The chain rule is used to differentiate composite functions which allows the process to proceed from the known final error node step by step propagating backwards through the graph until all nodes are covered.

d(f(g(x)))/dx = f'(g(x))*g'(x)

h(x) = (sin(x))^2 # Example composite fn to find derivative of.

f(x) = x^2 # Can be broken in to part 1...
g(x) = sin(x) # ...and part 2.
f'(x) = 2x # Simple derivative of part 1.
g'(x) = cos(x) # Simple derivative of part 2.

h'(x) = f'(g(x)) * g'(x) # Chain rule.
h'(x) = 2*sin(x) * cos(x) # The complex derivative.

Here’s another excellent example from this nice slow explanation of the chain rule as applied to neural networks. (I’ve adjusted the notation, so it could be pedantically wrong, but it makes the point.)

f(x) = e^( sin( x^2 ) )

u = sin(v)

f(x) = e^u

v = x^2

f'(x) = e^u * u'(v)

u'(v) = cos(v) * v' = cos(x^2) * 2x

f'(x) = df/dx = e^(sin(x^2)) * cos(x^2) * 2x

This shows the chain rule being used twice, once for f' and again for u'.
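A quick way to convince yourself the chain rule result is right is to compare it against a numerical derivative. This sketch uses a central difference with a step size I picked arbitrarily; the function names are my own.

```python
import math

def f(x):
    return math.exp(math.sin(x ** 2))

def f_prime(x):
    # Chain rule applied twice: e^u * cos(v) * 2x, with u = sin(v) and v = x^2.
    return math.exp(math.sin(x ** 2)) * math.cos(x ** 2) * 2 * x

def numeric_derivative(func, x, step=1e-6):
    """Central difference approximation of the derivative of func at x."""
    return (func(x + step) - func(x - step)) / (2 * step)

for x in (0.3, 1.0, 2.0):
    print(x, f_prime(x), numeric_derivative(f, x))
```

The two columns should agree to several decimal places at each sample point.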

Also the brilliant C. Olah has a fantastic and extremely clear explanation of back propagation (and more!).

Gradient Descent

Also called steepest descent. This is a numerical algorithm for iteratively finding a minimum of a function, for example the least-squares fit for non-linear equations. Simplified it looks something like this.

X[i] = X[i-1] - learning_rate*derivative_of_f(X[i-1])

This technique can perform badly if it has to work through a long narrow shape. For example, imagine a ball rolling back and forth across a bathtub before it finally makes it to the drain, the system’s minimum.
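Here is a small sketch of that update rule on a made-up "bathtub" function, f(x, y) = 0.05*x^2 + y^2, which is long and shallow along x and steep along y. The function, the starting point, and the learning rate are all assumptions chosen to make the back and forth motion visible.

```python
def gradient(point):
    """Gradient of the hypothetical bathtub f(x, y) = 0.05*x**2 + y**2."""
    x, y = point
    return (0.1 * x, 2.0 * y)

def descend(start, learning_rate, steps):
    """Repeatedly apply X[i] = X[i-1] - learning_rate * gradient(X[i-1])."""
    point = start
    path = [point]
    for _ in range(steps):
        gx, gy = gradient(point)
        point = (point[0] - learning_rate * gx, point[1] - learning_rate * gy)
        path.append(point)
    return path

path = descend(start=(10.0, 1.0), learning_rate=0.9, steps=100)
for x, y in path[:5]:
    print(f"x={x:.3f} y={y:.3f}")
print("final:", path[-1])
```

In the first few steps y flips sign each time (the ball crossing the tub) while x creeps slowly toward the drain at the origin.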

There are actually other algorithms that do pretty much the same thing with different trade offs. For example, the Gauss-Newton algorithm. Another is the Levenberg-Marquardt algorithm which is used, for example, by GNUPlot’s fit function.

Here’s a good simple explanation of gradient descent.

Misc

It seems like the job of optimizing the execution time of trained neural nets with architecture changes could be a job for neural nets.

— John Carmack

Recurrent Neural Networks

- LSTM

-

Long Short Term Memory

- GRU

-

Gated Recurrent Unit

Resources

Applied to Secondary Structure of Proteins

- Q3

-

100 * residues_correctly_predicted/total_residues

Worries only about helix, beta sheet, and coil (or, it seems to me "other").

- Q8

-

involves fancier elements.
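The Q3 formula is simple enough to sketch directly. Here the three states are written as H (helix), E (beta sheet), and C (coil), which is a common convention but an assumption on my part, and both sequences are invented.

```python
def q3_score(predicted, actual):
    """Percentage of residues whose 3-state label is predicted correctly."""
    if len(predicted) != len(actual):
        raise ValueError("sequences must be the same length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return 100.0 * correct / len(actual)

actual    = "CCHHHHHHCCEEEEECCC"   # hypothetical true states
predicted = "CCHHHHHCCCEEEECCCC"   # hypothetical prediction
print(q3_score(predicted, actual))
```

This prediction clips one residue off the end of the helix and one off the end of the sheet, so 16 of the 18 residues match.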

History

If you tell me precisely what it is a machine cannot do, then I can always make a machine which will do just that.

PHD

"Profile network from HeiDelberg" link "We have trained a two-layered feed-forward neural network on a non-redundant data base of 130 protein chains to predict the secondary structure of water-soluble proteins." Burkhard Rost 1993!

"For networks, such a strategy is prohibited by the limitations of computational resources."

-

Q3 = 70% (Which is frankly not bad for 1993 and only training on 130 proteins!)

DSC

"Discrimination of Secondary structure Class" link Another ancient technique. 1996 Still 126 protein training set!

-

Q3 = 70.1%

JPRED

JPred: a consensus secondary structure prediction server. link

-

Q3 = 72.9% in 1998

Other Preds included PREDATOR, MULPRED, ZPRED, others?

RaptorX

RaptorX: a Web Portal for Protein Structure and Function Prediction link

DNSS

A Deep Learning Network Approach to ab initio Protein Secondary Structure Prediction ieee link 2014 Spencer M, Eickholt J, Cheng J Used CUDA on GPUs.

-

Q3 = 80.7%

CNN Example

Next-Step Conditioned Deep Convolutional Neural Networks link From Google as part of Google Brain Residency. Feb 2017 - I’m not sure it’s really "published" (arXiv).

-

Q8 = 71.4% (Probably couldn’t find an improved Q3.)

Padded to 700 residues. Whatever.

Still seem to be training on CB513 data set which seems to have only 5534 proteins. Whatever.

RNNs + some other stuff

Protein Secondary Structure Prediction Using Cascaded Convolutional and Recurrent Neural Networks PDF 2016-04-25 This seems very complex. But maybe that’s what’s needed.

-

Q3 87.8% on CASP10, 85.3% on CASP11

MLflow

One thing is consistent across all methodologies relating to machine learning practices and that is that they are all a complete mess. The data is huge and hard to wrangle, the models are also huge and hard to wrangle, the output can be hard to interpret, tuning is a black art, different resources need to be usable (GPU, cloud, TPU, etc), documentation and record keeping are usually bad, dependencies for setups are insane, etc, etc. MLflow is styled as a way to bring some consistency to the scene to ameliorate some of this.

The system seems divided into major "components" (their word).

-

MLflow Tracking - Logs specific parameters and metrics about experiments. There is also something called "artifacts" which seems to be a way to stick any old file into the vault for safe keeping.

-

MLflow Projects - A configuration of some data science operation wrapped up in a YAML file called MLproject (reminding me of Makefile). Then when one is present you can mlflow run $PATH and it will find this setup file and know enough to reconstruct the situation and run it.

-

MLflow Models - This is a way to store actual models. All the zillion different frameworks create different "flavors" of MLflow Model. The format of such a "model" is a directory containing a special descriptor file called MLmodel, possibly YAML too (examples I’m seeing certainly look like it).

-

Model Registry - A centralized way to store models and tools to deal with them. It looks like this is kicked off by logging (I think with Tracking mentioned in this list) something like the following: mlflow.myModelFlavor.log_model(). Once it is logged, it can be "registered".

Usage

It seems like the main thing one does with MLflow specifically are commands that look like this.

mlflow run . # Run the configured project in this directory.

mlflow run . -P max_epochs=10 # Add your own override hyper-parameters.

Other parameter (-P) overrides possible include:

max_epochs, gpus, batch_size, num_workers, learning_rate,

accelerator, patience, mode, monitor, verbose.

It seems a big part of the whole added value is having Conda go crazy and build whatever baroque system you need just before the thing runs. Obviously that can get extraneous after the first run so maybe you’ll need to start doing this.

mlflow run . --no-conda

Or maybe Conda is pretty smart and can check that its requirements are present very quickly.

To get started with the logging, here is about the minimum code modification you need (shown with PyTorch).

import mlflow.pytorch
...
mlflow.pytorch.autolog() # In main before anything interesting happens.

If you want to see what MLflow observed while running the project, you can use:

mlflow ui

And then check out: http://localhost:5000

Other Systems With Similar Features To MLflow

There are systems that might be worth knowing about for comparison and understanding the ecosystem of ML management.

-

Weights & Biases - Experiment tracking, reports, collaborative dashboards, dataset and model versioning, tables, interactive data visualization, hyperparameter optimization. And last but not least "Pricing".

-

DVC - "Open-source Version Control System for Machine Learning Projects by iterative.ai". Looks like we use this too. Terrible name. "Data Version Control"? But also experiment management.

-

TorchServe seems like a management system for PyTorch specifically. That’s all fine but it does seem like you’d want to keep open the ability to try other frameworks. Still, it does seem like this system knows about MLflow and can work with it.

-

Horovod - Not sure this is directly equivalent but certainly heavily related. Seems to be a Linux Foundation project for what that’s worth.

-

Facebook’s FB Learner Flow

-

Uber’s Michelangelo

-

Google’s TFX

-

Amazon has SageMaker which seems awfully similar. There may be other Amazon things like this too.