Blender is a beast. It is one of the most impressive feats of FOSS ever created.


Resources

-

My blog post saying nice things about Blender.

-

My blog post integrating my programming language with Blender.

-

https://www.blender.org/ - Official website

-

Blender.org is now making exceptionally good and clear videos methodically explaining everything sensibly. Here is the playlist.

-

Blender source code. Looks like they know how to host their own Git repo. Good job!

-

Diagram of source code layout.

-

Modifier Encyclopedia - An incredibly helpful visual catalog of millions of Blender modifier features.

-

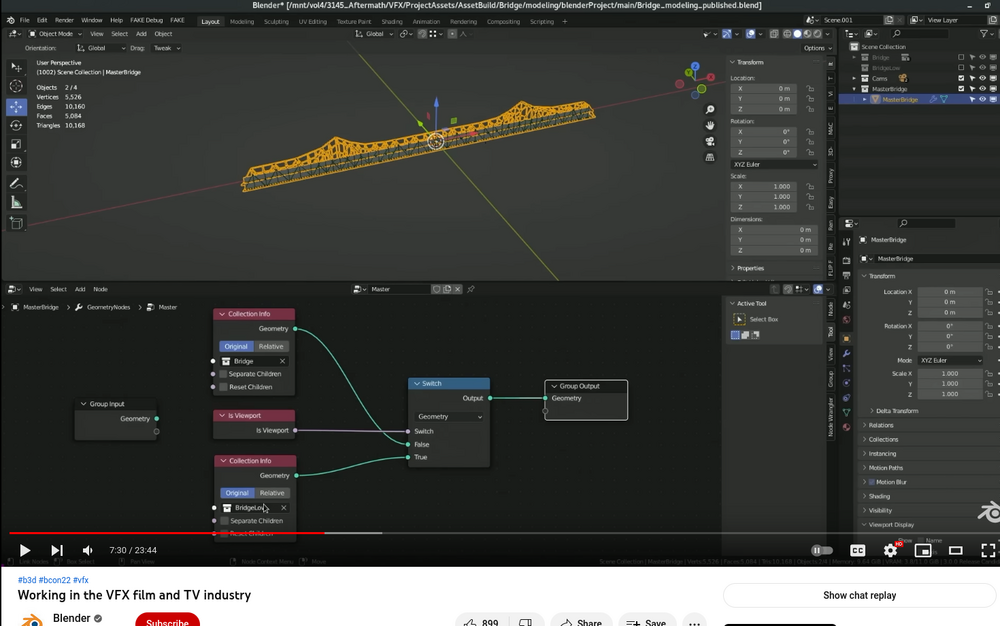

GC Masters high quality videos.

-

Good high-quality written guides.

-

A superb tips video with 100 really well thought out, properly demonstrated tips. Just to see so much of the Blender arcana being properly used is worth the 2 hours it takes to watch this. Ya, this guy is a boss.

-

Art Station - modeling showcase. Great for general CG inspiration.

-

Paper models from Blender models? Yes, there is an add-on for that!

-

HDRI - High Dynamic Range Images - used for setting a lighting base so that the reflections seem plausible. Ideally you’d take such a reference image during your shoot on location, but these are useful for faking that.

-

sharetextures - CC0 textures and 3D models.

-

Poliigon - textures, and other stuff.

-

If you really, really have to have a giant keyboard with a numpad and the complexity of an airplane cockpit, this Blender keyboard is cool.

-

Minecraft rigs. Possibly another source.

-

Ever get some weird file like wtf.3mf and you want to open it in Blender? I have had some luck with this web-based conversion tool. Obviously assume snooping.

Technical And Architectural Modeling

-

I’m pretty impressed with this Construction Lines add-on — here’s the author’s site. This focuses on construction lines but also seems to cover things obvious to CAD people like rational copying and moving with base points. Not free but it is worth $7 just to use to illustrate what CAD users find frustrating about Blender.

-

Clockmender’s CAD functions also show a frustration with normal Blender. Here is Precision Drawing Tools from the same source. Looks like TinyCAD is joining this project.

-

tinyCAD Mesh Tool Add-on - other people are frustrated by Blender not living up to its potential. Has some simple useful geometry helpers. Couldn’t find this in stock add-ons — suspect it’s for 2.8+. Still interesting.

-

Mechanical Blender is trying to bring sensible technical features to Blender. Looks early (or dead) but worth watching.

-

A nice site showing the potential of architecture in Blender.

-

This is the best video I’ve seen demonstrating good techniques and practices for dimensionally accurate technical modeling.

-

MeasureIt is an amazingly powerful add-on that allows full blueprint style dimensioning. This video, a continuation of the technical modeling video just mentioned, is the best comprehensive demonstration of it (in 2.8+).

-

Another superb demonstration of technical modeling in Blender is this video showing how to model a hard surface item directly from accurate measurements.

-

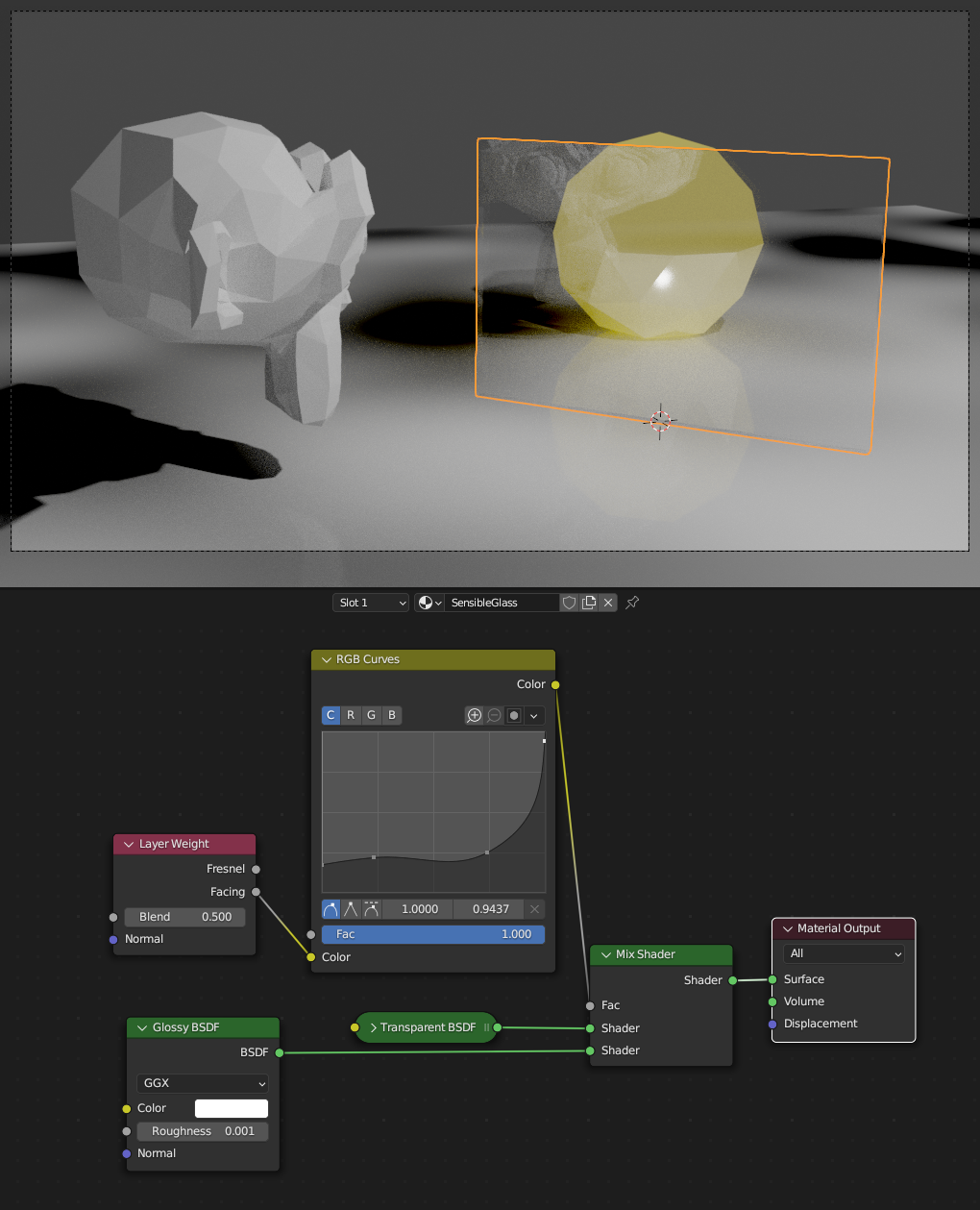

Here’s a good list of materials with their densities and IOR and other interesting properties: https://physicallybased.info/

Instruction

The only problematic thing about Blender is that, like many of the best tools for professionals, it is horrendously difficult to learn. Fortunately there are some good resources to help.

-

Udemy Comprehensive Blender 3D Modeling Course - worth every penny of $10.00

-

Aidy Burrows has the best video I have ever seen for reminding yourself about how important features of Blender (2.8+) work.

-

Interested in 2d animation? This well produced video by Dantti exhaustively covers all grease pencil features by demonstrating them all.

Screencast-keys is a non-standard addon, found here, that will highlight what keys are pressed so that observers can follow along. I think it could be helpful for knowing what key you accidentally might have pressed. Note I haven’t tried this.

Making Illustrative Animated Gifs

Making tutorials or trying to post on BlenderSE? Want fancy illustrative gifs? Look for "Animated GIFs From Screen Capture" in my video notes.

Models

Why start modeling from scratch when you can use someone else’s model that they want you to use? I don’t yet know which of these sources suck and which ones are good, but I’m listing them here for completeness. I got the following list from here.

-

CGTrader - Huge collection of high quality models in all categories. Mostly $, some free. A good place to start looking for models, especially if you are ready to pay for them. Account created.

-

Sketchfab has a lot of models and it’s even possible to import them directly from Blender using this plugin. Details discussed in this video.

-

Free3D - Blender specific section.

-

Free3D - Good diverse selection. Good selection for free, also a $ section (despite name).

-

GrabCAD - Extensive collection of models. Many engineering and technical models. Seems mostly free.

-

Clara.io - Ok collection of all kinds of random stuff. A lot of low-poly simplistic stuff. Seems more focused on the model geometry and not the rendering. Seems entirely free.

-

3dsky.org - Large collection. All types but emphasis on interiors. Some $, some free.

-

Blender Market - Not much free but Blender oriented. $$

-

Autodesk Online Gallery - Impressive collection, of course, but expect the Autodesk hassle and $$.

-

Cults - 3d printer baubles. Seems free.

-

TurboSquid - Huge collection. Often huge $$$.

-

ArchibasePlanet.com - Interiors mostly. Seems free.

-

Archive 3D - Mostly interiors. Some other stuff. Seems free.

-

3DExport - Good variety. Seems like a reseller. Slow site. $$.

-

Sweet Home 3D - Mostly low-poly architectural models. Seems good for house renovation. Seems free.

-

All3dfree.net - Architectural interiors. Seems free.

-

Artist-3D.com - Confusing site. Favors Autodesk formats? Diverse themes. Some free? Hard to tell how to acquire models.

-

3D Resources by NASA - High quality space models, mostly of actual space stuff. Free.

-

CAD Blocks Free - Seems oriented to models in native CAD program formats (e.g. AutoCAD). Pop up heavy.

-

Car Body Design - As the name implies, cars. Mostly fancy and exotic. This is actually a big resource for designing cars. Free.

-

Viz-People - Cars and interior design. Mostly $, some free.

-

model+model - Household and interior design. Some free, mostly $.

-

Unity Asset Store - Large resource of all kinds of models leaning towards game assets. Mostly small $, some free.

-

3Delicious - General collection, heavy on interior design. Looks free.

-

3DModelFree.com - Interior design. Ostensibly free.

-

Bentanji - Seems like only interiors. Confusing site.

-

Three D Scans - Some high quality free classical sculpture scans. Not a huge selection but good if you need a high quality practice piece.

-

Photobash - Commercial "online resource for Royalty-Free Photography, Masked Images and 3D Models" high quality stuff but not costless.

Textures

-

Textures.com - price scales with quality.

-

LilySurfaceScraper - Addon to import texture files from URLs and set up the shader nodes. Quick demo.

-

Solar System Scope - nice hi-res space textures. Free.

-

AmbientCG.com - also known as cc0textures.com. Seems quality and free. Try with the Lily Surface Scraper addon.

Installation

It used to suffice to just sudo apt install blender and a Debian system was happily ready to use Blender. But today it really is not sensible to use a pre-2.8 version, and unfortunately Debian will probably be mired in that for a long while. So you will probably need to go to https://blender.org/download and download their package.

Click the "Download Blender 2.83.2" button or whatever version is current. You can then find the real and proper link down at the message: "Your download should begin automatically. If it doesn’t, click here to retry." It’s helpful to know that if you’re trying to install it remotely.

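This technique avoids locally saving the archive and might be helpful too for cutting through fluff. Note that the archive is xz-compressed, so tar needs -J (-j is for bzip2), and the mirror directory must match the version in the file name:

cd /usr/local/src

URL="https://mirror.clarkson.edu/blender/release/Blender3.5/blender-3.5-linux-x64.tar.xz"

wget -qO- "$URL" | sudo tar -xvJf -

cd ../bin

sudo ln -s ../src/blender-3.5/blender blender3.5

sudo ln -s blender3.5 blender

Check the exact archive name listed on the mirror, and the directory it actually unpacks into (ls /usr/local/src), and adjust the URL and the symlink target to match. Now when you type blender, a proper modern version should start up.

Make sure /usr/local/bin is in your $PATH.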
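The mirror directory and the archive name both carry the version, so editing one and forgetting the other just gets you a 404. Here is a small Python sketch that derives both from a single version string. `blender_url` is a hypothetical helper, and it assumes the 3.x layout `release/Blender<major.minor>/blender-<full version>-linux-x64.tar.xz` (older releases used other file names), with the mirror from above as the default base.

```python
def blender_url(version: str,
                base: str = "https://mirror.clarkson.edu/blender/release") -> str:
    """Hypothetical helper: build a Linux x64 archive URL for a Blender release.

    Assumes the 3.x naming scheme, where the directory uses only
    major.minor and the file name uses the full version string.
    """
    major_minor = ".".join(version.split(".")[:2])
    return f"{base}/Blender{major_minor}/blender-{version}-linux-x64.tar.xz"


if __name__ == "__main__":
    # Feed the result to: wget -qO- "$URL" | sudo tar -xvJf -
    print(blender_url("3.5.1"))
```

Check the printed URL against the mirror's directory listing before piping it into tar.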

Set Up And Configuration

Here are some things I like to change in a default start file.

-

Get rid of the cube. Or not. It’s kind of idiomatic tradition at this point. Just learn to press "ax<enter>". But getting rid of it is an option. It does actually serve a purpose to alert you to the fact that you have a brand new untouched project.

-

Put the default camera and light in a collection called "Studio" and turn off its visibility. This makes it easy to delete them if you really don’t need them, but also easy to see the default view if that’s helpful.

-

Get rid of .blend1 files. They seem superfluous if you have good habits. (I have never used one.) See Preferences → Save & Load → Save Versions. I’d keep them (why not?) if I could put the path in /tmp or something like that. This guy also was annoyed at Blender’s unhousebroken incontinence and wrote an addon to specify a litter box. Maybe overkill.

-

Nerf F1 with a key reassignment to prevent opening browsers intended for help documentation. Uncheck Preferences → Keymap → Key Binding → type "F1" → Window → View Online Manual.

-

n shelf open. If you’re going to hide one, the t shelf is near useless.

-

Rotation point around 3d-cursor (".6").

-

Vertex snapping, not increment.

-

Viewport overlays → Guides → Statistics. On. (Or right click version in lower right.)

-

Start with plan view. Most of my projects start off with some sensible orthographic geometry. Another good reason is that, while it is not the iconic default-cube start view, it is easier to reproduce. So press "`" and then "8".

-

Output properties → Dimensions → Frame End. 240, which is exactly ten seconds at 24fps (the default 250 at 24fps is just stupid).

-

Output properties → Output → Color. RGBA.

-

Output Properties → Output = /tmp/R/f (R for render). This will create (including parents) /tmp/R/f0001.png, etc.

-

System → Memory&Limits → Undo Steps. Change from 32 to 128.

-

System → Memory&Limits → Console Scrollback Lines. Change from 256 to 1204.

-

Scene Properties → Units → Unit System = Freedom. Actually I’ve been having better luck with mm recently; still not a default.

-

Show Normals size .005 (or .05 with mm). Find this setting by going into Edit Mode (you’ll need that cube!) and then going to the viewport overlays menu that’s next to the "eclipse" looking icon on the top right bar.

-

Change all default generation sizes from 24" to 1". This may not stick and I don’t know how to make it permanent. :-( At least in mm, 24 is about 1"! :-)

-

Clipping values are usually a bit narrow by default. n menu → View Tab → View Section → Clip Start/End. I’m going with 1mm and 1e6mm. Using something less than 1mm seems to cause full time rendering confusion.

-

Perhaps fill out some custom 2d material properties. Even a couple of templates for "Color-FilledStroke" "Color-OnlyStroke" or something like that.

-

Add to quick q menu: Save Copy

-

Add to quick q menu: Preferences

-

Preferences → Animation → F-Curves → Default Interpolation → Linear

-

Preferences → Keymap → Preferences → 3d View → Extra Shading Pie Menu Items

-

Preferences → Input → Keyboard → Default To Advanced Numeric Input (allows stuff like "gx10/3" and useful on-the-fly math).

-

Rebind number keys to not hide collections! Go to Preferences → Keymap → Name and enter "Hide Collection". Uncheck the lot.

-

Preferences → Input → Default to Advanced Numeric Input - the plus side is you can do stuff like "gx100/25.4" and it will do a sensible thing. The bad part of this is that minus is a literal subtraction and no longer a shortcut for "oops, I got unlucky choosing the sign of my numeric operation - please fix that".
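The output path and Frame End settings above work together: with no `#` placeholders in the path, Blender appends the frame number zero-padded to four digits, plus the file extension. A Python sketch that only models that naming (it does not call Blender; `render_filenames` and `duration_seconds` are hypothetical helpers) and checks the 240-frame choice:

```python
def render_filenames(prefix: str, start: int, end: int, ext: str = ".png") -> list[str]:
    """Model the files a render of frames start..end (inclusive) writes."""
    # With no '#' in the output path, the frame number is zero-padded to 4 digits.
    return [f"{prefix}{frame:04d}{ext}" for frame in range(start, end + 1)]


def duration_seconds(start: int, end: int, fps: float) -> float:
    """Length of an inclusive frame range in seconds."""
    return (end - start + 1) / fps


files = render_filenames("/tmp/R/f", 1, 240)
print(files[0], files[-1], len(files))        # /tmp/R/f0001.png /tmp/R/f0240.png 240
print(duration_seconds(1, 240, 24))           # 10.0
print(f"{duration_seconds(1, 250, 24):.2f}")  # 10.42
```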

Addons I like.

-

3d View: MeasureIt

-

3d View: Precision Drawing Tools - perhaps fix the nomenclature file to be better.

$ grep xed /usr/local/src/blender-2.93.3-linux-x64/2.93/scripts/addons/precision_drawing_tools/pdt_msg_strings.py

PDT_LAB_DEL = "Relative" # xed - was "Delta"

PDT_LAB_DIR = "Polar" # xed - was "Direction"

-

Add Mesh: Extra Objects

-

Mesh: mesh_tinyCAD

-

Import AutoCAD DXF

-

Import Images as planes
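If you rename the PDT labels as shown above, the edit is lost whenever the add-on is updated, so it may be worth scripting. A Python sketch under these assumptions: the stock lines read exactly `PDT_LAB_DEL = "Delta"` and `PDT_LAB_DIR = "Direction"`, `relabel` is a hypothetical helper, and the path is the one from the grep (adjust it to your install and keep a backup).

```python
import re
from pathlib import Path

# Constant name -> (stock label, preferred label), as in the grep output above.
RELABELS = {
    "PDT_LAB_DEL": ("Delta", "Relative"),
    "PDT_LAB_DIR": ("Direction", "Polar"),
}

# Path from the grep above; adjust for your Blender version.
PDT_STRINGS = Path("/usr/local/src/blender-2.93.3-linux-x64/2.93/scripts/addons/"
                   "precision_drawing_tools/pdt_msg_strings.py")


def relabel(source: str) -> str:
    """Swap the stock labels and tag each changed line with an 'xed' comment.

    Lines already edited no longer match, so running it twice is harmless.
    """
    for name, (old, new) in RELABELS.items():
        pattern = rf'^({name}\s*=\s*)"{re.escape(old)}"'
        replacement = rf'\g<1>"{new}"  # xed - was "{old}"'
        source = re.sub(pattern, replacement, source, flags=re.MULTILINE)
    return source


if __name__ == "__main__":
    if PDT_STRINGS.exists():
        PDT_STRINGS.write_text(relabel(PDT_STRINGS.read_text()))
```

Afterwards, the same grep xed should list both edited lines.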

Other Addons to consider.

-

3d View: Stored Views

-

Object: Bool Tools

-

Object: Align Tools

-

Add Mesh: Bolt Factory

-

Mesh: 3d Print Tool Box

-

Interface: Modifier Tools

-

Mesh: F2

-

Mesh: Edit Mesh Tools

-

Export AutoCAD DXF

-

External: CAD Transform for Blender. Mine is named lcad_transform_0.93.2.beta.3. A good demo. Note that this addon hides over on the left in the menu activated with "t"; it is labeled as "CAD" with a green cube.

I also had a very elaborate start up procedure for doing video editing setup. See below for that.

I found that I prefer non-noodley noodles in the node editor. Here’s how to make sure that happens.

Edit → Preferences → Themes → Node Editor → Noodle Curving → 0

Disabling Emoji And Unicode Conflicts

Since Blender exhaustively uses every possible key combination, you can’t have obscure ones that you never use lurking around in some miscellaneous interface features. My window manager is pretty good about never needing anything that doesn’t use the OS key, but I recently discovered that [C][S]-e seems to be bound to a new-fangled system feature of "ibus". (This is interesting because Gimp seems to know how to override it when using this combo for "Export".)

It looks like the way to cure this is to run ibus-setup and go to the "Emoji" tab. Click the "…" button; click the "Delete" button; click the "Ok" button.

It might be smart to get rid of the Unicode one too (which is [C][S]-u by default).

Maybe these could be rebound to the OS key if they’re needed some day. I’m not sure that’s even possible but it is what makes sense.

3-D Modeling

X is red, Y is green. Right hand coordinates, Z is up and blue.
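"Right hand" has a precise meaning: the cross product of the X and Y axes is Z (curl the fingers of your right hand from X to Y and your thumb points up along Z). A plain-Python check of that rule; inside Blender, `mathutils.Vector.cross` computes the same product.

```python
Vec = tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    """Cross product a x b of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


X, Y, Z = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)  # red, green, blue

# In a right-handed system the axes cycle: X x Y = Z, Y x Z = X, Z x X = Y.
print(cross(X, Y) == Z, cross(Y, Z) == X, cross(Z, X) == Y)  # True True True
# Reversing the order flips the sign, so Y x X points down (-Z).
print(cross(Y, X))  # (0.0, 0.0, -1.0)
```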

Vertices and edges are fine (though weird that you can’t just spawn those without enabling the Extra Objects addon), but faces are kind of weird. There’s something kind of vague about them. They can have 3 edges (tris), 4 (quads), or a whole bunch. So what’s going on? This official Blender design document explains exactly how it all works.
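A quick way to see why tris, quads and n-gons are all just "faces": any convex face with n corners can be cut into n - 2 triangles by fanning out from one corner, so a quad is two tris and a hexagon is four. The sketch below only illustrates that count; Blender's own triangulation (e.g. the Triangulate modifier) has its own methods and also copes with concave faces. `fan_triangulate` is a hypothetical helper.

```python
def fan_triangulate(face: list[int]) -> list[tuple[int, int, int]]:
    """Split a convex face (vertex indices in winding order) into triangles.

    Every triangle shares the first vertex, which keeps the winding order.
    """
    if len(face) < 3:
        raise ValueError("a face needs at least 3 vertices")
    first = face[0]
    return [(first, face[i], face[i + 1]) for i in range(1, len(face) - 1)]


for n in (3, 4, 6):  # tri, quad, hexagonal n-gon
    tris = fan_triangulate(list(range(n)))
    print(n, len(tris), tris)
```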

Units

Here in the USA many things are measured in Freedom Units. Blender can play along. Go to the properties tabs and look for the "Scene Properties" tab which will be a little cone and sphere icon. The second item is "Units". Choose "Imperial" and "Inches" and things should be fine.
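Switching to Imperial only changes how lengths are displayed and typed; with the default Unit Scale of 1.0, Blender keeps storing them in meters internally. The arithmetic behind the inch and mm remarks elsewhere on this page (the 24" default sizes, "24 mm is about 1 inch", the gx100/25.4 example) is just the exact definition 1 inch = 25.4 mm. A plain-Python sketch:

```python
MM_PER_INCH = 25.4               # exact, by definition
M_PER_INCH = MM_PER_INCH / 1000  # Blender's internal unit is the meter


def inches_to_mm(inches: float) -> float:
    return inches * MM_PER_INCH


def mm_to_inches(mm: float) -> float:
    return mm / MM_PER_INCH


print(round(inches_to_mm(24), 1))    # 609.6 mm: the 24" default size
print(round(mm_to_inches(24), 3))    # 0.945: 24 mm is about 1 inch
print(round(mm_to_inches(100), 3))   # 3.937: what "100/25.4" evaluates to
print(round(24 * M_PER_INCH, 4))     # 0.6096: 24" as stored internally, in meters
```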

Importing From CAD

Sometimes I need to get CAD models imported into Blender and that isn’t always easy. One important trick is running the models through FreeCAD. Try exporting to STEP files from the CAD program and having FreeCAD import that and export STL files. Blender can then import those pretty well.

Window Layout

Full official details are here and actually helpful.

The old way to split areas into subdivided areas: drag the upper right corner down (horizontal split) or left (vertical split). To combine areas, make sure they are the same format (e.g. one big pane doesn’t go right into 2 horizontally split panes) and drag the upper right corner up or to the right.

In 2.8+ things seem much easier to arrange. Just go to the edge of the viewport you’re interested in fixing and look for the left-right or up-down arrow icons. Once those are visible, you can use RMB to bring up a menu that will allow you to "Vertical/Horizontal Split" or "Join Areas". Also look at the "View → Area" top button menu for another easy way to split. This allows you to customize easily without the drama of old Blender.

A huge tip for people with multiple monitors is that you can detach windows. In old Blender this is done with the same drag of the upper right corner, just hold down Shift first. Which way you drag doesn’t matter—the window is just cloned with its own window manager decoration.

In 2.8+ there is a similar technique with [S] and dragging the plus to the left or something like that. You can also use the "Window" button on the top row menu to create a "New Window" — you may need to do a lot of shuffling after that though. Another way is to use the "View" button on the top row menu and then "Areas" (which is what these Blender function regions are called) and then "Duplicate Area into New Window". This will usually duplicate the wrong window but at least it only gives you one area to reset the way you want it.

Mouse Buttons

-

LMB

-

Select (in 2.8+). Click multiple times to cycle through any ambiguous selection.

-

[S]-LMB - expand selection explicitly.

-

[C]-LMB - expand selection inclusive or "Pick Shortest Path". E.g. LMB click one vertex and then [C]-LMB a few vertices over and all in between will be selected.

-

[A]-LMB - (EM) Select edge loops (face loops in face select mode). Which loop you get depends on which edge is closest to the mouse pointer when clicked. [C][A]-LMB selects edge rings instead.

-

[A]-LMB - (OM) Bring up a selection box for ambiguous selection. For example if there is an object inside of another object, holding [A] while LMB clicking in the general direction will bring up a menu of the possible objects.

-

[S][C]-LMB - expand selection inclusive but entire area on 2 axes. In Shader/Node editor (with Node Wrangler) this inserts a "Viewer" node into the graph to preview or diagnose what that step is producing.

-

-

MMB

-

(Middle Mouse Button) rotate (orbit) view. In draw mode, accepts drawn shapes.

-

[C]-MMB - scale view (zoom/move view camera closer)

-

[S]-MMB - pan view (translate), reposition view in display or, as I like to think of it, "shift" the view

-

[S][C]-MMB - Dolly, a kind of zoom that moves the view along its viewing direction.

-

[A]-MMB - Center on mouse cursor. Drag to change among constrained ortho views.

-

-

RMB

-

Vertex/edge/face context menu (EM). Object context menu (OM).

-

[C]-RMB - Select intermediate faces to mouse cursor automatically when certain EM geometry is selected. So for example, you can get A1 on a chessboard, hold [C] and right click on A8 and get the entire A column. Also, if you’re in some kind of edit mode where you’re extruding over and over again, [C]-RMB will extrude to the current mouse position. In Shader Editor with Node Wrangler, this brings out the edge cutting scissors.

-

In Shader Editor with Node Wrangler, this does a quick connect from one node to another to the implicit hook up point.

-

[C][A]-RMB - In draw mode, starts a lasso which will encircle items for deletion. In Shader Edit mode with Node Wrangler this does a semi-automatic hook-up presenting you with a choice dialog of the possible hook-up points; so another step, but finer control.

-

-

Scrollwheel - A mini 1-axis track ball on your mouse! Brilliant!

-

SWHEEL - Scales time line.

-

[C]-SWHEEL - Pans the time line.

-

[A]-SWHEEL - "Scrubs" through time line, i.e. repositions current frame.

-
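The [C]-LMB "Pick Shortest Path" behavior and the [C]-RMB chessboard example (A1 to A8) both boil down to finding a shortest path between two selected elements and selecting everything along it. A much-simplified Python sketch, assuming a plain 8x8 grid where each square touches its four edge neighbors and every step costs the same; Blender's real operator walks the mesh topology and has its own options, and `shortest_path` and `square_name` are hypothetical helpers.

```python
from collections import deque
from typing import Optional

Square = tuple[int, int]  # (file, rank), both 0..7


def shortest_path(start: Square, goal: Square, size: int = 8) -> list[Square]:
    """Breadth-first search on a size x size grid with 4-neighbor steps."""
    prev: dict[Square, Optional[Square]] = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            break
        f, r = cur
        for nxt in ((f + 1, r), (f - 1, r), (f, r + 1), (f, r - 1)):
            if 0 <= nxt[0] < size and 0 <= nxt[1] < size and nxt not in prev:
                prev[nxt] = cur
                queue.append(nxt)
    # Walk back from the goal to recover the selected squares.
    path: list[Square] = []
    node: Optional[Square] = goal
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


def square_name(sq: Square) -> str:
    return "ABCDEFGH"[sq[0]] + str(sq[1] + 1)


print([square_name(s) for s in shortest_path((0, 0), (0, 7))])
```

On a real mesh the "in between" elements are picked the same way in spirit: whatever lies on the cheapest route between the two clicks.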

Keyboard Shortcuts

There are 1000s. Here I will try to enumerate the ones I’ve encountered. Also remember that you can go to Preferences and select the "Keymap" section and learn a lot about what the currently configured bindings (and possibilities) are. Probably best to not go too wild with changing those unless you really know what you’re doing. Note that the tooltips often show the keybinding for the operation. An extra interesting hint: if you turn off tooltips in Preferences → Interface, you can get them at any time by holding Alt and hovering over the feature. F3 searching also shows the keybinding when available.

-

[Shift]

-

In draw mode, constrains lines and shapes to orthogonal axes or equal dimensions. Does some other mysterious smoothing thing with freehand draw mode; see [A] for constraining freehand drawing.

-

While performing transformations that can use a mouse input (g, r, s, etc), shift will slow down motion.

-

Holding shift will slow down how fast values are changing when modifying a value by moving a slider.

-

-

[Control]

-

Snapping. Hold [C] or use toggle snap property button. In draw mode, holding [C] causes drawing actions to erase per the erase tool’s settings.

-

Plus LMB in video editor will select all strips after your cursor. This also works for keyframes in timeline type interfaces.

-

-

[Alt]

-

When drawing in draw mode, constrains to orthogonal axes - a bit janky. Draw mode shapes become centered at the start point, even lines.

-

When making an ambiguous selection in object mode (perhaps others) it will cause a "Select Menu" to appear where you can choose which of the objects you were after. Useful for things like nested objects inside of another.

-

Loop selecting with LMB in edit mode.

-

Scrub timelines with MMB; need to double check what modes this works in.

-

-

F1

-

"Help" - Good to nerf this with a keyboard reassignment if a normal program’s idea of a normal web browser is not going to work for you (ahem).

-

[S]-F1 - File browser.

-

-

F2

-

Rename selected (OM). Only last (bright orange) if multiple selected.

-

[S]-F2 - Movie clip editor.

-

[C]-F2 - Batch renaming in the outliner (search and replace). (Hmmm… Not working for some reason… Search for "Batch Rename" in F3. Or, interestingly, it looks like Blender is reading the keysym at a very deep low level; this means that you must use the genuine original Control key instead of a perfectly sensible remapped key. Thankfully this seems the only situation where this is a problem.)

-

-

F3

-

Open search form/menu.

-

[S]-F3 - Texture node editor. Press again for shader editor. Again for compositor.

-

-

F4

-

File menu including Preferences.

-

[S]-F4 - Python console.

-

-

F5-F8

-

In theory these are reserved to be defined by the user.

-

[S]-F5 - 3D viewport.

-

[S]-F6 - Graph editor. Press again for drivers.

-

[S]-F7 - Properties.

-

[S]-F8 - Video sequencer.

-

-

F9

-

Bring up modification menu for last operation. E.g. adjust bevel segments.

-

[S]-F9 - Outliner.

-

-

F10

-

[S]-F10 - Image editor. Press again for UV editor.

-

-

F11

-

View render.

-

[S]-F11 - Text editor.

-

[C]-F11 - View animation.

-

-

F12

-

Animate single frame.

-

[C]-F12 - Animate all frames of animation sequence. Note that this does not work if you use a remapped CapsLock as your Ctrl. There are a couple of bindings like this where the remapped control is not good enough for some weird reason and this is one of them. Use the native Ctrl key and it will work.

-

[S]-F12 - Dope sheet.

-

-

PgUp,PgDn = Page Up, Page Down

-

PgUp,PgDn - In NLA editor, moves tracks up or down.

-

[C]-PgUp - Cycle through the workspace tabs at the top (Layout, Modeling, Sculpting, UV Editing, etc.)

-

[S]-PgUp - In NLA editor, moves tracks to top (or bottom).

-

-

"."

-

Pivot menu. Like the one next to the snap magnet icon. With something selected in the outliner, "." in the 3d editor will hunt for it in the model. With something selected in the model, "." from the outliner will find it in the outliner.

-

[C]-. - Transform origin, which means fully edit the origin point as if it were an object independently of its object’s geometry. Extremely powerful and useful!

-

-

","

-

Orientation.

-

-

[`]

-

Ortho view pie menu removing the need for a numpad.

-

-

"~"

-

Pie menu. For what normally? Solves no numpad for views.

-

[S]-~ - Fly/walk navigation: moves the viewpoint through the scene like a first-person game camera.

-

-

[/] - Zooms nicely onto the selected object to focus attention on it. Press again to return to previous view. In Node Wrangler, inserts a reroute node (basically a bend or bifurcation in an edge).

-

[=]

-

[S]-= Organizes nodes when using Node Wrangler.

-

-

arrows

-

Used with "g" movements, moves a tiny increment (sort of one pixel).

-

<LRarrows> - In animation modes, go to next and previous frame.

-

[S]-<LRarrows> - In animation modes, go to first and last frame.

-

[S]-<UDarrows> - In animation modes, go to next and previous keyframe.

-

[C]-<LRarrows> - Move by word (Text Mode).

-

-

[space]

-

Start animation.

-

[S]-space - Menu.

-

[C]-space - Toggles current viewport to fill entire workspace (or go back to normal).

-

[C][S]-space - Start animation in reverse.

-

[C][A]-space - makes current viewport fill entire workspace with no menus.

-

-

[backspace]

-

Resets to default value when hovering over a form box field.

-

[C]-backspace - resets the single component of a property to default values (for example just the X coordinate rather than X,Y, and Z).

-

-

[tab]

-

Toggle object and edit mode. When splitting viewport windows, tab dynamically changes between vertical and horizontal splits in case you have second thoughts. In draw mode, if you’ve just created two points for a line (leaving the yellow dots), tab will go back to the edit mode to give you another chance at that endpoint. In dope sheet, locks current layer.

-

[C]-tab - Opens a pie menu for selecting from all of the modes (Edit, Object, Pose, etc.). Very useful for more complex things. Also opens the graph editor from the dope sheet.

-

-

[home]

-

Zoom extents.

-

Beginning of text (Text Mode).

-

[C]-home - Set start frame.

-

-

[end]

-

[C]-end - Set end frame.

-

End of text (Text Mode).

-

-

[0]

-

[C][A]-NP0 - Position camera to be looking at what your viewport is looking at. Wish I knew how to do this without a NumPad. Ah, how about n menu → View → View Lock → Lock Camera To View.

-

-

[1]

-

Applies to all number keys! In Object Mode the behavior of the number keys is essentially a bug. It’s fine for small projects where nothing is even noticed, but for a large project, you’re likely to demolish your entire project in a way that can’t be restored with "undo". What it does is hide all collections but the nth collection (first for 1, second for 2, etc.). This may seem minor and unimportant, but if you have dozens of nested collections, which number goes with which collection is immediately ambiguous. My experiments show it first counts only the top level collections. Only after all the top level collections have been assigned a number do nested collections start being assigned numbers (a breadth first search, not a depth first search). Note also that it is easy to get unintuitive ordering: for example, if you rename your second collection C and your third collection B, 3 will preserve the visibility of B, which may not be ideal. Actually, how things are ordered in the Outliner is, to me and others, a complete mystery.

What is completely inexcusable for keys so easily mistyped is that there is no way to undo it. You must manually recreate the visibility layout you had established, perhaps painstakingly, throughout your project. Sometimes [A]-h (in the outliner only!) can be beneficial, but since this unhides everything, you’ll need to go through and hide the things you really wanted hidden; this may be preferable to unhiding the things you want to see and is less likely to overlook a nested thing (you have to decide if you want a subtle small correct nested subcomponent missing from your render, or a stupid construction-only reference prop to sneak in). The best way to handle this is to disable these bindings. Search for Preferences → Keymap → Name → "Hide Collection" and uncheck all of those. If that seems like essential functionality would then be missing, just use the much easier to remember and more sensible [C]-h, which brings up a menu of collections to hide so you don’t even need to guess what goes with what. And normally it’s more sensible to use [S]-1 to just toggle that first collection’s visibility. After all, if you are interested in using the number keys for hiding collections, you can’t possibly have more than 10 anyway!

-

Vertex select mode (Edit Mode).

-

[S]-1 - Adds vertex select mode to any others active. Multiple modes are valid.

-

-

[2]

-

Edge select mode (Edit Mode).

-

[C]-2 - Subdivide automatically by adding a Subdivision Surface modifier at level 2. This makes a cube-based sphere when done with the original default (or any) cube selected.

-

[S]-2 - Adds edge select mode to any others active. Multiple modes are valid.

-

-

[3]

-

Face select mode (Edit Mode). In Object Mode stuff disappears, presumably the collection hiding described under [1]; 2 seems to undo it.

-

[S]-3 - Adds face select mode to any others active. Multiple modes are valid.

-

-

[a]

-

Select all. When editing an object or mesh with snapping active (so possibly [C] being held too) pressing a when the orange snap target circle is present will "weight" that snap point; an example of how to use this is to press a when snapped to one edge endpoint, then again over the other endpoint, allowing you to snap to the midpoint.

-

aa - Deselect all.

-

[S]-a - Add menu, for adding a new object (mesh, light, camera, etc.).

-

[C]-a - Apply menu, to apply scale and transforms, etc (OM).

-

[A]-a - Select none. (Similar to "aa" or "a + [C]-i".)

-

-

[b]

-

Box select (EM). Box mask (SculptMode).

-

[S]-b - Zoom region, i.e. zoom view to a box.

-

[C]-b - Bevel edge. Bind camera to markers (timeline). When a camera is selected, sets render region border box; related to Output Properties → Dimensions → Render Region. In the time line if you have a marker selected, [C]-b attaches the currently selected camera to the marker so you can switch cameras in the animation.

-

[C][S]-b - Bevel a corner. When "Bool Tools" addon is enabled, you can select the helper object and then the one you’re serious about (in that order) and this will bring up the quick boolean operator menu.

-

[C][A]-b - Clear render region.

-

[A]-b - Clipping region, limit view to selected box (i.e. drag a selection box first). Repeat to clear. Good for clearing off some walls on a room so you can work on the interior of the room.

-

-

[c]

-

Circle (brush) select (EM). Clay (Sculpt Mode).

-

[S]-c - Center 3d cursor (like [S]-s,1) and view all (home). Crease (Sculpt Mode).

-

[C]-c - Copy. Note that this can often be used to pick colors (and other properties) by hovering over a color patch and then pasting it elsewhere.

-

[C][S]-c - In Pose Mode, brings up the bone constraint menu, which can be handy when copying an IK rig to an FK rig etc.; select the from bone, then shift-select the to bone, then press [C][S]-c and choose Copy Rotation (or transform maybe), and use [C]-a in the constraint window to apply it permanently.

-

[A]-c - Toggle cyclic (EM of paths).

-

-

[d]

-

Hold down d while drawing with LMB to annotate using the annotation tool. Holding down d with RMB erases.

-

[S]-d - Assign a driver (Driver Editor).

-

[C]-d - Dynamic topology (Sculpt Mode).

-

[S][C]-d - Clear a driver (Driver Editor).

-

[S]-d - Duplicate - deep copy.

-

[A]-d - Duplicate - linked replication.

-

-

[e]

-

Extrude. [C]-RMB can extend extrusions once you get started. Continue polyline and curve segments in draw mode. Brings up the tracking pie menu in the Movie Clip Editor’s Tracking mode? Set end frame to current position while in timeline. Also in the dopesheet, when pressed while hovering the pointer between some keyframes, this may allow you to move all of the selected ones on the pointer side of your current position; so, set your frame position cursor, select the keyframes to consider moving (perhaps [a]), put the pointer over the side you want to move, then press [e], then adjust the position of that side’s keyframes all at once (a demonstration). Note that [S]-t is similar but squishes them as you reposition.

-

[S]-e - Edge crease.

-

[S][C]-e - Interpolate sequence in Draw Mode - pretty useful actually since this needs to be done a lot and it’s buried deep in the menus (at the top).

-

[C]-e - Edge menu. Contains very useful things like "bridge edge loops". Graph editor easing mode.

-

[A]-e - Shows extrude menu. If you hover over the gradient of the "ColorRamp" node and press [A]-e, you’ll get an eyedropper which can be used to populate the ramp’s value; can be clicked multiple times for multiple colors.

-

-

[f]

-

Fill. Creates edges between vertices too, e.g. to close an open path. See j for join. In the node editor "f" will automatically connect nodes, as many as are selected. In a brush mode such as sculpt, weight, texture, etc., f changes brush size.

-

[C]-f - Face menu (EM). In some other editors it seems to act as a find/search shortcut instead. In weight painting mode it sets the weight value, adjusted interactively like brush size.

-

[S]-f - Brush strength (sculpt and paint modes). The WASD association comes from pre-2.8 Blender, where [S]-f was fly mode.

-

[A]-f - In Edit Mode for bones, switches the direction of the bone: the head and tail exchange places.

-

-

[g]

-

Grab - Same idea as translate/move. I think of it as "Go (somewhere else)".

-

gg - Pressing g twice slides the selection along neighboring geometry (vertex or edge slide).

-

[S]-g - Select similar — normals, area, material, layer (grease pencil) et al. — menu.

-

[C]-g - Vertex groups menu (EM). Create new collection dialog (OM) — though I can’t figure out how to actually do anything with this. In the Shader/Node editor, combines selected nodes into a node group.

-

[A]-g - Reset position of object. Remove bone movements in Pose Mode.

-

[C][A]-g - Shader/Node editor ungroup grouped nodes.

-

-

[h]

-

Hide selected. In draw mode, hide active layer. Disable strips in the VSE and NLA editors. In the node editors it will collapse (hide) the node to be as small as possible (see [ctl+h]).

-

[S]-h - Hide everything but selected. In draw mode, hide inactive layers.

-

[C]-h - Hooks menu. In a node editor with a node selected this will trim down the node so that it just shows relevant link connections (as opposed to the full "hide").

-

[A]-h - Reveal hidden. In draw mode, reveals hidden layers. In the VSE and NLA, re-enables strips.

-

-

[i]

-

Inset (EM) with faces selected. Press i twice for individual face insets. Insert keyframe (OM/Pose). Inflate (Sculpt Mode).

-

[C]-i - Invert selection.

-

[A]-i - Delete keyframe (OM/Pose).

-

-

[j]

-

Join (EM), i.e. Connect Vertex Path. With "fill" between two opposite vertices of a quad, you get the edge between them but the quad face hasn’t changed. Join will split that quad face in two. The subdivide function can do the same thing; access it at the top of the EM context menu (RMB). In the Image Editor during a render preview, it cycles through the render slots, which can be mysterious if you hit it by accident.

-

[C]-j - (OM) Joins the selected objects into one. Also joins grease pencil strokes. In the Node Editor, puts the selected nodes in a frame.

-

[A]-j - Triangles to quads (inverse of [C]-t).

-

-

[k]

-

Knife tool. Hold [C] to snap (e.g. to mid points). Snake hook (SculptMode).

-

-

[l]

-

(EM) Select all vertices, edges, and faces that are "linked" to the geometry the mouse pointer is hovering over. This works in edge select mode too. Layer (Sculpt Mode).

-

[C]-l - Select linked geometry, i.e. everything connected. In Object Mode, it brings up the Link/Transfer Data menu; this allows you to send objects from one scene to another, and other similar things.

-

[S]-l - In Pose Mode, add the current pose to the current Pose Library (as some kind of one frame "action" or something).

-

[A]-l - In Pose Mode, browse poses in the current Pose Library.

-

[S][A]-l - In Pose Mode, brings up a menu of poses in the Pose Library so you can delete one, i.e. pretty safe.

-

[S][C]-l - In Pose Mode, rename the current pose. Why not F2? Don’t know.

-

-

[m]

-

Move to collection (OM). Move grease pencil points to another layer (EM). Add marker (timeline). Mute a node in node editor to disable its function temporarily.

-

(EM) Merge menu. Collapses vertices into one, which is important for creating a single vertex. "By Distance" collapses vertices that are within a distance you specify of each other. In pre-2.8 Blender the merge menu was [A]-m and "Remove Doubles" was a separate command; now plain m in Edit Mode brings up the merge menu, and "By Distance" mostly does what Remove Doubles did.

-

[Shift+m] - Link to collection. Where plain [m] moves an object to a collection, this adds it to another one as well: the same object (not a copy) stays in the initial collection and also shows up in the new one.

-

[Ctrl+m] - Followed by the axis (e.g. "x" or "y", etc.), mirrors the object immediately. No copy. Mirrors cameras too if you need reversed images. Rename marker (timeline). Works on points in grease pencil edit mode.

-

[Ctrl+Shift+m] - Curve modifier menu (noise, limit clamping, etc) in graph editor. Quickly does selection mirror in edit mode; use the "extend" in the F9 control to add the other side, not just switch them over.

-

[Alt+m] - (EM) Split menu (by selection, faces by edges, faces&edges by vertices). Like Separate but keeps the geometry in the same object. Think of opening a box. Can also pull off bonus copies of edges if they’re selected alone. Clear box mask in sculpt mode.

-

-

[n]

-

Toggle "Properties Shelf" (right side) menus.

-

[S]-n - Recalculate normals. (If you need to see them, display normals with "Overlays" menu just over the Properties Shelf.) Recalculate handles in path EM.

-

[C]-n - New file.

-

[A]-n - Normals menu.

-

-

[o]

-

Proportional editing - note that the influence radius is controlled with the stupid mouse wheel (or PgUp/PgDn).

-

-

[p]

-

Separate (EM), e.g. bones. Pinch (SculptMode). Set preview range (dope sheet, timeline).

-

[S]-p - In Shader/Node editor puts selected nodes in a frame.

-

[C]-p - Parent menu.

-

[A]-p - Clear preview range (dope sheet, timeline).

-

[C][A]-p - Auto-set preview range (dope sheet, timeline).

-

-

[q]

-

Quick favorites custom menu. Use RMB on menu actions to add them to the quick favorites menu.

-

[C]-q - Quit.

-

[C][A]-q - Toggle quad view: 4 viewports, three orthographic (top, front, right) plus a user perspective view. Note that in quad view, for some reason MeasureIt annotations are invisible.

-

-

[r]

-

Rotate.

-

rr - Double rr goes to "trackball" mode.

-

[S]-r - Repeat last operation.

-

[C]-r - Loop cuts. Note that number of cuts is controlled with PgUp and PgDn. You can do partial cuts by hiding the faces (h) that form the boundary.

-

[A]-r - Reset rotation value of an object. Remove bone rotations in Pose Mode.

-

-

[s]

-

Scale. Set start frame to current position while in timeline.

-

[S]-s - Snap/cursor menu. Smooth (SculptMode). Save As from image editor.

-

[C]-s - Save file.

-

[S][A]-s - (EM) Turns an object into a sphere! (Does it need to be manifold?)

-

[S][C][A]-s - Shear.

-

[A]-s - Clear scale (OM). In Edit Mode it subjects selected geometry to the Shrink/Fatten tool, which does pretty much what that sounds like. Remove bone scale changes in Pose Mode. In the image editor, saves the image. In movie tracking, unhides the search area. In grease pencil edit mode, changes thickness of strokes where the points are selected. In path editing with a handle selected, changes extrude width. In the Node/Shader Editor this swaps the inputs of the current node.

-

-

[t]

-

Toggle main "3D View" tool shelf (left side) menus. Interpolation mode (dope sheet, graph editor).

-

[C]-t - Triangulate faces (inverse of [A]-j). In path editing with handle selected, changes tilt/twist of the curve. In the VSE, [C]-t changes the display from minutes:seconds+frames (02:15+00) to simple frames, matching every other default time display.

-

[S]-t - Flatten (Sculpt Mode). When lights are selected, they will follow the mouse cursor; this works on several at once, so select all lights by type to move every light. See [e] in the dope sheet for how this can be used to stretch/compress keyframe locations.

-

-

[u]

-

UV mapping menu (EM). In draw mode it brings up the Change Active Material menu. In grease pencil edit mode, turns on bezier curve editing.

-

-

[v]

-

Rip Vertices (EM). In grease pencil edit mode, makes selected points their own segment, detaching them from the original; that is probably the general idea everywhere. Graph editor handle type. In the node editor the mouse buttons affect the nodes, but if you want to scale the background preview image, try v and [A]-v.

-

[S]-v - Slide vertices (EM). This constrains movement along existing geometry.

-

[C]-v - Paste. In Edit Mode, the Vertex menu.

-

[A]-v - Rip Vertices and fill (EM). In the node editor, zooms in on the background preview image (v zooms out).

-

[S][C]-v - Paste flipped: in Pose Mode this pastes the copied pose mirrored left/right, which is how I’ve seen it used for setting keyframes with a mirrored pose. (A good example. Another good one at 17:40 too.)

-

-

[w]

-

Change selection mode (box, brush (circle), freeform border). In draw mode it is rumored to bring up the context menu. I’ve had problems with this not working very similar to what is described here; using RMB for the context menu works just fine.

-

[S]-w - Bend tool (EM). Possibly also the reconstruction pie menu in the Movie Clip Editor’s Tracking mode?

-

[C]-w - Edit face set (SculptMode).

-

-

[x]

-

Delete. Draw tool in sculpt mode. Swap colors (cf. Gimp) in Image Editor and Texture Paint.

-

During transform operations, constrain to the X axis; with shift, constrain to the other two axes instead (the YZ plane).

-

-

[y]

-

During transform operations, constrain to the Y axis; with shift, constrain to the other two axes instead (the XZ plane). In draw mode, brings up Change Active Layer menu, which includes a New Layer option.

-

-

[z]

-

z - Viewport shading mode: wireframe, solid, material preview, rendered (a pie menu in 2.8+).

-

[C]-z - Undo.

-

[S][C]-z - Redo.

-

[A]-z - Toggle X-ray (solid, but transparent) mode.

-

[S][A]-z - Toggle display of all overlay helpers (grid, axes, 3d cursor, etc).

-

During transform operations, constrain to the Z axis; with shift, constrain to the other two axes instead (the XY plane).

-

This video has a lot of tips about shortcuts for the node editor.
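The "By Distance" option in the Merge menu ([m] in Edit Mode) collapses vertices that sit closer together than a threshold. Here is a minimal pure-Python sketch of that idea, assuming vertices are plain (x, y, z) tuples. merge_by_distance is a hypothetical name, the 0.0001 default is just a plausible small threshold, and Blender’s real operator also rewires edges and faces, which this skips.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def merge_by_distance(verts: List[Vec3], threshold: float = 0.0001) -> Tuple[List[Vec3], List[int]]:
    """Greedily merge vertices closer than `threshold`.

    Returns the surviving vertices and a remap list where remap[i] is the
    index (into the survivors) that original vertex i was merged into.
    """
    survivors: List[Vec3] = []
    remap: List[int] = []
    for v in verts:
        # Reuse the first survivor within the threshold, if there is one.
        for idx, s in enumerate(survivors):
            if math.dist(v, s) <= threshold:
                remap.append(idx)
                break
        else:
            # Nothing nearby: keep this vertex as a new survivor.
            survivors.append(v)
            remap.append(len(survivors) - 1)
    return survivors, remap


verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.00005, 0.0, 0.0), (1.0, 0.0, 0.00002)]
merged, remap = merge_by_distance(verts)
print(len(merged), remap)  # 2 [0, 1, 0, 1]
```

The remap list is what a real tool would use to point edges and faces at the surviving vertices. This greedy scan is O(n²); anything serious would want a spatial index such as a k-d tree.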

Numpad Number Keys On Numeric Keypad

"Since you have all those stupid useless keys sitting there that you never use, you might as well use them, right?" So thought the Blender devs. Well, I had a similar thought, which was to get a less idiotic keyboard that didn’t have all that extraneous cruft. But Blender is really keen on being the one piece of software that justifies stupid keyboards. But there are tenuous workarounds.

The numpad numbers tend to change the view.

-

np1 - Front

-

np2 - Orbit down

-

np3 - Right side

-

np4 - Orbit left

-

np5 - Perspective/Orthographic

-

np6 - Orbit right

-

np7 - Top

-

np8 - Orbit up

-

np9 - Opposite

-

np0 - Camera

-

np/ - Isolate selected by zooming to it and hiding everything else.

-

np+ - Zoom in. [C]-np+ in grease pencil edit mode selects more points near the ones selected.

-

np- - Zoom out. [C]-np- in grease pencil edit mode selects fewer points near the ones selected.
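np5 flips between perspective and orthographic projection. Here is a sketch of the difference, assuming a simplified pinhole camera at the origin looking down -Z. This is not Blender’s actual view matrix, and the function names are hypothetical. Perspective divides by depth, so farther things shrink. Orthographic just drops depth, which is why ortho views are the ones to use for lining things up.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_perspective(p: Vec3, focal: float = 1.0) -> Tuple[float, float]:
    """Pinhole projection for a camera at the origin looking down -Z."""
    x, y, z = p
    depth = -z  # distance in front of the camera
    if depth <= 0:
        raise ValueError("point is behind the camera")
    return (focal * x / depth, focal * y / depth)


def project_orthographic(p: Vec3, scale: float = 1.0) -> Tuple[float, float]:
    """Orthographic projection: depth is simply ignored."""
    x, y, _ = p
    return (scale * x, scale * y)


near = (1.0, 1.0, -2.0)
far = (1.0, 1.0, -4.0)
print(project_perspective(near), project_perspective(far))    # (0.5, 0.5) (0.25, 0.25)
print(project_orthographic(near), project_orthographic(far))  # (1.0, 1.0) (1.0, 1.0)
```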

Strange And Uncertain Features

I’m still trying to sort out these features but am noting them here so they don’t get completely forgotten.

-

[Space] - Brings up the "search for it" menu. Just type the thing you want and that option is found, often with its proper key binding shown. This changed a lot with 2.8+: by default [Space] now plays the animation and the search menu moved to F3.

-

[C]-n - Reload Start-Up File (object mode) OR make normals consistent (edit mode)

-

[C]-b - Draw a box which, when switched to "render" mode, will render just that subsection.

-

[C]-LMB - In edit mode, extrudes a new vertex to the position of the mouse target. Can be used like repeatedly extruding but without the dragging.

-

[C]-MMB - Amazingly, this can scale menus. For example to make them more readable or make more of it fit.

-

[A]-C - Convert. This converts some fancy object like a metaball or a path or text to a mesh. Or convert the other way, from mesh to curve.

Edit Mode Keys

-

p - Separate geometry from the same mesh into multiple objects. The "loose parts" option makes each air gapped structure its own object.

Object Mode Keys

-

L - Make local menu.

-

[F6] - Edit the last operation’s properties (F9 in 2.8+). Useful for changing the number of segments of round objects when you don’t have a mouse wheel.

Here is a necessarily bewildering key map reference from blender.org.

Note that if something like [A]-LMB tries to move the entire application window because that is how the Window manager is set up, it’s worth the effort to change that over to the Not-Exactly-Super key. For me on Mate, I go to System → Preferences → Look and Feel → Windows → Behaviour → Movement Key. Fixing that helps a lot with Blender.

Node Editing Key Bindings + Node Wrangler

The second most common thing said in Blender videos (after "Apply transforms") is "enable Node Wrangler". Unfortunately this bumps up the complexity of key bindings by quite a bit. I have found the official documentation for Node Wrangler to be lacking (for example, this page doesn’t mention "m" to mute nodes). Part of the problem is that some of the bindings are being handled by Node Wrangler and some are just part of the Blender interface without the addon. Since I don’t care whose turf war is responsible for the functionality, I’ll just try to note the useful bindings I use when editing nodes.

-

[h] - Hide node. Toggles the node’s collapsed, compact form.

-

[m] - Mute node. Temporarily disables the node.

-

[s] - Scales selected nodes. The useful part of this is s x 0 (or s y 0) followed by [enter] to align them.

-

[/] - Insert a reroute point. See [Ctrl][x].

-

[Ctrl][x] - Remove node preserving through connections. Good for getting rid of a reroute node you accidentally inserted.

-

[\] - Link active to selected.

-

[backspace] - Reset node to default settings.

-

[Alt][x] - Delete unused or muted nodes.

-

[Shift][p] - Put a frame around the selected nodes. Note that you can label the frame with [F2].

-

[Shift][s] - Substitutes node with a different one. (Oops. This is now deprecated. Look for a native version soon.)

-

[Shift][Ctrl]-LMB - Create a temporary short circuit to the final output for previewing operations.

-

[Ctrl][Shift][t] - With a (Principled BSDF, maybe others) shader selected, this will do a full "texture setup"; this will allow you to go select texture maps and it will put them all in the right boxes (an example).
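Why does the s x 0 trick align nodes? Scaling by zero along X about a pivot moves every selected node’s X onto the pivot’s X and leaves Y alone, so the nodes line up in a column. Here is a sketch that treats node locations as (x, y) pairs and assumes the pivot is the average of the selected locations. scale_about_center is a hypothetical helper, not Blender’s code.

```python
from statistics import fmean
from typing import List, Tuple

Point = Tuple[float, float]


def scale_about_center(points: List[Point], sx: float, sy: float) -> List[Point]:
    """Scale 2D node locations about their average position (the pivot)."""
    cx = fmean(x for x, _ in points)
    cy = fmean(y for _, y in points)
    return [(cx + (x - cx) * sx, cy + (y - cy) * sy) for x, y in points]


nodes = [(100.0, 0.0), (140.0, -200.0), (90.0, -400.0)]
# s x 0: zero scale on X only, Y untouched.
aligned = scale_about_center(nodes, sx=0.0, sy=1.0)
print(aligned)  # [(110.0, 0.0), (110.0, -200.0), (110.0, -400.0)]
```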

-

Views

-

/ - Toggle global/local view

-

[Home] - View all

-

[S]-F - Fly mode with WASD controls (also E&Q for up/down, escape to exit). That is the pre-2.8 binding; in 2.8+ fly/walk navigation is [S]-`.

-

. - View selected (Maybe only numpad)

-

5 - Toggle Orthographic/Perspective

-

1 - Front

-

[C]-1 - Back

-

3 - Right

-

[C]-3 - Left

-

7 - Top

-

[C]-7 - Bottom

-

0 - Camera

-

[A]-h - Show hidden

-

H - Hide selected (also note [C]-LMB on the eye in the Outliner hierarchy)

-

[S]-H - Hide unselected

-

[A]-M - Merge - Makes two vertices the same. Or at least in the same place.

Note that these number keys are the 10 number pad keys. If the top-row number keys change layers instead, you may need to set "Emulate Numpad" in File → User Preferences → Input tab (Edit → Preferences → Input in 2.8+).

In Blender 2.8 some new useful interface features appear. Now you can go ahead and use the limited number keys (assuming you don’t have a stupid number pad) to select vertex, edge, or face editing. So how do you get quick access to viewports? Use the "`" backtick key to bring up a new style wheel menu from which you can use the mouse or numbers to select the view you want.

Simple Coloring

Often when modeling I would like something more helpful than everything showing up default gray. This can be done by going to the little down arrow box to the right of the "shading" menu which is located above the "n" menu on the upper right. Then you can choose "Random" and the objects will be colored in random different colors instead of default gray. That’s often enough for my simple needs.

Clipping Seems Stuck

Sometimes you’re looking at a model and it disappears and becomes very difficult to find. This can happen when the clipping planes are set such that the whole model is cut out. To fix this, bring up the sidebar with "n", look for the "View" section, and adjust the clip values ("Clip Start" and "End"). Setting "Start" to something very small (it can’t be exactly 0) is a good way to try to get things back to visible; for a huge model, raising "End" may be what’s needed.

Overlay Stuck Turned Off

Sometimes you want to see a grid and axes and all that good stuff. This one is related to the clipping problem because that may act up too. Maybe you only see the 3d cursor. You go to the overlays pull-down and it all looks like it should be on. This tends to happen when I import something from someone else, especially if there was a conversion from some other kind of modeler. What may be going on is that the scale of the model is truly enormous. The solution can be as simple as changing the model’s units to something sane and scaling it to fit. Maybe press Home if you lose it when it shrinks back down. It can be quite mysterious: you import, say, a flower, and it’s the size of a 10-story building. The overlay is on and fine; it’s just smaller than an aphid by comparison and practically invisible to the interface.
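The "scale it to fit" step is just a ratio. Here is a sketch that assumes you know roughly how big the thing should be and uses the largest bounding-box extent as the measure of its current size. The 25-unit flower and its 0.5 m target are made-up numbers, and fit_scale is a hypothetical helper. You would type the resulting factor into the object’s scale, then apply it with [C]-a.

```python
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def fit_scale(verts: Iterable[Vec3], target_size: float) -> float:
    """Uniform scale factor that makes the largest bounding-box extent equal target_size."""
    xs, ys, zs = zip(*verts)
    extent = max(max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))
    if extent == 0:
        raise ValueError("degenerate geometry: zero size")
    return target_size / extent


# A "flower" that came in 25 units tall but should be about 0.5 m.
flower = [(-1.0, -1.0, 0.0), (1.0, 1.0, 25.0), (0.5, -0.5, 12.0)]
factor = fit_scale(flower, target_size=0.5)
print(factor)  # 0.02
```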

Scaling/Panning Seems Stuck

Sometimes it seems like you can’t zoom or pan the view. The trick here is to get the 3d-Editor window and go to View→Frame Selected (formerly View Selected). This has a shortcut of . on the numpad (if you have one). That’s super frustrating, so this tip can be very important.

Another solution that may be easier is to be in object mode (perhaps by pressing tab) and then press the "Home" key. This resets the view stuff. It would be nice to figure out what’s really going on there but persistent confusion may exist.

Background Images For Reference

A very common workflow technique is to freehand sculpt 3d assets on top of (or in front of, etc) a 2d reference image. These images don’t hang around for final rendering and are not part of the model per se. They are just available as helper guides to put things in roughly the right place so they look good with respect to reference material.

New 2.8 Way

The new system in 2.8+ is set up for a special kind of "empty" to contain an image. These can be created with [S]-a → "Image". This gives you a choice of "Reference" or "Background". It seems that background images will show other objects in front of them while reference images may still be persistently visible. This has some subtleties explained here.

Here are the three kinds of image objects.

-

Reference - Can be at any orientation. It is basically an empty with a scaled image instead of axes or arrow or some other marker. Like all empties, it doesn’t render.

-

Background - Very few and subtle differences from the reference image empty. The main one I found is that it won’t display the back side of the rectangle while a reference empty will. Also, solid mesh objects will be shown over the background image even if that empty is closer to the camera or viewer; this puts the image always in the background. A -1 scale will flip the images.

-

Images As Planes - Can do anything a plane can do, but with your image slapped on it as a texture automatically. This means that this plane with its image is renderable where the other types are not visible at render.

In the Empty’s settings there are check boxes for whether it should be visible in Perspective, Orthographic, both, or neither. Orthographic can be helpful if you have a front photo, a top photo, and a side photo and want the others to go away when you switch views.

If two empty images share the same distance from the viewer and are superimposed, the one that is drawn on top is the one closest overall (in perspective). You can see this by putting two images in the same place and g sliding one up halfway. Then change the view position to look from high, then low, and the order will change.

Background HDRI Images

Sometimes you try to render something shiny (or anything actually) and it looks very computery because there’s nothing normal out in the wider world reflecting naturally off the object. This is especially problematic with isolated objects which look pretty unnatural just floating in space. To help cure this, you need to tell Blender’s "world" about what sort of ambient background is out there.

You could do worse than to start at Poly Haven and go to their HDRI section. Pick one that is somewhat like the mood of the scene you’re going for and download it.

Note that this file can be a .exr or .hdr file. Best to stick to OpenEXR, which was created by ILM and seems wholesome. Blender also offers an OpenEXR Multilayer variant (several layers or passes in one file), which is slightly less universally supported and probably not necessary if you don’t know you need it. All of these seem to be compressed but lossless.

To get Blender to start using this HDRI as a background: Go to the World Properties tab. Click Color and under Texture choose Environment Texture. This will then provide a file box where you can specify the path to your file.

If you want to adjust how your image is sitting on the background open up the Shader Node Editor. Choose the World pull down (next to the editor type icon). Then add two nodes: a texture coordinate node (from Input section) and a mapping node (from Vector section). Connect the Texture Coordinate’s Generated output into the vector input of the mapping Node. Connect the mapping node’s vector output to the hdr image node’s vector input. By playing with things in the mapping node, especially the Z rotation, you can get what you need. This process is explained in this video.
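The mapping node’s Z rotation spins the direction vectors before they are looked up in the panorama, which is why it slides the HDRI around the horizon. Here is a sketch assuming a common equirectangular convention: u comes from the azimuth around Z and v from the elevation. The exact signs and zero-azimuth position Blender uses are my assumptions here, and the function names are hypothetical. The takeaway is that a 90° Z rotation shifts the lookup by a quarter of the image width.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_z(d: Vec3, angle_rad: float) -> Vec3:
    """Rotate a direction about the world Z axis (what the mapping node's Z rotation does)."""
    x, y, z = d
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)


def direction_to_equirect(d: Vec3) -> Tuple[float, float]:
    """Map a direction to (u, v) in 0..1 on an equirectangular panorama."""
    x, y, z = d
    u = -math.atan2(y, x) / (2.0 * math.pi) + 0.5  # azimuth around the horizon
    v = math.atan2(z, math.hypot(x, y)) / math.pi + 0.5  # elevation, 0.5 = horizon
    return (u, v)


forward = (1.0, 0.0, 0.0)
print(direction_to_equirect(forward))  # (0.5, 0.5)
# After a 90 degree Z rotation, u moves by about a quarter of the image width.
print(direction_to_equirect(rotate_z(forward, math.radians(90))))
```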

HDRI stands for High Dynamic Range Image and is often made of images taken with a camera at multiple exposure settings so it can provide more information about what the scene is doing in different conditions (no regions washed out or too dark). Here are some good examples.
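The multiple-exposure idea can be sketched in a few lines for a single pixel. This assumes a linear camera response; real merges, such as the Debevec and Malik method, first recover the camera’s response curve. Each exposure’s value divided by its exposure time estimates scene brightness. A weighted average then favours well-exposed samples over clipped or near-black ones. The hat-shaped weight and the function names are illustrative choices.

```python
from typing import List


def hat_weight(value: float) -> float:
    """Trust mid-tones most; clipped samples (0 or 1) get zero weight."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_exposures(pixel_values: List[float], exposure_times: List[float]) -> float:
    """Estimate relative radiance for one pixel from several exposures.

    pixel_values are normalised to 0..1 and assumed linear in light.
    """
    num = 0.0
    den = 0.0
    for value, t in zip(pixel_values, exposure_times):
        w = hat_weight(value)
        num += w * value / t
        den += w
    if den == 0.0:
        raise ValueError("every sample is clipped or black; no usable exposure")
    return num / den


# A pixel with relative radiance 2.0 shot at 1/8 s, 1/4 s and 1 s (the last one clips).
print(merge_exposures([0.25, 0.5, 1.0], [0.125, 0.25, 1.0]))  # 2.0
```

The clipped 1 s sample gets zero weight. Otherwise its value/time of 1.0 would drag the estimate down, which is exactly the washed-out information loss HDRIs are meant to avoid.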

This kind of image can be assembled with Gimp — here’s a decent beginner guide. And a more in depth guide.

It should be possible to make the dynamic range "high" with Gimp. To make stitched panoramas, you can look into Hugin. Compiling it is no fun but it seems to be available with apt install hugin.

In a pinch, there are some low-res environment images that come with Blender that are used in the Material Preview mode. These can be found in your installation at:

/${MYPATH}/blender-3.5.0/3.5/datafiles/studiolights/world

Old Way - Pre 2.8

Make sure the "Properties Shelf" is on with "n". Look for "Background Images" down near the bottom — you might have to scroll. Click "Add Image". Select your image from the file system. The rest is pretty self-explanatory once you find it. If nothing shows up, you may not be aligned to the correct view. Pressing 7 will show a reference image set to display on "top" views.

If that still doesn’t work, you are probably in "Perspective" mode even though you pressed 7 and it sure doesn’t look like it. Double check that it does not say "Top Persp" in the top left corner of the modeling window — it should say "Top Ortho". To toggle, make sure the mouse is hovering over the modeling window and press 5.

Remember that the control settings can be specific to a window. For example, you may have a top down view that is blocked by your model. You set the top down view property to "front" and nothing happens. But if you set that property while in the side view’s window, it applies only to that window, even if you later switch that window to top view. To have the window showing your top view take those properties, you have to go there and press "n" to open the properties and set it there.

Origin And 3D-Cursor

I find the distinction here can be tricky to get used to.

The origin is the tri-colored unit vectors with a white circle around it. The 3d-Cursor is a red/white circle crosshairs. Position the 3D-Cursor by LMB; note that it should stick to the things (e.g. faces) sensibly. Note that this is less obvious in wireframe mode. When in "Object Mode" in the tool shelf, there can sometimes be an "Edit" submenu; in that can be found a "Set Origin" pull down. This includes "Geometry to Origin" and "Origin to Geometry". Also "Origin to 3D Cursor" and "Origin to Center of Mass".

-

[S][C][A]-c - Bring up menu for origin management (pre 2.8 - see below)

-

. - Move origin to 3D-Cursor. In 2.8+ this sets the pivot point in a handy way.

-

[S]-C - center view on something pleasant and move the 3-d cursor to origin

-

[S]-S - Open snap menu, handy for putting the 3d cursor to selected or grid, etc. One of the best techniques for positioning the cursor is to use [S]-S and then "Cursor To Selected" which will put it perfectly in the middle of the face.

In 2.8+ to reposition the origin of your objects, select the object in object mode, click the "Object" button on the bottom menu, choose "Set Origin", and pick the thing you need such as "Origin to Geometry". Or in Object Context Menu (RMB in OM) look for "Set Origin" - also easy.
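Here is what "Origin to Geometry" has to do behind the scenes. It moves the origin to the middle of the mesh without anything visibly moving, so the vertex coordinates (which are stored relative to the origin) shift by the opposite amount. This sketch ignores rotation and scale and uses the median (average) center; the operator also offers a bounds center. origin_to_geometry is a hypothetical helper.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def origin_to_geometry(location: Vec3, local_verts: List[Vec3]) -> Tuple[Vec3, List[Vec3]]:
    """Return a new object location and re-centred local vertices.

    Ignores rotation and scale: world position = location + local vertex.
    """
    n = len(local_verts)
    center = tuple(sum(v[i] for v in local_verts) / n for i in range(3))
    new_location = tuple(location[i] + center[i] for i in range(3))
    new_verts = [tuple(v[i] - center[i] for i in range(3)) for v in local_verts]
    return new_location, new_verts


loc = (10.0, 0.0, 0.0)
verts = [(2.0, 0.0, 0.0), (4.0, 0.0, 0.0), (4.0, 2.0, 0.0), (2.0, 2.0, 0.0)]
new_loc, new_verts = origin_to_geometry(loc, verts)
print(new_loc)    # (13.0, 1.0, 0.0)
print(new_verts)  # [(-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.0)]
```

"Origin to 3D Cursor" is the same bookkeeping with the cursor position (converted to local space) in place of the computed center.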

3d Cursor

In old Blender (pre 2.8), when you clicked with the most natural LMB action, the 3d cursor was placed. Although this is now only true in the Cursor tool mode ([S]-space, space) or with [S]-RMB, clearly this action is thought to be important. What the hell is it good for?

-

It is where new objects will show up.

-

The "origin" can be moved to it.

-

It can be where things rotate if you set the pivot point (button right of display mode button) to 3d Cursor.

-

Optionally where the view rotates — "n" menu → "View" Section → "Lock To Cursor" checked — This is worth doing!

To set the 3d cursor’s location more specifically than random LMB madness, there are a couple of different options.

-

If you want a rough position, the LMB will work, but in v2.8+ make sure you’re in the Cursor mode and not, say, the Select Box mode.

-

In the "n" menu under the "View" tab, the "3D Cursor" section has a form box for explicit entry of X,Y,Z location.

-

Often you want the cursor placed with respect to some geometry. [S]-s brings up the cursor placement wheel menu. Doing [S]-s and then 2 will put the cursor on the selected geometry. Note that by doing this and checking the "n" menu’s coordinates (see previous item) you can take measurements and find out locations. Another example to clarify this useful use case is if you want the cursor at the "endpoint" of some other edge in the model.

-

Note that when you get the cursor on some existing geometry, you can go to the View→3d Cursor section of the "n" menu and put math operations in the location box. So if you want to move the cursor up from a known endpoint you can [S]-s,2 select the endpoint, then go to the Z box for 3d cursor location and put "+2.5" after whatever is there to move the cursor up 2.5 units.

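Some technical details, from the Python console (in 2.8+ the cursor lives at C.scene.cursor; older versions used C.scene.cursor_location):

C.scene.cursor.location  # Query

C.scene.cursor.location = (0, 0, 1)  # Set

C.scene.cursor.location.x += 0.1  # Increment X in a controlled way

To see where this comes in handy, see measuring distances.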

Measuring Distances

What if you simply want to know the distance between two points in your model? Not so easy at all with Blender! (Well, at least according to this very bad answer to the question.)

It looks like in 2.8, there is now a prominent feature to measure stuff! Yay! How this got overlooked until now is a complete mystery.

To use it check out [S]-space + m. (This was formerly [A]-space I believe.) LMB hold on the first point to measure. Then drag to the second point. If you mess it up, you can pick up the ends and reposition them. You can also use [C] to constrain the endpoints of the measurement to snap points.

If these little measurement lines hang around as dashed lines, it can be tricky to delete them. Click on the ends of the unwanted phantom measurement ruler and then del or x.

MeasureIt Tools

There is an included add-on that is pretty nice which creates dimension line geometry. With the new native "Measure" tools, this add-on (and other similar ones) become a lot less important. However, if you need to actively communicate dimensions as in a shop print, this add-on is still excellent.

Generally to use it, you simply select two vertices or 1 edge in Edit Mode and click the "Segment" button. Sometimes tweaking the position is required. See troubleshooting below.

Troubleshooting MeasureIt

Don’t see MeasureIt in the "View" tab of the "n" menu? Maybe the addon is not enabled. Look at Edit → Preferences → Add-ons and check the box for "3D View: MeasureIt".

An annoying flaw that has frustrated me is that the addon’s visibility is off by default!! Note the "Show" button: if you click that, it will make the measurements from the addon visible. Clicking it again will "Hide" the measurements. It’s frustrating because you assume the default is to show the stuff if you’ve bothered to use it, but that’s not how it works.

Note that for some annoying reason MeasureIt annotations are invisible in quad view! This is problematic because if you’re doing something technical that could make use of MeasureIt, you’re also more likely to be using quad view. As a reminder for how to turn it off, try [C][A]-q to toggle and ` for individual view selection.

You can see the dimension lines but they are all crazy and not at all properly automatically lined up with the mesh or axes or anything discernible. If you expand the settings (the little gear icon) on one of the measurements in the "Items" list, it will have a checkbox for "Automatic Position". Obviously you want automatic positioning, so turn OFF automatic position! I found that to cure alignment issues. Maybe then you’ll need to play around with "Change Orientation In __ Axis" to really put the aligned dimension where it should be.

If you’re trying to make a final render with the MeasureIt dimension lines included, you will be disappointed. The way it works is that the measurement lines are created in their own separate transparent image. One thing to keep in mind is to export RGBA (not RGB), especially if you’re just looking at the measureit_output in the Image Editor (check the "Browse Image" thingy to the left of the image name to see if there’s a second image from MeasureIt hiding in there).

Importing the scene render’s final image and the MeasureIt overlay image into layers in Gimp, you can combine them for what you’re after. Or use ImageMagick:

composite measureit_output.png coolpart.png coolpart_w_dims.png

Keeping the undimensioned one can maybe be used nicely to toggle the dimensions on and off with JavaScript.
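Per pixel, what composite does here is the standard "over" operation: the overlay’s alpha decides how much of the dimension line versus the underlying render shows through. That is also why the MeasureIt image needs to be RGBA; without alpha there is nothing to say where the overlay is empty. Here is a one-pixel sketch assuming straight (non-premultiplied) alpha, 0..1 channel values, and an opaque render underneath. The colours are made up.

```python
from typing import Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]


def over(fg: RGBA, bg: RGB) -> RGB:
    """Straight-alpha 'over': blend the overlay pixel onto an opaque background pixel."""
    a = fg[3]
    return tuple(f * a + b * (1.0 - a) for f, b in zip(fg[:3], bg))


dim_line = (0.0, 0.0, 0.0, 1.0)  # opaque black dimension line
empty = (0.0, 0.0, 0.0, 0.0)     # fully transparent MeasureIt pixel
render = (0.8, 0.6, 0.4)
print(over(dim_line, render))  # (0.0, 0.0, 0.0)
print(over(empty, render))     # (0.8, 0.6, 0.4)
```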

DIY Python Measurements

My technique is as follows.

-

Select the object of the first point.

-

Tab to be in edit mode.

-

"a" until selection is clear.

-

Make sure vertex mode is on.

-

Select the first point.

-

[S]-s to do a "Cursor to Selected".

-

In a Python console type this:

p1 = C.scene.cursor.location.copy()

-

Select the second point in a similar way.

-

[S]-s to do a "Cursor to Selected" again.

-

In a Python console type this:

p2 = C.scene.cursor.location.copy()

-

Then:

print(p2-p1)
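print(p2-p1) shows the offset along each axis. For the straight-line distance, Blender’s mathutils.Vector has a .length attribute, so print((p2 - p1).length) should give it directly. To sanity-check the arithmetic outside Blender, here is a plain-Python sketch. measure() is a hypothetical helper and the coordinates are made up.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def measure(p1: Vec3, p2: Vec3) -> Tuple[Vec3, float]:
    """Return the per-axis offset from p1 to p2 and the straight-line distance."""
    delta = (p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2])
    return delta, math.hypot(*delta)


delta, dist = measure((1.0, 2.0, 0.0), (4.0, 6.0, 0.0))
print(delta, dist)  # (3.0, 4.0, 0.0) 5.0
```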

Also in Edit mode, under "Mesh Display" there is a checkbox for showing edge "Length" info. But that has its limitations too if the points are on different objects. Note that this can show comically incorrect values! The problem may be that an object was scaled in object mode and the transformation was not "applied". Try [C]-a to apply transforms. I was able to go to object mode, select all with a, and then [C]-a and then apply rotation and scale. Details about this problem.
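Why unapplied scale skews the numbers: vertex coordinates are stored in the object’s local space, and the object’s scale only multiplies them on the way to the screen. Take an edge that is 1 unit long locally on an object scaled 2x along X; it is really 2 units long. Applying scale bakes the factor into the coordinates and resets the scale to 1. Here is a sketch assuming a scale-only transform (no rotation), with made-up values and hypothetical helper names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def apply_scale(v: Vec3, scale: Vec3) -> Vec3:
    """Bake a per-axis object scale into a local vertex coordinate."""
    return (v[0] * scale[0], v[1] * scale[1], v[2] * scale[2])


def edge_length(a: Vec3, b: Vec3) -> float:
    return math.dist(a, b)


a, b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
scale = (2.0, 1.0, 1.0)
print(edge_length(a, b))                                          # 1.0 (local)
print(edge_length(apply_scale(a, scale), apply_scale(b, scale)))  # 2.0 (world)
```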

Volume Measurements

Sometimes you want to design something complicated and know how much concrete or 3d printing pixie dust the thing will require. Blender has an add-on called Mesh: 3D-Print Toolbox which can do this. Just go to user preferences, "Add-ons", and enable it. Then you’ll get a tab for it. You can then click Volume or Area for a selected object and look at the bottom of that panel for the results.
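Here is one standard way a mesh volume can be computed. For a closed mesh with consistently outward-facing triangles, sum the signed volumes of the tetrahedra formed by each triangle and the origin (a divergence-theorem trick). I’m assuming the toolbox does something equivalent; bmesh also offers a calc_volume() method. This is not its actual code. The unit-cube data and helper names are illustrative. Note that a hole in the mesh silently breaks the result, which is why the toolbox’s manifold checks matter.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def signed_triple(a: Vec3, b: Vec3, c: Vec3) -> float:
    """a . (b x c): six times the signed volume of the tetrahedron (origin, a, b, c)."""
    cx = b[1] * c[2] - b[2] * c[1]
    cy = b[2] * c[0] - b[0] * c[2]
    cz = b[0] * c[1] - b[1] * c[0]
    return a[0] * cx + a[1] * cy + a[2] * cz


def mesh_volume(verts: List[Vec3], tris: List[Tri]) -> float:
    """Volume of a closed, consistently oriented triangle mesh."""
    total = sum(signed_triple(verts[i], verts[j], verts[k]) for i, j, k in tris)
    return abs(total) / 6.0


# Unit cube, each face split into two triangles wound counter-clockwise from outside.
CUBE_VERTS = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0),
              (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 1.0, 1.0)]
CUBE_TRIS = [(0, 2, 1), (0, 3, 2),   # bottom
             (4, 5, 6), (4, 6, 7),   # top
             (0, 1, 5), (0, 5, 4),   # front
             (3, 7, 6), (3, 6, 2),   # back
             (0, 4, 7), (0, 7, 3),   # left
             (1, 2, 6), (1, 6, 5)]   # right
print(mesh_volume(CUBE_VERTS, CUBE_TRIS))  # 1.0
```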

Align

Most people align things by just eyeballing it; this is wrong. But Blender doesn’t always make it easy to do perfectly. And perfectly is the correct way!

Align Objects

The object alignment is pretty easy. There is a whole Align addon that ships with Blender. It can be useful and I think mostly obvious how to use it. But you can also just copy transform coordinates if you only have a couple.

Align Mesh

Let’s say you get a mesh from some random source and it has had its transforms applied but they’re not quite right. Maybe you have a ship scene where the ship is rocking in the rough sea but you want to model new things parallel to the deck — how do you make this model’s mesh change so that all the deck points are in the same world Z? You can’t even use "Local" coordinates because they’re parallel with the ocean and not the deck.

The trick is using the Align View tool to get a foothold. Here are the steps.

-

Go to Edit mode and, in face select mode, select a face that should lie in one of the major planes but currently doesn’t (e.g. part of the deck).

-

Use the View → Align View → Align View to Active → Top menu item to get a view that’s looking straight down on this misaligned face.

-

[Shift+s,2] to put the cursor on that face.

-

Tab back to object mode and [Shift+a,m,p] to add a plane.

-

The plane will still be out of alignment! But if you go to the F9 "Add Plane" post hoc menu you’ll find an Align pull down. Set that to View. Now this plane should be aligned with the geometry on your original object that you want to align.

-

There is probably some way to copy transforms directly (Align Tools addon?) but I won’t remember that as easily as simply parenting the target object to the alignment plane (select them in that order and [Ctrl+p]).

-

Then select only the plane and zero out its rotation angles. The target object should come along and now be aligned.

-

You must unparent ([Alt+p]) the target object (keeping transforms of course) before deleting the helper plane.

The target object should be properly aligned now!
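The Align View and parenting trick amounts to finding the rotation that takes the chosen face's normal onto world +Z and applying it to the whole mesh. Here is a plain-Python sketch of that math using the Rodrigues rotation formula. It is not how Blender implements Align View. The tilted "deck" points and the function names are made up for illustration:

```python
import math

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def face_normal(p0, p1, p2):
    """Unit normal of the face through three of its vertices (right-hand rule)."""
    e1 = tuple(b - a for a, b in zip(p0, p1))
    e2 = tuple(b - a for a, b in zip(p0, p2))
    return normalize(cross(e1, e2))

def rotate(v, axis, angle):
    """Rodrigues' formula: rotate v about the unit vector `axis` by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    k_x_v = cross(axis, v)
    k_dot_v = dot(axis, v)
    return tuple(v[i] * c + k_x_v[i] * s + axis[i] * k_dot_v * (1.0 - c)
                 for i in range(3))

def align_to_z(verts, normal):
    """Rotate every vertex so that the unit vector `normal` ends up pointing along +Z."""
    z = (0.0, 0.0, 1.0)
    axis = cross(normal, z)
    sin_theta = math.sqrt(dot(axis, axis))
    cos_theta = dot(normal, z)
    if sin_theta < 1e-12:                        # normal already parallel to Z
        if cos_theta > 0.0:
            return list(verts)
        axis, angle = (1.0, 0.0, 0.0), math.pi   # upside down: flip about X
    else:
        axis = tuple(a / sin_theta for a in axis)
        angle = math.atan2(sin_theta, cos_theta)
    return [rotate(v, axis, angle) for v in verts]

# Made-up "deck" points lying on a plane tilted 0.3 rad about the X axis.
tilt = 0.3
deck = [(x, y * math.cos(tilt), y * math.sin(tilt))
        for x, y in [(0, 0), (1, 0), (1, 1), (0, 1), (0.5, 2.0)]]
normal = face_normal(deck[0], deck[1], deck[2])
leveled = align_to_z(deck, normal)
zs = [p[2] for p in leveled]
print(max(zs) - min(zs))  # should be ~0 (floating-point noise): all deck points share one Z
```

Like the Blender trick, this only levels the face. Any spin about Z is left arbitrary.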

Layers

Those little 2x5 grids in the menu bars are layer slots (this is Blender 2.7x; 2.8 replaced layers with collections). To change the layer of an object, select it and press "m". This brings up a layer grid to select where you want it. To view multiple layers, shift-click the boxes in the grid.

Grid

The basic grid seems like the kind of thing that should be controlled in user preferences (like Inkscape) but it is not. Turn on the "n" menu and look for the "Display" section. There is a selection for which axis you want the "Grid Floor" to be (usually Z). Then adjust the scale and size.

Objects

Objects can be dragged around and placed in different hierarchical arrangements in the "Outliner". This has sometimes gotten stuck for me, which is pretty strange, but reloading can cure it.

Having a good object hierarchy can make operations easier since it allows finer control of hiding or excluding from rendering.

To create a new object from part of an existing mesh, use [S]-d to "duplicate" the selected geometry and then hit "p", which brings up the "Separate" menu, allowing the "Selection" (or "By Loose Parts") to be made into their own objects.
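Separating by loose parts splits a mesh into its connected components: vertices joined by edges end up in the same new object. Here is a minimal union-find sketch of that grouping in plain Python. It assumes a mesh given as a vertex count plus an edge list, and the function name is hypothetical. This is not Blender's code:

```python
def loose_parts(num_verts, edges):
    """Group vertex indices into connected components (one group per loose part)."""
    parent = list(range(num_verts))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps the trees shallow
            v = parent[v]
        return v

    for a, b in edges:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra  # merge the two components

    groups = {}
    for v in range(num_verts):
        groups.setdefault(find(v), []).append(v)
    return sorted(groups.values())

# Two separate triangles plus a lone vertex.
edges = [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3)]
print(loose_parts(7, edges))  # [[0, 1, 2], [3, 4, 5], [6]]
```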

The complementary operation is to join objects. For example, if you input some reference lines on a boat hull and each is its own object, you can’t put faces between them. You must join them into the same object first. In object mode, select the objects and press [C]-j and they will become part of the same mesh.
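Joining is conceptually the reverse. The vertex lists are concatenated, and the second object's indices are shifted by the first object's vertex count so they still point at the right vertices. Here is a plain-Python sketch using hypothetical (verts, edges) tuples. A real join also has to account for each object's transform, which this skips:

```python
def join(mesh_a, mesh_b):
    """Join two (verts, edges) meshes into one, offsetting mesh_b's vertex indices."""
    verts_a, edges_a = mesh_a
    verts_b, edges_b = mesh_b
    offset = len(verts_a)
    edges = edges_a + [(i + offset, j + offset) for i, j in edges_b]
    return verts_a + verts_b, edges

# Two separate reference lines on a hull, each its own object.
line_a = ([(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)], [(0, 1)])
line_b = ([(0.0, 1.0, 0.5), (4.0, 1.0, 0.5)], [(0, 1)])
verts, edges = join(line_a, line_b)
print(edges)  # [(0, 1), (2, 3)]

# Only now can a single face reference all four vertices.
face = (0, 1, 3, 2)
```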

Parenting

Parenting is a very important concept that allows objects to be organized. For a long time I used it less than I should have because the metaphor was confusing to me: children are not normally the first to appear in a family, followed by the parent. But when selecting objects to make a parent-child relationship in Blender, that is exactly the order: children first, parent last.

For me a much better metaphor, one that conveniently starts with the letter p and makes the selection order and the one-to-many aspect seem natural, is to mentally replace the Blender verb "parent" with "piggyback" (or "piggyback on to"). As an added bonus, "piggyback" implies a physical connection, which is usually relevant for so-called parented objects. While it’s true that multiple passengers don’t usually ride the piggy at the same time, it’s totally plausible if the piggy is strong enough. (Certainly more plausible than a child having only one biological parent - ahem.) This dictionary definition of the verb piggyback pretty much exactly sums up what Blender parenting is: "to set up or cause to function in conjunction with something larger, more important, or already in existence or operation".

Each object can be thought of as having a single slot for a parent.

Just as moving a file into a directory makes that directory a parent of the file, there is an analogy to mv fileA fileB fileC parentdir, since the last item in the arguments becomes the parent for all the other items. Of course the better analogy would involve a directory and subdirectories, since all items can themselves be a parent. To get the terminology straight, imagine that files/subdirs are parented to the directories that contain them.

It is possible to assign a single parent object (the "carrier") to multiple child objects (the "riders"). As long as the parent object is selected last (it becomes the "active object", glowing the brightest orange), the parent operation will parent each of the other selected objects to it.
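You can model the single parent slot and the mv-like selection order with a plain dictionary from child to parent. This is a toy Python sketch with hypothetical names, not bpy. The last name in the selection plays the active object. It also doesn't guard against parenting loops, which Blender refuses:

```python
def parent_selected(parents, selection):
    """Parent each selected object to the last one selected (the active object).

    `parents` maps child name -> parent name; each child has exactly one slot,
    so parenting again simply overwrites the old entry.
    """
    *riders, carrier = selection
    for rider in riders:
        parents[rider] = carrier
    return parents

def chain(parents, name):
    """Follow parent slots from an object up to its root."""
    path = [name]
    while path[-1] in parents:
        path.append(parents[path[-1]])
    return path

parents = {}
parent_selected(parents, ["wheel_left", "wheel_right", "axle"])  # like mv a b dir
parent_selected(parents, ["axle", "chassis"])
print(chain(parents, "wheel_left"))  # ['wheel_left', 'axle', 'chassis']
```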

One of the options in the [Ctrl+p] parenting menu is "Object (Keep Transform)". This is subtle and doesn’t matter for most operations. The basic idea is this: if an object is transformed because of its parent and you then parent it to a different object, does it first revert to its own basic transform, or does it get a kind of "apply" that keeps the previous parent’s influence and only then follow the new parent? An example would be if two characters were going to hand each other an object. The object would be parented to A’s hand and at the moment of hand-off, you want it parented to B’s hand. What you probably don’t want is that when parenting to B, the object returns to where A’s hand was at the start of the scene, where the object was originally modeled (and its transforms applied) and originally parented to A. That’s where it would go if it were no longer under A’s influence, but with Keep Transform, its new position/rotation/scale is accepted as the basis for this child object.
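Here is a translation-only toy model of the hand-off. It assumes, as I understand Blender's behavior, that parenting records the parent's current position (a "parent inverse") so the child doesn't jump at that moment. It also assumes that Keep Transform first bakes the child's current world position into its own location. Real Blender uses full 4x4 matrices, and the class and function names here are hypothetical:

```python
def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

class Obj:
    def __init__(self, location):
        self.location = location               # the object's own (basis) location
        self.parent = None
        self.parent_inverse = (0.0, 0.0, 0.0)  # parent's world position when parented

    def world(self):
        if self.parent is None:
            return self.location
        # Only the parent's movement since parenting is added to the child's own location.
        return add(self.location, sub(self.parent.world(), self.parent_inverse))

    def set_parent(self, parent, keep_transform=False):
        if keep_transform:
            self.location = self.world()  # bake the old parent's influence
        self.parent = parent
        self.parent_inverse = parent.world()

def hand_off(keep_transform):
    hand_a = Obj((0.0, 0.0, 0.0))
    hand_b = Obj((6.0, 0.0, 0.0))
    cup = Obj((1.0, 0.0, 0.0))          # where the cup was originally modeled
    cup.set_parent(hand_a)
    hand_a.location = (5.0, 0.0, 0.0)   # A carries the cup over to (6, 0, 0)
    before = cup.world()
    cup.set_parent(hand_b, keep_transform)
    return before, cup.world()

print(hand_off(False))  # ((6.0, 0.0, 0.0), (1.0, 0.0, 0.0)): cup jumps back
print(hand_off(True))   # ((6.0, 0.0, 0.0), (6.0, 0.0, 0.0)): cup stays in B's hand
```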

If you want to temporarily suspend parenting effects, with the parented object selected you can go to the "Active Tool and Workspace settings" tab (which looks like a screwdriver and a wrench) and choose "Options"; then one of the "Transform" options will be to "Affect Only… Parents". Check that and the parent can be adjusted with the children staying fixed.

For bones, the general strategy is that distal bones piggyback on the proximal bones by default, assuming the bones were extruded distally. For example, the tib-fib (shin bone) piggybacks on, or is parented to, the femur (thigh bone). There the femur is the parent, selected last during the parenting operation. The idea of "bone parenting" as a specific parent mode is for when you want some other object (e.g. a robot’s form/shape mesh or an animal’s skin) to piggyback on a specific bone as opposed to the whole armature.

Simple Things Not So Simple

Simple Points And Lines

With Blender it is strangely easier to model a cow than a simple Euclidean line. Seriously, just getting a simple line is surprisingly challenging. I’m not the only one who ran into this (here and here).

As far as I can tell, you must create a complicated entity (like a plane) and remove vertices until it is as simple as you like. There may be a better way to get started, but I don’t know it.

Use [A]-m to merge vertices to a single point!

Once you have chopped something down to a line (or even a single vertex), you can extend that line with new segments by Ctrl-LMB; this will extend your line sequence to where the live mouse pointer is (not the 3D cursor).

Another similar way is to select the point to "extend" and press e (for "extrude"); then you can drag out a new line segment. This is less precise in some ways because the new point is not right under the mouse position. However, it can be very helpful to press e and then something like x, +, 3, which extends the line sequence 3 units in the positive X direction. Not putting the sign can result in absolute coordinates. Press Enter when you’re done.
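Extruding the end vertex of a line adds one new vertex at an offset plus one edge connecting it. Here is a plain-Python sketch of building a line sequence that way with relative offsets. The data layout and function name are hypothetical, and it always extrudes from the most recently added vertex:

```python
def extrude(verts, edges, offset):
    """Add a vertex at (last vertex + offset) and connect it to the last vertex."""
    last = len(verts) - 1
    verts.append(tuple(a + d for a, d in zip(verts[last], offset)))
    edges.append((last, last + 1))

verts, edges = [(0.0, 0.0, 0.0)], []    # start from a single vertex
extrude(verts, edges, (3.0, 0.0, 0.0))  # like e, x, +, 3
extrude(verts, edges, (0.0, 2.0, 0.0))  # like e, y, +, 2
print(verts)  # [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (3.0, 2.0, 0.0)]
print(edges)  # [(0, 1), (1, 2)]
```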

It looks like there is now an add-on that addresses this nonsense. Go to Preferences → Add-ons and search for Add Mesh: Extra Objects. This will give you many things like Mesh → Single Vert → Add Single Vert. Also Curve → Line (though it may be better to extrude that "Single Vert"). There are a lot more too. Worth activating!

Trim Mesh Vertices

AutoCAD had a command trim and another called extend, and they were incredibly useful. Blender is weirdly deficient here. However, if you’re experienced enough, there is a shrewd, idiomatic Blender way.

Basically you scale the vertices to zero in Edit Mode. This mostly makes sense when your trimming is aligned to the coordinate system, so you can constrain the scale to one axis at a time. For example, to make a bunch of points with random heights all have the same Z value (e.g. flatten a mountain) just select them and [s,z,0].
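Scaling along one axis moves each selected vertex's coordinate toward the pivot: new = pivot + factor * (old - pivot). With a factor of 0, every vertex lands on the pivot's value. The flattened height therefore depends on the pivot point, which by default is the Median Point, the average of the selected vertices. Here is a plain-Python sketch of that arithmetic, with a hypothetical function name:

```python
def scale_axis(points, axis, factor, pivot=None):
    """Scale the coordinate `axis` (0=X, 1=Y, 2=Z) of each point about `pivot`.

    With pivot=None the pivot is the average of the points, like Blender's
    default "Median Point" pivot for a vertex selection.
    """
    if pivot is None:
        pivot = sum(p[axis] for p in points) / len(points)
    result = []
    for p in points:
        q = list(p)
        q[axis] = pivot + factor * (p[axis] - pivot)
        result.append(tuple(q))
    return result

mountain = [(0.0, 0.0, 1.0), (1.0, 0.0, 4.0), (0.0, 1.0, 7.0)]
print(scale_axis(mountain, 2, 0.0))
# [(0.0, 0.0, 4.0), (1.0, 0.0, 4.0), (0.0, 1.0, 4.0)]: flattened to the average height
print(scale_axis(mountain, 2, 0.0, pivot=0.0))
# [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]: flattened to z = 0
```

The second call corresponds to setting the pivot point to a 3D cursor sitting at z = 0 before typing [s,z,0].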

Tubes, Pipes, Cables, Complex 3d Shapes

A very common task I have when modeling is something like a racing bicycle’s handlebars or its brake cables or a curved railing or drain p-trap, etc. Any kind of free-form piping or tubing. Of course there is a way to do this in Blender and luckily it’s not too horrifically difficult.

Round

If you just need a normal round pipe or cable, it seems this is now easier than ever. Just create the pipe’s path with Add → Curve → Bezier. Then go to "Object Data Properties", whose icon should look like a curve and is right above the Materials icon. Open up the "Bevel" section and choose "Round". You can adjust the "Depth" setting to change the thickness of the pipe; in theory this is the "radius of the bevel geometry" but be careful, because in practice I couldn’t quite get that to make sense.
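Conceptually, a round bevel sweeps a circle along the path: at each path point, a ring of vertices is placed in the plane perpendicular to the path's direction. Here is a plain-Python sketch of generating those rings. It assumes the ring radius simply equals the bevel depth and ignores Blender's per-point radius, resolution, and twist handling. All names and the path are hypothetical:

```python
import math

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def ring(center, tangent, radius, segments=8):
    """Vertices of a circle of `radius` around `center` in the plane perpendicular to `tangent`."""
    t = normalize(tangent)
    # Any helper vector not parallel to t will do for building a perpendicular frame.
    helper = (0.0, 0.0, 1.0) if abs(t[2]) < 0.9 else (1.0, 0.0, 0.0)
    u = normalize(cross(t, helper))
    v = cross(t, u)  # unit length because t and u are orthonormal
    points = []
    for i in range(segments):
        a = 2.0 * math.pi * i / segments
        points.append(tuple(center[k] + radius * (math.cos(a) * u[k] + math.sin(a) * v[k])
                            for k in range(3)))
    return points

def tube(path, radius, segments=8):
    """One ring per path point; the tangent is estimated from neighbouring points."""
    rings = []
    for i, p in enumerate(path):
        before = path[max(i - 1, 0)]
        after = path[min(i + 1, len(path) - 1)]
        tangent = tuple(b - a for a, b in zip(before, after))
        rings.append(ring(p, tangent, radius, segments))
    return rings

# A made-up bent cable path with a "depth" of 0.25.
path = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 1.0, 0.0), (2.0, 2.0, 1.0)]
rings = tube(path, 0.25)
print(len(rings), len(rings[0]))  # 4 8
```

Choosing each ring's frame independently can make neighbouring rings appear twisted relative to each other. Smoother sweeps carry the frame along the path instead.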

Custom Profile