Concepts

-

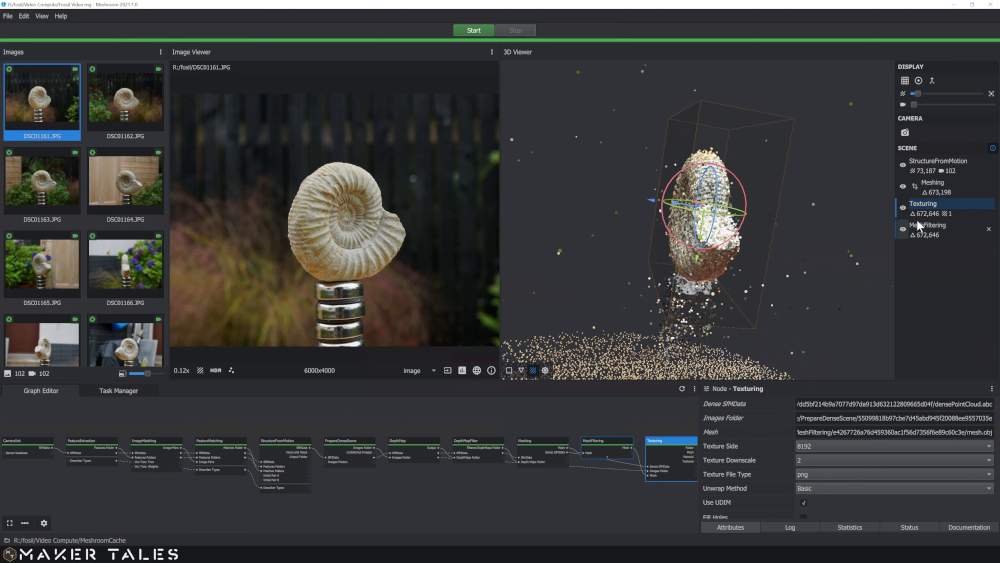

A good video showing the full Meshroom workflow, from photos to low-poly Blender models.

Camera Tips

I liked the thoroughness of this resource.

-

Some miscellaneous settings to consider turning off: auto-rotate, GPS, auto power-off, the automatic sensor-cleaning shake, and the image stabilization switch on the lens. They also say, "turn off AF beep when using live view on Canon," for some reason.

-

Image size. The skull test set that I had good luck with was 4640x2610 (about 2.4 times the width and height of 1920x1080 HD). However, I was able to do a perfectly good reconstruction with the images whittled down to 800x800; this is a quick way to see whether the setup is plausible at all. If it looks OK with small versions, it may be worth trying the full size. Yes, I just checked the Jericho skull test with the 57 images mogrified down to 800x800: it took less than 5 minutes and came out better than it did before! So check a scaled-down version first!

-

Zoom adjusts the angle of view. Keep the zoom the same. I think this basically means don’t turn the zoom ring between shots. The guide I mention above says, "DO NOT ZOOM your lens while shooting the subject. Zoom to one setting and lock it down!"

-

Focus adjusts the focal plane. Use manual focus mode. Focus is different from zoom, and every shot must be in focus. The guide says, "Keeping the same focus and zoom will ensure your photos are consistent. You may need to adjust the focus when you move your camera to different heights. Just make sure you don’t change any of the other settings!"

-

RAW.

-

ISO: 100 or less, but make sure a low ISO is paired with excellent lighting. Low ISO improves the signal-to-noise ratio; high-ISO noise shows up as literally grainy, speckled pixels.

-

High f-number (I’ve seen f/10). This increases the depth of field, keeping all depths in focus. Avoid blurring from any source.

-

Polarizing filters can be helpful to remove glare, but they will reduce the incident light.

-

Low ISO plus a high f-number means a long shutter time, so definitely use a tripod and a remote or timer. For exposures longer than about 1/80 s, a tripod is probably needed.

-

Avoid reflections. See also "cross polarization" if you need to get fancy.

-

Make sure you get physically close shots, close enough for overlap.

-

Blurred images cause serious problems.

-

Not sure about the background. Maybe white? Maybe a complex background would be good? Maybe asphalt or a wooden deck? Probably a background that contrasts with the subject is best.

-

Some subjects are just not good with photogrammetry.

-

Flat walls - add some tape

-

Reflective subjects with glare, such as cars and machine parts

-

-

Very small subjects: 40mm, 50mm, and 60mm lenses are OK for very small items because they’ll allow you to get in very close. A normal 18-35mm lens will have trouble filling the view with a focused subject. Discussed here.
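Following the image-size tip above (check a scaled-down version first), here is a minimal sketch of making the small test copies with ImageMagick’s mogrify while leaving the originals alone. It assumes ImageMagick is installed and that the photos end in .JPG; make_preview_set is a hypothetical helper name, not part of any tool.

```shell
#!/bin/bash
# Hypothetical helper: write scaled-down copies of an image set for a quick trial run.
# Assumes ImageMagick's mogrify is installed and the photos are named *.JPG.
make_preview_set() {   # $1 = source dir, $2 = output dir, $3 = bounding box (default 800x800)
    local src=$1 dst=$2 size=${3:-800x800}
    mkdir -p "$dst"
    # -path sends the resized copies to $dst instead of overwriting the originals.
    # The size is a bounding box that keeps the aspect ratio, so 6000x4000 becomes 800x533.
    mogrify -path "$dst" -resize "$size" "$src"/*.JPG
}

# Usage: make_preview_set ~/photos/skull /tmp/skull-800
```

Run the reconstruction on the small directory first, and only go back to the full-size originals once the small run looks plausible.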

Studio Setup

-

Don’t forget to put a machinist scale or ruler or something with a very well calibrated size in the shot.

-

I tried using giant white sheets of packing styrofoam. They work great for diffusing light, but I felt they had too much texture for the feature detection algorithm to properly ignore. A black background is recommended, though contrast is probably more important. Avoid green screens or other colorful surfaces unless you want the reflected hue lighting your subject. You can buy whiteboard material that is a very smooth and featureless bright white on one side and a reasonably smooth black on the other.

-

Try to arrange the foreground and background to have very different brightness levels.

-

Turntable marks every 4.5° allow 80 shots per revolution (or 40 if you skip every other mark). If you have a long thing, like a shoe, maybe take more photos when looking at the narrowest view so that the software has more to work with.

-

Avoid shooting where backlit windows will be behind subjects.

-

I wonder if flour dusted onto reflective surfaces would work. Others have suggested make-up or coffee dust.

-

Here are some commands to move the photos from the camera to a computer.

gphoto2 -L                 # Aka --list-files.
gphoto2 -P                 # Aka --get-all-files.
chmod 644 IMG_*JPG         # Idiotic DOS file system. Blah.
gphoto2 -r /store_00020001/DCIM/100CANON  # Aka --rmdir, and this seems to be the one to clear.

Or, if you’re doing a lot of this, capture directly to a computer with

gphoto2 --capture-image-and-download

-

Tape up any reflective surfaces whose geometry is very important to you.

-

Take photos of the empty set!!! Before changing the camera position when aiming toward a turntable setup at a new angle, take some reference photos - called a "clean plate" in the jargon.
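The turntable spacing (4.5° marks, 80 shots per revolution) and the gphoto2 capture command above can be combined into a simple shooting loop. This is a sketch under assumptions: gphoto2 can see the camera, the turntable is turned by hand between shots, and shots_per_rev and capture_turn are hypothetical helper names. Files come out named like A_0001.JPG, matching the tripod-height naming used in the clean plate notes below.

```shell
#!/bin/bash
# Hypothetical helpers for a hand-turned turntable; assumes gphoto2 can see the camera.

shots_per_rev() {   # $1 = degrees between marks, $2 = use every Nth mark (default 1)
    # Rounds 360 / (degrees * step) to the nearest whole number of shots.
    awk -v d="$1" -v k="${2:-1}" 'BEGIN { printf "%d\n", 360 / (d * k) + 0.5 }'
}

capture_turn() {    # $1 = tripod-height prefix (A, B, ...), $2 = degrees per mark, $3 = mark step
    local prefix=$1 n i reply
    n=$(shots_per_rev "$2" "${3:-1}")
    for ((i = 1; i <= n; i++)); do
        # Wait until the turntable has been moved to the next position.
        read -r -p "Turn to shot $i of $n, then press Enter " reply
        # --filename names the downloaded file on the computer, e.g. A_0001.JPG.
        gphoto2 --capture-image-and-download --filename "$(printf '%s_%04d.JPG' "$prefix" "$i")"
    done
}

shots_per_rev 4.5     # prints 80
shots_per_rev 4.5 2   # prints 40 (every other mark)
```

A whole ring at one tripod height is then `capture_turn A 4.5`, or `capture_turn A 4.5 2` for the 40-shot version.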

Software

Meshroom

A nice video showing a complete photogrammetry workflow based on Meshroom.

Yay! Meshroom is working for me now! They now have a Linux version that actually works for me. I’ll leave what I wrote about this software below so that we can remind ourselves how much the situation has recently (2021) improved. But this time I had no trouble.

The download and run process was simply

wget -O Meshroom-2021.1.0-linux-cuda10.tar.gz "https://www.fosshub.com/Meshroom.html?dwl=Meshroom-2021.1.0-linux-cuda10.tar.gz"
tar -xvzf Meshroom-2021.1.0-linux-cuda10.tar.gz
cd Meshroom-2021.1.0-av2.4.0-centos7-cuda10.2/   # CentOS looking stuff, safely ignored.
./Meshroom

Pretty much exactly like you’d hope.

Then go to File → Import Images. Select a bunch of photogrammetry-appropriate images. They should load. Then press the green Start button. Sit back with your htop and nvtop and enjoy.

If that worked, great. If not, take a very hard look at how many of the images didn’t get matched. They should have little green marks by the image icons, not red marks signaling that the features couldn’t be matched. The problem is that, by default, it doesn’t try hard enough to make the matches. To bump up this effort, select the ImageMatching node and change its Method to Exhaustive. Note that this will of course take much more time, but having the process actually use your input images does wonders for the results!
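To count unmatched images without squinting at the thumbnails, something like the following may work. It rests on an assumption worth checking against your own Meshroom version: that the StructureFromMotion node leaves a JSON file called cameras.sfm in its MeshroomCache folder, with a "views" array covering every input image and a "poses" array for the cameras it managed to place. sfm_pose_report is a hypothetical helper and needs jq.

```shell
#!/bin/bash
# Hypothetical helper: report how many input images received a camera pose.
# ASSUMPTION: cameras.sfm is JSON with top-level "views" (all inputs) and
# "poses" (reconstructed cameras) arrays. Requires jq.
sfm_pose_report() {   # $1 = path to cameras.sfm
    local sfm=$1 views poses
    views=$(jq '.views | length' "$sfm")
    poses=$(jq '(.poses // []) | length' "$sfm")   # treat a missing "poses" as zero
    echo "$poses of $views images were placed; $((views - poses)) were not matched"
}

# Usage (the hash directory name differs per project and per parameter set):
#   sfm_pose_report MeshroomCache/StructureFromMotion/*/cameras.sfm
```

If the second number is large, that is the cue to switch ImageMatching to Exhaustive and rerun.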

Some tips I discovered that may improve things.

-

Don’t forget to remove any clean plates from the image set if you took them.

-

ImageMatching

-

Method: Exhaustive

-

-

FeatureExtraction

-

Describer Types: sift, dspsift, akaze. Multiples OK. Seems to carry over to other nodes. Need a better understanding of feature matching algorithms? This overview is good.

-

-

SfM

-

Min matches: 250 or higher!!! 250 was helpful for my 800-pixel-wide images, but with my 6000-pixel-wide images I had to bump it up to 750. So maybe it could go even higher and eliminate a lot of editing and background-noise false positives. On a 6k image, there’s no excuse for it not to find 50k matches and pick the strongest 1k.

-

Force lock intrinsics: yes

-

Other check boxes: no

-

Maybe try choosing the start images (the initial pair), though how to optimize this is unclear; perhaps pick a big triangulation angle while still keeping matchable features in both shots.

-

-

Depthmap

-

Downscale: 1

-

-

Mesh Filtering

-

Smoothing Iterations: 8

-

Here’s what I’m trying to do to subtract out the clean plate images (photos taken of the scene without the turntable or subject, basically the stuff I’d like to always be 100% ignored). I have each tripod height set named as A_0042.JPG, then B_0067.JPG, and so on, so this processes the B set. I have a set of 2 or 3 clean plate images per height, named like cleanplateA1.JPG, that I compute the median of.

$ S=B
$ mkdir -p ../clean_only
$ convert cleanplate${S}*JPG -evaluate-sequence median ../median${S}.JPG
$ for F in ${S}_*JPG; do echo "$F"; \
    convert ../median${S}.JPG "$F" -compose MinusDst -composite ../clean_only/"${F%%.JPG}"-clean.JPG; \
  done

And then I edited some files in Gimp and was astonished to find that one of those edited files could be imported, but not a second. It could be any of the files! But the second through Nth would fail (silently!) to load. I hunted this down to some kind of EXIF tag that Gimp seems to add that completely discombobulates Meshroom. The answer (well, an answer) was to copy the EXIF information from the original photos. Something like this.

for F in ../ORIG/*JPG; do
    echo "$F"; B=$(basename "$F")
    exiftool -tagsfromfile "$F" "${B%%.JPG}"*.JPG   # *.JPG skips exiftool's *_original backups on reruns
done

Meshroom Problems

Of course this is far from perfect. First of all, I’m confused about why my Meshroom-2021.1.0-av2.4.0-centos7-cuda10.2 seems to be all compiled binaries.

$ file Meshroom
Meshroom: ELF 64-bit LSB executable, x86-64, .....

Looking at the source on GitHub, it all seems to be Python. How can I dig into the source of what is really going on with the x86-64 executable?

Installing the Python version from source seems impossible. It asks for KDE keyrings and immediately stops on the first dependency (pyside, whatever that is).

The reason I need to do deep troubleshooting is that when I took 118 photos at 6000x4000 pixels and had ImageMagick reduce them to 800x533, the whole pipeline ran just fine. The results were decent. I ran the set at full size and it came out a mess. I then edited out background confounders using Gimp. And… the photos would not load. The program silently ignored my edited photos (they are listed in a warning in the console, but with no explanation). I can find no EXIF tag that seems out of place, and no other hints of what Gimp seems to be doing to corrupt these images.
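One way to hunt for the offending tag is to dump every tag from an original photo and from its Gimp-edited copy and diff the two lists. This is a minimal sketch assuming exiftool is installed (libimage-exiftool-perl on Debian); exif_diff is a hypothetical helper name. File-system entries (name, dates, size) always differ, so they are filtered out.

```shell
#!/bin/bash
# Hypothetical helper: show metadata lines that differ between two image files.
# Requires exiftool.
exif_diff() {   # $1 = original photo, $2 = edited copy
    local a b status
    a=$(mktemp)
    b=$(mktemp)
    # -a keeps duplicate tags, -G1 prefixes each tag with its group, -s prints short tag names.
    # [System] tags (file name, dates, size) always differ, so drop them before comparing.
    exiftool -a -G1 -s "$1" | grep -v '^\[System\]' | sort > "$a"
    exiftool -a -G1 -s "$2" | grep -v '^\[System\]' | sort > "$b"
    diff "$a" "$b"
    status=$?   # 0 = identical, 1 = differences found
    rm -f "$a" "$b"
    return "$status"
}

# Usage: exif_diff ../ORIG/B_0067.JPG B_0067-clean.JPG
```

Lines starting with `<` exist only in the original, lines starting with `>` only in the edited file.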

Here’s a similar bug report with no helpful resolution.

Old notes:

Meshroom - A very slick looking piece of software that has some problems. Like compiling against Nvidia drivers that can’t possibly be present in a normal Debian system. Like not telling you that’s a problem until halfway through. Like telling you your card is not sufficient for the job when your card clearly is. Like telling you this 30min into the job. Like the fact that compiling from source leaves you missing mystery dependencies that the internet has not heard of — WTF is "geogram"? Like everything works great on Windows. Like the Windows version is reported to work on Wine even when the Linux version fails. Like I couldn’t get the Wine version working because I didn’t have an IPv6 route. WTF??

MVE

Multi-View Reconstruction Environment

-

Simon’s web site with excellent related research papers.

Features: a Structure-from-Motion (SfM) algorithm, a Multi-View Stereo implementation, export tools, and surface reconstruction tools. Written in C++, "…suitable for reconstruction amateurs."

This package is excellently composed. No stupid CMake or dependency dipshittery. This software just does the math. Nice. Doesn’t seem to use the GPU. Good! I’m tired of Nvidia’s compatibility/proprietary nonsense.

The User’s Guide is sensible and complete.

You may need this if you want to run the GUI.

apt install qt5-default

I found the GUI helpful for a quick look at the results, but I think it would not be hard to skip the GUI entirely. This approach is totally scriptable.

Here’s the workflow - seems quite sensible and can be easily wrapped up into a script.

$ S=/tmp/MySceneDirectory
$ makescene/makescene -i <image-dir> $S               # Prepare a "scene" from an image set
$ sfmrecon/sfmrecon $S                                # Structure From Motion Reconstruction
$ dmrecon/dmrecon -s2 $S                              # Multi-View Stereo computes Depth-Map Reconstruction
$ scene2pset/scene2pset -F2 $S $S/pset-L2.ply         # Union of the scene's depth maps converted to a point cloud
$ fssrecon/fssrecon $S/pset-L2.ply $S/surface-L2.ply  # Floating Scale Surface Reconstruction
$ meshclean/meshclean -t10 $S/surface-L2.ply $S/surface-L2-clean.ply  # Cull doubles, strays, etc.

We are advised to try out other off-piste components like Poisson Surface Mesh Reconstruction, pictures here.

Here’s a full script.

#!/bin/bash
set -e  # Stop at the first stage that fails.

# Scene directory.
S=/tmp/photo3d/
mkdir -p "$S"
# Supplied directory where images are. Default could be a symlink.
IMGIN=${1:-${S}current}
# Timestamp. Because this may take a few attempts.
TS=$(date +%Y%m%d-%H%M%S)
# Basename to call this scene.
NAME=$(basename "${IMGIN}")-scene-${TS}
S=${S}${NAME}
# The log sits beside the scene, because $S itself only exists once makescene has run.
LOG=${S}.log
MVEPATH=/home/xed/src/mve/
MVEAP=${MVEPATH}apps

# Print a separator, log a timestamp and the command line, then run the command.
run() {
    echo "-----------------------------------"
    date +%Y%m%d-%H%M%S >> "$LOG"
    echo "$@" >> "$LOG"
    "$@"
}

# Prepare a "scene" from an image set.
run "${MVEAP}/makescene/makescene" -i "$IMGIN" "$S"
# Structure From Motion Reconstruction.
run "${MVEAP}/sfmrecon/sfmrecon" "$S"
# Multi-View Stereo computes Depth-Map Reconstruction.
run "${MVEAP}/dmrecon/dmrecon" -s2 "$S"
# Union of the scene's depth maps converted to a point cloud.
run "${MVEAP}/scene2pset/scene2pset" -F2 "$S" "$S/pset-L2.ply"
# Floating Scale Surface Reconstruction.
run "${MVEAP}/fssrecon/fssrecon" "$S/pset-L2.ply" "$S/surface-L2.ply"
# Cull doubles, strays, etc.
run "${MVEAP}/meshclean/meshclean" -t10 "$S/surface-L2.ply" "$S/surface-L2-clean.ply"

echo "Try viewing with:"
echo "meshlab $S/surface-L2-clean.ply"

Bundler

This may be useful as an alternate component to replace MVE’s sfmrecon if a different approach is needed. Although, now that I look at the source code of MVE’s sfmrecon, it uses the name "bundler" a lot. So maybe this is already being done, or maybe it’s just very compatible or similar.

Might need some Nearest Neighbor magic.

apt install libann-dev

If you have the Ceres library, go ahead and enable it in src/Makefile.

USE_CERES=true

Other than that, it is properly organized, with only relevant, easy-to-install technical dependencies.

The library it complains about, libann_char, is built and put in its own ./lib directory. You might need to run with something like this.

LD_LIBRARY_PATH=./lib ./bin/bundler

OpenMVG

openMVG - No installation instructions, but none needed - compiled without drama!

git clone https://github.com/openMVG/openMVG
cd openMVG/src
mkdir build && cd build
git submodule update -i
cmake ..
make
python software/SfM/SfM_SequentialPipeline.py /tmp/imagedir/ /tmp/mvgresults
apt install meshlab
meshlab /tmp/mvgresults/reconstruction_sequential/cloud_and_poses.ply

Then export to OBJ and import that cloud file into Blender.

This all worked great, but it stops short of trying to put together a mesh from the vertices. So you’ll have to fill in the edges yourself, or use the data to support some direct geometry creation by hand or with other software. It turns out that MVE can import data from OpenMVG using MVE’s makescene program.

Regard3d

Has an especially cogent description of the concept of photogrammetry and how this software executes that.

Says it requires Windows or Mac, but also elsewhere mentions Linux.

Unpacks the 7z archive into the current directory, though it’s a small mess; look for src and version.txt if you need to clean it up.

Seems to mention OpenMVG - maybe uses it as an internal component.

Here were dependencies I needed.

apt install libopenscenegraph-3.4-dev libmetis-dev libvlfeat-dev

Ah, it’s a no go for Linux: I got to the part where it flailed looking for vague OpenMVG headers that find could not find in the (working) OpenMVG source.

MicMac

Stands for Methodes Automatiques de Correlation, nothing to do with Macs.

Here’s its website.

I had a bitch of a time getting it compiled which I documented sufficiently here.

The fact that the issue was an obvious flagrant programming bug (but one that was not easy to properly sort out - easy to kludge) and 3 years old but not fixed is not a good sign for this software.

After wasting way too much time on that I finally got a running executable. I tried to run https://micmac.ensg.eu/index.php/Gravillons_tutorial [the basic default tutorial].

And… it died immediately with a runtime error.

$ ../mm3d Tapioca All ".*.JPG" 1500
EXPR=[^(.*)bin/([0-9]|[a-z]|[A-Z]|_)+$]
To Match=[/tmp/micmac/build/src/CBinaires/mm3d]
------------------------------------------------------------
| Sorry, the following FATAL ERROR happened
|
| No Match in expr
|
------------------------------------------------------------
-------------------------------------------------------------
| (Elise's) LOCATION :
|
| Error was detected
| at line : 363
| of file : /home/xed/t/micmac/src/util/string_dyn.cpp
-------------------------------------------------------------
Bye (press enter)

This tutorial never tells us what the options mean. I hunted down what that number meant, but then forgot it and decided I wasn’t going to hunt it down again. The option format was designed by people who do not know or appreciate proper Unix programs. The whole thing was a massive baroque mess.

Not ready for prime time and doesn’t seem on pace to be.

COLMAP

COLMAP - Prebuilt binaries for Windows and Mac… Ahem. Trying to compile I get the absurd "…gcc versions later than 7 are not supported!"

Blender Addon

Here’s a Blender add-on that nicely imports various photogrammetry files.

While not exactly doing full photogrammetry per se, Blender has some astonishing abilities that are very similar. It seems a lot of this is based on solving camera motion from features tracked in video. This allows Blender to reconstruct a 3D environment in which the camera’s movement makes sense. This is done by the "camera solver," which should sound familiar given this topic. Check out my Blender notes.

Meshlab

Useful for playing with point clouds and converting them to something Blendable.

apt install meshlab

Data

-

A big list of image sets possibly suitable for photogrammetry.

-

Some photos of a skull can be found here

-

Colmap has some datasets of buildings.

-

OpenMVG has some data too. Buildings.

-

MVE has some data too.