Back in March I wrote the following.

"I don’t like to say too much about my accomplishments before I accomplish them so if I manage to get through this program I’ll have much more to say about it."

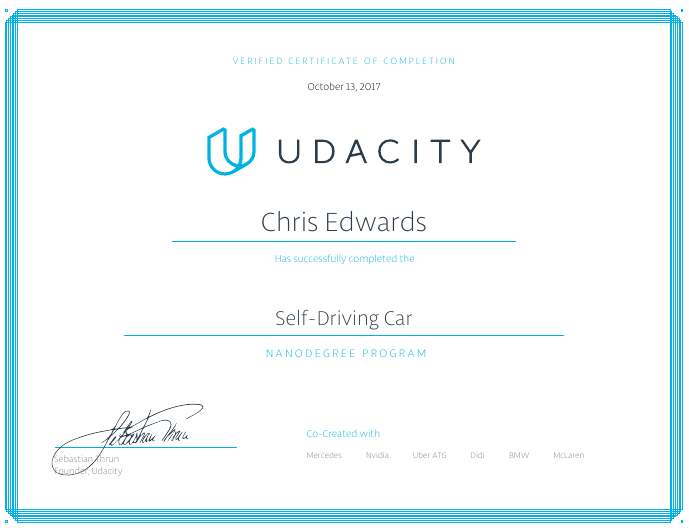

True to my word, that time has come. I have completed the third and final term of the Udacity Self-Driving Car Engineer Nanodegree course. I started in mid-February with (I’m told) the fifth cohort. I worked quickly, skipped ahead, and have now finished alongside the first cohort, the first people to complete this training. Here is a brief overview of each of the three-month terms.

Term 1 focused on two major topics that were both very valuable to me: computer vision and machine learning. Those are not just big topics, they are huge. They necessarily go together for autonomous car purposes, and getting all of the necessary knowledge in place was no easy educational challenge. In fact, the big problem with this whole course is that learning about computer vision or machine learning usually requires graduate-level specialization at a university. Applying this to autonomous vehicles usually requires graduate-level specialization at one of a handful of elite technical universities. Getting some condensed, straight information about how to apply these difficult topics to autonomous vehicles in practice was extremely helpful to me. Here’s an earlier write up of an impressive project from Term 1.

Term 2 focused on what I call "rocket science". This included sensor fusion and all flavors of Kalman filtering. That stuff is just straight up 1960s NASA slide rule rocket science. Udacity founder Sebastian Thrun is a co-author of Probabilistic Robotics, so there was quite a bit on probabilistic motion. Basically more rocket science. There was also some control theory, which was a lot more practical than my university exposure to it (but somewhat less practical than my machine shop exposure to it). This was also the term where C++ was emphasized. On top of the heavy topics, this could have been an additional stumbling block, but it was pretty easy for me. My contra-Java obsession with C++ finally paid me back a tiny bit; maybe one day it will pay me back in real money.
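To give a flavor of what Kalman filtering boils down to, here is a minimal one-dimensional sketch in Python. It is only an illustration, not course material: the function name `kalman_1d`, its parameters, and the sample numbers are all made up for this example, and it assumes the simplest possible motion model (the quantity being measured does not move).

```python
def kalman_1d(measurements, meas_var, process_var=0.0, x0=0.0, p0=1000.0):
    """Estimate a (nearly) constant scalar from noisy measurements.

    All names here are hypothetical, chosen for illustration.
    x is the state estimate, p is the variance (uncertainty) of that estimate.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the model says "nothing moves", so the estimate stays
        # where it is and only the uncertainty grows by the process noise.
        p = p + process_var

        # Update: blend prediction and measurement. The gain k is near 1
        # when the prediction is uncertain and near 0 when it is trusted.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p

        estimates.append(x)
    return estimates, p
```

Calling `kalman_1d([5.1, 4.9, 5.0, 5.2, 4.8], meas_var=0.1)` returns the running estimates along with the final variance. The estimates settle close to 5 and the variance shrinks with each measurement. The real thing adds vectors, matrices, and a proper motion model, but the predict and update steps keep this same shape.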

Term 3 seemed to have a slightly different style. It was less about hardcore fundamentals and more about topics that one needs to know about. There was some pretty detailed material showing how one could do path planning, for example. I found that interesting but didn’t feel like that’s how I would do it. It’s not how I did it with my SCR entry, which was notable for its quality racing lines. There were two interesting "elective" topics (only one was required). The first was functional safety, which is basically the paperwork guff that the automotive industry feels is most helpful in baking safety into the design process. I’m not knocking it. As with the ISO 9000 guff that was popular when I was a factory engineer, I am horrified by this stuff while simultaneously admiring its reasonableness. I went ahead and looked at all of the lessons for this just to get familiar with it (my notes on it, for example) but ultimately I chose to do the other elective project. That project was "semantic segmentation". Learning about this has pretty well convinced me that this scheme will be the heart of my planned self-driving vehicle. It’s pretty amazing. I’ll probably write more about it separately.

Finally, at the end of Term 3, there was the grand group project. The theme here was "system integration". This was envisioned to be the showcase attraction of the whole course: actually programming a real self-driving car. I led a group consisting of two guys in Germany, one in RSA, and one up the road in LA. Luckily everyone on my team was high quality and we were able to work well together. I even took the opportunity to become an expert Git user despite my traditional misgivings. A lot of the difficulty in this project involved the mundane ordeal of getting a setup that worked. For example, ROS, the Robot Operating System, was not impressing me with efficient minimalism. Many people were forced to compound all of that by using VMs on their Macs to run the necessary Linux, which in turn ran the necessary ROS. Although I didn’t have that problem, there were strange issues with the Unity game engine simulator which caused all kinds of mischief. Anyway, in the end we were able to get our simulated ROS-controlled car to drive around in the simulator and properly recognize (and stop for) stoplights in the camera feed using a machine learning classifier. Yay! You can see videos of the project if you’re interested.

The performance of the real car, however, was less inspiring. Udacity had a lot of early development problems getting the car ready for action. They also had to work out complex scheduling. From our point of view, we barely understood what the car was even supposed to do because its mission in real life bore no resemblance to the virtual simulated mission. This was especially painful to me because my main autonomous vehicle area of interest is simulator calibration (for example and here’s another example). There were also issues involving different libraries being available in the simulator VM and on the car. When we got our two minutes of drive time, the car basically did its lap around a small parking lot and ignored the fake stoplight prop. In our defense, they had changed the camera quite a bit from the material we had been given to train with (nope, the dashboard never moved with respect to the camera), and the whole operation was done in the dark, half an hour after sunset. Of course we had no idea that was a possibility and no way to train to spot this particular fake stoplight in the dark. I think the real car testing was an ambitious idea that came with some unavoidable extra difficulties for us as the first students to try it out. I’m confident that they will refine the course and it will continue to improve.

Although this course was advertised as requiring approximately 10 hours per week, it was later acknowledged that this was rather optimistic. I spent at least double that. Considering that and the $2400 price tag, this was not a casual learning experience. I actually felt more engaged in this course than I did in my engineering degree at a traditional university. I would even say that this course featured more challenging material. I felt like the lessons were really well designed and presented. For me, online education is so superior to traditional live lecture performances that it’s hard for me to imagine how the latter still exist. (And I work for a charismatic university professor!)

I’m very happy with my decision to take this course. I learned things which I wanted to learn and things I didn’t know that I didn’t know, things that are indeed important to my goals. I feel like if I talked to anyone building an autonomous vehicle right now, I would never be completely in the dark about what they were talking about. I feel like I understand the state of the art in this field better, and I better understand some people’s AI optimism even if I still do not share it. I even feel like I can immediately apply many of the course’s lessons to my day job; machine learning and vision are useful in many fields. Overall I have been delighted with this experience.