When I watch an uninspiring movie (like you may have just done!), I often find that a lot can be salvaged if it comes packaged with bonus features that explore how the thing was made. On this page I have shared details of our project’s creation for people who find such things interesting, mainly my future self, who I know will!

Modeling And Assets

I get the feeling that smart computer graphics artists, or at least those on a deadline, try to steal as much as they can. They do not want to create their own assets ab initio. We, however (mostly because of me), tended to go full control freak and synthesize things from scratch. Here’s a breakdown of the assets we used and where they came from.

External

- I want to single out the skeleton model we used as especially excellent. Had E not found it, we might have been dissuaded from the whole concept. Although its "profile picture" (shown below) was not very impressive, our prototypes turned out so spectacular where the skeleton was concerned that we knew we had a winner.

- The hand model (what we called "skin", for reasons related to our project) was taken from a very generic human model. It was not very good, and a lot of the problems I had animating it came down to the model itself just not being right (fingers too slender on a big hand, etc.). I ended up heavily re-sculpting it.

- I found the flower on a free asset site, and I wish I hadn’t. It had terrible mesh geometry that we groused about the whole time, and I had to clean it up extensively. The whole idea for the petal drop came from a petal not being properly attached to the model in the first place! I did all the rigging, which was very simple, and E completely redid all the texturing, which was not.

- The ball, the scene box, and the camera were all provided in the contest kit. We didn’t use any of the animations or textures from the contest kit.

- There was also the entire LBS (Lightning Boy Studio) shading system, which we purchased specifically for this project and which is covered in more detail below.

Internal

- Art frame and graphics - E created a decorated frame that showed off an excellent texture. This is just the artwork without that render texture.

I felt the texture was so good that the frame deserved some serious investment. I like the purity and versatility of vectors, so I composed all of the frame artwork in Inkscape as SVG. I got design ideas by looking at tons and tons of heraldry. My hope was that the elements would have a somewhat antique feel and a coherent look.

(As you can see, the sixth image down in the first column is the coat of arms of Sir Linux Nerdalot.) The graphic work is not perfect, but I’m pretty happy with how it came out. During our last couple of renders, we realized that we were seeing pixel artifacts from the artwork masks. Thanks to the miracle of SVG, we simply re-exported our masks at 16384 pixels wide instead of 2048, and the quality went up dramatically. SVG - the way to go!

- Hourglass - I modeled the hourglass using the reference painting. Nothing complicated about that until E started texturing it. I think the resulting transparency textures look fantastic. E was able to rig it so that the sand actually drops, which I think is pretty cool. It works well with its texture and really looks like the sand is flowing.

- Flower vase - I modeled the vase using the reference painting. E also rigged it so that the water level goes down. Again, transparency textures are not easy, and E did a great job with them.

I rigged the tulip to wilt.

- Meat - Amusingly, we had an entire model for what was between the skin and the bone. I kind of think we should have carved out more screen time for it, but the way we have it works. E created the first meat model, but I redid most of it because I wanted it to behave better while animating. I made heavy improvements to the skin model too. It turns out we didn’t really have the meat visible while the hand was active. But we could actually do some pretty cool stuff with that setup!

- Slab - Seems easy, and that’s what I thought at first. But because the camera angles are all preset (and badly for our scene), I had to keep making adjustments to it. Then there’s the small matter of the ball needing to exit at the corner, so I had to break off a chunk. I’m happy with how it came out. E did a nice job of texturing it.

- Sand - We had some more complex ideas at first, but I realized we could just cheat. I started with a cone and did some proportional edit mode poking here and there to make the base look kind of natural. Flowing sand doesn’t really assume weird shapes anyway; it piles up in a cone! The animation is all done with clever keyframes, notably changing the scale along various axes (Z to grow the pile, X to funnel it, etc.). I think that animation was one of my better tricks.

Rigging And Animating

When I started animating this, it looked terrible, and I was worried that I wouldn’t know how to spot and fix the problems. That led me to a crazy thought: why not just build a real one and film some hand action in it? And that’s exactly what I did!

It turns out that real hands doing this real thing are very boring. Even if I had worn a mocap glove and captured the motion accurately, it would have come out looking dull. I actually had to deviate from reality a bit to liven things up! Still, seeing those clips was very helpful in getting the animation decent.

The hand animation in the final video is not great, but for my first time doing it, it’s OK. I made a lot of progress very quickly. Here I’m experimenting with rough animation of the first rig, which E created from scratch. It kind of works, but I could see it was hard to work with. You can tell this is very early work because we haven’t even deleted the message that came with the contest kit.

Here some bad animation is being flagged. Note the broken wrist.

It turns out that your hand can almost do that, but it is not the natural thing to do. This pose was produced by the inverse kinematics solver: mathematically valid, but it didn’t quite agree with human physiology best practices.

And here you can see that I mostly fixed it.
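Why can an IK solver hand you a broken wrist that is still "mathematically valid"? Even the simplest chain usually has more than one exact answer. The sketch below is a generic planar two-bone solver, not Blender's IK code, and the names `two_bone_ik` and `tip` are hypothetical. It assumes bones of length `l1` and `l2` rooted at the origin and returns both joint configurations that put the tip on the target. Both are exact, and nothing in the math prefers the anatomically plausible one; in a rig, that choice has to come from extra constraints such as pole targets or joint limits.

```python
import math


def two_bone_ik(l1, l2, tx, ty):
    """Return both (root_angle, joint_angle) pairs, in radians, that place the
    tip of a planar two-bone chain rooted at the origin on the target (tx, ty).

    The joint angle is the bend of bone 2 relative to bone 1; its sign decides
    which way the joint folds.
    """
    d = math.hypot(tx, ty)
    if d > l1 + l2 or d < abs(l1 - l2):
        raise ValueError("target is out of reach")
    # Law of cosines gives the size of the bend at the middle joint.
    cos_joint = (d * d - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    cos_joint = max(-1.0, min(1.0, cos_joint))  # guard against rounding error
    bend = math.acos(cos_joint)
    solutions = []
    for joint in (bend, -bend):  # two mirror-image answers, both exact
        # Aim at the target, then back off by the angle that bone 2 adds.
        root = math.atan2(ty, tx) - math.atan2(
            l2 * math.sin(joint), l1 + l2 * math.cos(joint)
        )
        solutions.append((root, joint))
    return solutions


def tip(l1, l2, root, joint):
    """Forward kinematics: where the tip of the chain ends up."""
    x = l1 * math.cos(root) + l2 * math.cos(root + joint)
    y = l1 * math.sin(root) + l2 * math.sin(root + joint)
    return x, y


if __name__ == "__main__":
    for root, joint in two_bone_ik(1.0, 1.0, 1.0, 1.0):
        print(round(math.degrees(root)), round(math.degrees(joint)))
```

For two unit bones reaching for (1, 1), this prints `0 90` and then `90 -90`: the same target, reached with the joint bent in opposite directions.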

Rigify

After using E’s initial rig for a while, I thought it would be worth seeing if I could redo it. E seems to be better at building rigs from scratch, but I knew about the official Blender add-on called Rigify, which automatically generates high-quality rigs for some common cases (human/biped, horse, dog, etc.). One amusing aspect is that you really need to start with a whole-body rig and delete the unused parts later, which is what I did.

Here I’m checking the new rig against the old one to make sure the new one is size matched pretty closely.

And here you can see more of that test showing how the old one (Smurf blue) had some very incorrectly sized arm bones that actually caused quite a bit of trouble getting proper poses. The new (pink) model works much more smoothly.

The controls on the Rigify rig were definitely an improvement over our first attempt. Still, I have to give E credit for making a functioning "hand-crafted" inverse kinematics hand rig from scratch.

Rigid Body Physics

One of the important judging considerations was: does the ball follow the laws of gravity? Well, in our scene, yup. Pretty much. Though we kind of opted out of relying on the physics system too much. I have to say that getting this right was one of the biggest pains in the whole contest, and I’m confident it wasn’t just me. The contest template file had the physics simulation set to be active between frames 1 and 250 (let’s not even think about the fact that the contest stated that the frames would be zero through 449). The problem is that the ball has to drop in around frame 113 and leave around frame 330, well past the end of that range.

So when you split things up like we did, and the physics works correctly for the drop in but not for the drop out, I want to scream! That was a very rotten trick. The baked animation stuff also kept causing me grief: every time we pulled an update from our repo, these settings (and no others!) were lost. Super annoying. You also have to scrub through some vague (to me, anyway) range of frames to get the physics to recalculate and sort out its math.

Another fun thing is the "Animated" property. You might, like I did, think that means you want the physics system to take over and animate the ball using gravity physics. Nope. The opposite. When "Animated" is checked, you are free to animate. Great. But follow me a bit deeper down this rabbit hole: to switch the ball between my keyframes and the physics, I have to put a keyframe on that Animated property. Yes, that’s right, I am animating the Animated checkbox. Let that sink in, Inception fans.

Hey, we had a lot going on, and fighting with the physics every step of the way was getting tedious. In the end, since physics only applied for maybe 30 frames, I simply hand animated the ball to match proper gravity physics and then turned off that whole mess.
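Hand animating a ball "to match proper gravity physics" comes down to the free-fall formula z(t) = z0 - ½gt², sampled once per frame. Here is a minimal sketch of that calculation. It assumes 30 fps (450 frames, 0 through 449, over the 15 s clip), g = 9.81 m/s² with the scene modeled in meters (Blender's default unit), and a ball released from rest; `free_fall_keys` and the 1 m drop height are hypothetical, not taken from our scene.

```python
G = 9.81  # m/s^2; assumes the scene is modeled in meters
FPS = 30  # assumption: 450 frames (0-449) spread over a 15 s clip


def free_fall_keys(start_frame, start_height, floor_height, fps=FPS, g=G):
    """Return one (frame, z) pair per frame for a ball dropped from rest at
    start_frame, stopping at the first frame where it reaches floor_height.
    That last key is clamped to the floor so the ball never sinks into it."""
    keys = []
    frame = start_frame
    while True:
        t = (frame - start_frame) / fps      # seconds since release
        z = start_height - 0.5 * g * t * t   # z(t) = z0 - g t^2 / 2
        if z <= floor_height:
            keys.append((frame, floor_height))
            return keys
        keys.append((frame, z))
        frame += 1


if __name__ == "__main__":
    # Hypothetical drop: released 1 m above the slab at frame 113.
    for frame, z in free_fall_keys(113, 1.0, 0.0):
        print(frame, round(z, 3))
```

With these numbers the ball reaches the slab 14 frames after release (just under half a second). In Blender, each pair could then be written onto the ball with something like `ball.location.z = z` followed by `ball.keyframe_insert(data_path="location", index=2, frame=frame)`.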

In theory I could have added some unnatural lateral force, or given the bone shapes convex hull colliders, or something. Instead, I cheated and just added this fun little wedge right under the exiting ball, so the ball would fall onto it and roll to the exit like a perfect golf putt.

This shot is really showing off the shader work, but that little wedge was there just to nudge the physics ball along. Ultimately, when I went with static baked physics, it wasn’t needed anymore. But I’m showing it to prove that we definitely did use the rigid body gravity physics! Besides, it’s fun to see all the weird tricks we used.

I totally can see doing cool things with the rigid body physics system but when you have a lot going on, it’s hard to integrate easily. I imagine Blender will be improving the physics usability soon (ahem).

Then there were the contest rules to fuss over. Would you say this ball has "exited"? Tough call. It’s definitely off camera, because the camera position at this frame cuts off the angle.

Oh well. We can only do what we can do. But a lot of energy went into stressing over dumb things like what exactly the contest rules meant by "exit".

Decay Effect

I was really happy with how well we worked as a team. As noted, E made a prototype armature for the hand that I replaced with a better one. With the decay effect, the roles were reversed. This is my testing prototype of the decay animation.

Note that it swells up before an animated boolean difference eats through the skin. (Did you know bodies swell up? Oh yes, I researched it!)

E didn’t use this system at all and instead created a much better one. To be honest, I don’t even know how the new system works. But it looks great! Here’s a prototype of the new system being tested.

Here’s a test shot of another strategy E tried which used Blender’s rock generator feature.

The mesh actually looks quite good, but apparently this approach conflicted more seriously with the rendering look we had in mind.

Texturing And Lighting

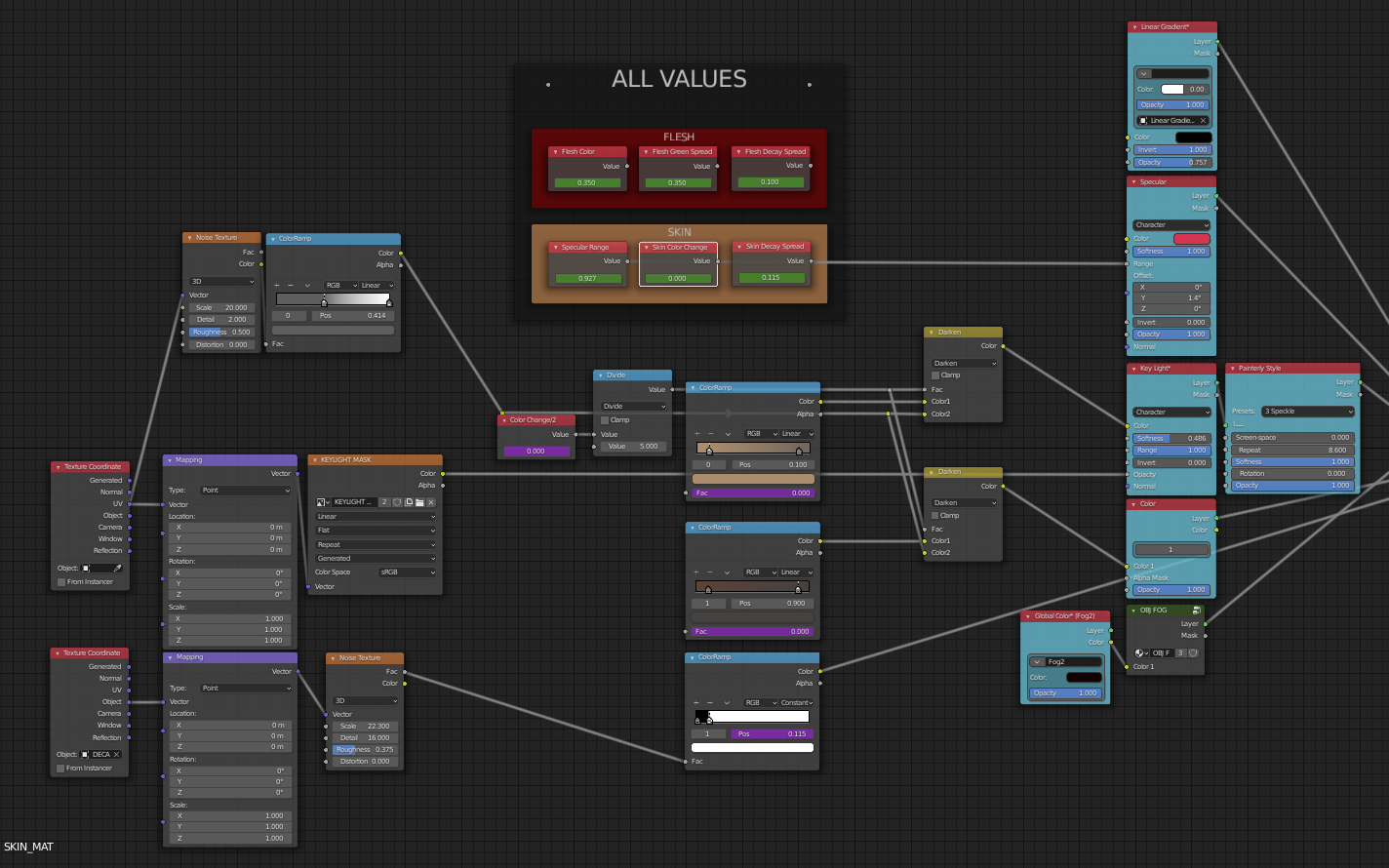

E did all the texturing and all the lighting. I don’t think the lighting was too involved, just simple static lights. But the texturing was certainly a very serious undertaking! This is some of the crazy texture node setup that E created to get this texture effect. Pretty impressive.

Several render passes over different scenes were composited together.

Really, it was quite a feat! I’m just happy we were able to avoid the Cycles rendering engine entirely, because it can really slow development down if you don’t plan rendering jobs very carefully.

Lightning Boy Studio Shader

From the beginning of the project, E had been interested in using fancy rendering techniques that make the resulting artwork look less computer generated and more hand drawn. Sometimes this is referred to as "toon shading". No, it’s not perfect, but it is an avant-garde direction: rendering with as much fidelity to a human-drawn style as possible. This is really the next frontier beyond simply "realistic". I think it’s fun that the 17th-century Dutch painters who inspired our theme were doing everything they could with traditional brush painting to get a result that looked computer generated, while we were doing everything we could to use computers to get a brush-painted look.

It was suggested that we consider buying a process someone had already partly worked out. So we bought the Lightning Boy Studio (LBS) Shader for $30. It is produced by some Canadian animators who are trying to recover some of their obviously heavy investment in their hand-drawn-looking animation work. I must say that I was skeptical, but when we got it, I was immediately able to do some very cool things. Some amazing stuff. Totally worth it.

Here are some tests I did with LBS.

That’s actually showing some bad weight painting causing bad deformation of the middle finger.

As you can see, this is a very neat trick Blender can do.

E also got some great results right away and took over that part of the work.

With the decay effect combined with the render, it’s starting to come together!

I like this preview showing the effect applied to test objects, including Blender’s obligatory default test monkey.

Here is a test video rendered on the 24th.

Here is a test video rendered later on the 24th.

(If your browser doesn’t like the codec we used for testing, just run `mplayer <paste those mp4 link URLs>` from the command line.)

Here’s a fun problem we ran into: we would render the same .blend files and our results would be different.

We immediately thought the files must somehow not be the same, but md5 hashes showed they were identical. Hmm. Pro Tip: Coordinate your Blender version at the start of the project! It turns out that I was using a manually installed long-term support (LTS) version while E was using Steam to install Blender. Steam, of course, ships the bleeding-edge latest and greatest. (The Debian stock one is a joke; I had to abandon it when it was still stuck on a pre-2.8 version that might as well be a different program.)
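Checking that two copies of a .blend file really are byte-for-byte identical is what `md5sum` does on the command line. A minimal Python equivalent is sketched below; `md5_of` and `all_identical` are hypothetical helper names. Identical digests rule out the input files, which points the finger at the software, so it is worth comparing the output of `blender --version` on each machine as well. MD5 is fine for spotting accidental differences like this, though it should not be relied on against deliberate tampering.

```python
import hashlib
from pathlib import Path


def md5_of(path, chunk_size=1 << 20):
    """Hex MD5 digest of a file, read in 1 MiB chunks so that large .blend
    files don't have to fit in memory at once."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def all_identical(paths):
    """True if every file in `paths` has the same MD5 digest
    (vacuously True for an empty list)."""
    digests = {md5_of(Path(p)) for p in paths}
    return len(digests) <= 1


if __name__ == "__main__":
    import sys
    for p in sys.argv[1:]:
        print(md5_of(p), p)
    print("identical" if all_identical(sys.argv[1:]) else "DIFFERENT")
```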

Ball Reflection

If you look at the ball in all the preview renders, you’ll see that it never actually reflects its surroundings. It turns out that Blender has multiple rendering engines, and we were using the one that is fast (Eevee: 33 minutes to render the whole 15 s clip, about a billion pixels) but doesn’t support fancy reflections or panoramic cameras. The LBS shader system we used also complicated the reflection issues.
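The throughput figures above hang together with a little arithmetic. This sketch takes the frame count from the contest's frames 0 through 449 and assumes 1920×1080 output, which isn't stated on this page.

```python
FRAMES = 450                 # frames 0 through 449, per the contest rules
CLIP_SECONDS = 15
RENDER_MINUTES = 33          # whole-clip render time with the fast engine
WIDTH, HEIGHT = 1920, 1080   # assumption: 1080p output

fps = FRAMES / CLIP_SECONDS                        # frames per second of footage
seconds_per_frame = RENDER_MINUTES * 60 / FRAMES   # average render time per frame
total_pixels = FRAMES * WIDTH * HEIGHT             # pixels rendered for the clip

print(f"{fps:.0f} fps, {seconds_per_frame:.1f} s/frame, {total_pixels:,} pixels")
```

This prints `30 fps, 4.4 s/frame, 933,120,000 pixels`, consistent with "about a billion pixels" if the output really was 1080p.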

With a day to go, E was able to somehow get a spherically distorted render from the perspective of the ball and map it onto the ball. Here’s what that looks like; you can see it makes a huge difference.

This shows the difference between this technique and what a lot of people tend to do, which is map a generic outdoor scene onto the ball. Once you know to watch for it in the renders, you’ll see it.

Here’s what it looks like when something goes wrong and that layer goes missing. Reminds me of the Loc-Nar.
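We don't know exactly how E built the ball's reflection, so this is only the generic environment-mapping idea behind both approaches. For each point on the ball, mirror the view direction about the surface normal (r = d - 2(d·n)n) and use the resulting direction to look up a panoramic image. With a stock outdoor panorama you get the generic look; with a panorama rendered from the ball's own position, the reflection shows the actual scene. The sketch assumes a latitude/longitude (equirectangular) image with +Z up. `reflect` and `equirect_uv` are hypothetical names, and a mirror-ball style spherical image would need a different lookup.

```python
import math


def reflect(d, n):
    """Mirror direction d about the unit surface normal n: r = d - 2(d.n)n."""
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))


def equirect_uv(r):
    """Map a direction to (u, v) in [0, 1] on a latitude/longitude image,
    assuming +Z is up: u follows the azimuth around Z, v the elevation."""
    x, y, z = r
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    u = 0.5 + math.atan2(y, x) / (2.0 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, z))) / math.pi
    return u, v


if __name__ == "__main__":
    # Camera looking along +Y at the side of the ball that faces it.
    view = (0.0, 1.0, 0.0)
    normal = (0.0, -1.0, 0.0)
    r = reflect(view, normal)
    print(r, equirect_uv(r))
```

Here the reflected direction is (0.0, -1.0, 0.0), which lands at (0.25, 0.5) in the panorama: the spot on the ball facing the camera shows whatever is behind the camera.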

Sound Design

The contest guidelines said, "Sound design is highly encouraged." I am no expert at sound design, I’m still half deaf in my right ear, and that official guidance is pretty vague! For one thing, I am getting the feeling that they will put music over the whole thing. In my head I’m envisioning Yakety Sax or electronica circus music, basically the worst choice possible for our scene. And then there’s the obvious contention with the previous and next entries; I’m envisioning a car alarm theme and a first-person shooter theme surrounding our entry. What I’m saying is that I’m not optimistic that our subtle, austere scene will get the context it needs for sound. That, however, is the contest’s problem. I thought it would be fun to try to do a good job with the sounds, and it was!

Let’s start at the public library, where they incredibly let you just walk out with their stuff! I was looking through their CDs and found some sound effects CDs, which I proceeded to walk out with. Here is a very good pro tip: when listening to such a collection, do not read the titles! Try to write down what you think each one sounds like. That brings us to the first sound, the sand.

- Sand - I had actually tried to record some oatmeal being poured, but it came out sounding too sharp. But on the effects disc I heard the perfect sound of sand pouring out! I could also imagine it being very, very boring skiing. I was pretty surprised to read the title and find out what my perfect sound effect for sand was. You can listen to the clip and try to guess what it is.

The small sand dumping sound was the same effect but sped up a bit and given generous fades.

- Ball catch - No magic here. I have a 0.750" steel ball bearing, which I dropped into my hand and recorded. I also tried the ball from my trackball, which I think is the one I used in the end.

- Bell - Though dangerously cliché, this was harder to find than I thought. Eventually I found one I liked, and the only post-processing was to quiet it down a bit. These kinds of bells ring a long time, longer than our whole scene!

- Thud - This was the most frustrating effect. It’s frustrating because it sounds kind of wrong, and yet it is the exact sound a hand makes falling limp like that. I know because I recorded my own hand doing just that. I probably should have used the concrete slab again, since I think it’s the table reverb that sounds so wrong. Again, thinking smartly about a sound is different from hearing it smartly.

- Ball roll - I recorded my steel ball dropping and rolling on the concrete paver I use as a foundation for our 3D printer. That worked great. I kind of wish it rolled for longer than 0.4 seconds.

- Wind - Apparently that Big Video Website has a lot of videos of ambient sounds for people to sleep to. The winter storm video I used was 10 hours long! After cutting it down to size, I found that slowing it 75% really made it work. The final puff at the end is a layered clip of it at full speed with generous fades.

Hidden Quirks

Here’s a fun hidden flaw: it turns out that the shadow on the hand ignored the skin and meat layers, so the skeleton shows up in the shadow.

We thought it was kind of cool, and since we only caught it in the final versions, we figured it was very subtle.

The other very, very subtle flaw you can look for involves the shape key that withers the flower: the falling petal withers well after the main flower.

This happened because we moved that animation around a bit to find the right timing. We caught it only after rendering the final version that we submitted, and didn’t think it was worth messing with. Instead, it can be a feature for people who have read this far to appreciate the attention to detail that goes into a project like this.

Tools

The tools we used to create this project were open source; the only thing not freely licensed was our LBS shader setup, as mentioned. Here are the tools we used.

- Blender 2.93.1 - The apex predator of software! Blender Rex! Long live Blender!

- Linux - we both used Debian Linux exclusively, like we always do.

- Mercurial - for version control and coordination. I’m going to write an entire blog post about how Git failed us in surprising ways.

- Inkscape - for SVG graphics work. It really is a fine program, but it can be maddening if you have Blender habits burned into your brain.

- feh - a great, fast image viewer. You can almost play back the animation at full frame rate directly from the lossless PNG frames.

- Audacity - used to record and edit sound effects.

- Bash - I wrote a tidy script to run custom batches of render jobs. Also essential for just "using a computer".

- rsync - to exchange render batches.

- SSH - for remote render job setup and general remote configuration.

- GNU screen - for remote login sanity and to keep remote render job sessions alive.

- ImageMagick - there’s evidence of IM all over this page!

- GIMP - to prep textures and masks, and to curate references.

- ffmpeg - for making video previews quickly and fuss-free. Well, mostly fuss-free; I had to write a script to create sequentially numbered frame symlinks, because ffmpeg can’t seem to assemble frames numbered 1, 3, 5, 7, etc.

- vlc - checking video. Really needed the `--no-video-title` option!

- mplayer - double checking video.

- byzanz-record - used for making animated GIFs for testing, like screen capture but with animation.
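The ffmpeg workaround from the tools list can be sketched like this. It isn't our actual script; it's a Python version of the same idea, using a hypothetical helper `link_sequential` and a hypothetical naming pattern `seq_00000.png`. It sorts the rendered frames (assuming zero-padded names, so text order matches frame order) and points gap-free, sequentially numbered symlinks at them, which ffmpeg's numbered-image input can then read in one pass.

```python
from pathlib import Path


def link_sequential(frames, out_dir, pattern="seq_{:05d}.png"):
    """Create gap-free symlinks seq_00000.png, seq_00001.png, ... in out_dir
    pointing at `frames` in sorted order, so numbering like 1, 3, 5, 7
    becomes 0, 1, 2, 3. Assumes zero-padded source names so that sorting
    by path also sorts by frame number. Returns the links created."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    links = []
    for i, src in enumerate(sorted(Path(f) for f in frames)):
        link = out / pattern.format(i)
        if link.is_symlink() or link.exists():
            link.unlink()  # replace whatever a previous run left here
        link.symlink_to(src.resolve())
        links.append(link)
    return links


if __name__ == "__main__":
    import sys
    # usage: python link_frames.py OUT_DIR FRAME [FRAME ...]
    made = link_sequential(sys.argv[2:], sys.argv[1])
    print(f"linked {len(made)} frames into {sys.argv[1]}")
```

The links could then be fed to ffmpeg with something like `ffmpeg -framerate 30 -i OUT_DIR/seq_%05d.png -c:v libx264 -pix_fmt yuv420p preview.mp4`, where the 30 fps preview rate is only an assumption.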

The one auxiliary system we used that is not openly licensed was for communication: Discord. Discord isn’t the greatest program in the world, but thanks to all the other messaging/conference software, it sure seems like it by comparison!