Some of my other relevant notes are here.

X Window System

This is a note describing how to use the X Window System between two hosts. More importantly it is an investigation into why that might not be such a great idea and what alternatives are available.

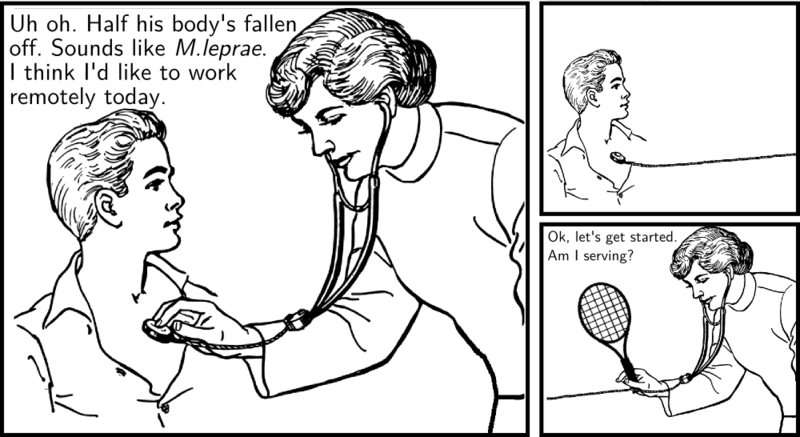

(Inside joke.)

Overview

The "X Window System" is the pedantic name for the graphical interface technology (generally) used by Linux (I will abbreviate this to "XWS"). It consists of two major pieces of software. First is a server which accepts requests to do things to a display that the server has control over. Second is the low-level C library called Xlib. This is the library that programs use to interact with X servers. Programs written with Xlib functions are called clients. These clients connect to a running X server and make requests for it to do the desired things.

A classic example of an X Window System client is xclock. This program uses Xlib functions to contact a server and, if successful, request that it draw an analog clock on a display that the server controls. It then periodically makes subsequent requests to the server to redraw the clock so that it shows the correct time.

This is why the applications you, the user, are running are generally called clients as far as the XWS is concerned, even if they provide server functionality with respect to other protocols (where they might rightly be called servers).

Note that the XWS does not specifically mandate or endorse a particular window manager. A window manager is a program that is just another client using Xlib which can contact and interact with a running X server. Its function, as its name suggests, is to manage the properties of other windows (X clients) that may be running on the display. The protocol provides for some special consideration for window managers, but in principle they are just X clients like any other program that uses Xlib. The nice thing about this is that it allows one to choose among the many different window managers that are available. KDE and GNOME (strictly speaking, desktop environments that bundle their own window managers) are the major ones that compete for mass appeal, while specialty ones like ratpoison are for people with a clear idea about what they want to do with their displays.

Network

X clients use Xlib to talk to an X server. The most common arrangement is that a user spawns a process that uses Xlib to make graphical requests to an X server running on the same system. In this simple case, the user, the program doing the user’s business, and the X server which moderates the display are all on the same physical machine.

But the designers of the XWS were under the heavy influence of new and exciting networking technologies. They figured that if you want to make a print server, you wouldn’t tie it to just one machine (the way the overwhelming majority of printers are actually set up). They immediately thought of a network with its arbitrary interface. Their main concern at the time was interoperability of displays and proprietary systems. But they also envisioned the possibility of producing graphical displays on systems that weren’t running the programs that generated what to plot.

More modern X systems seldom use the open network port (TCP 6000). This is for two reasons. First, just having an X server port open invites all kinds of terrible security mischief where an attacker can basically display arbitrary things on a user’s display (like "Enter your password now:") and then capture a user’s interface events (like the key presses of a password being entered). The second reason that this port is usually no longer open is that modern X systems tend to use Unix domain sockets to mediate client/server requests and connections. By skipping the overhead of a networking stack designed for arbitrary traffic, the fully local system can be more responsive.

However, this does not mean that X servers are incapable of receiving connections from remote clients. The modern way to use X remotely is to tunnel the traffic to the X server in a secure protocol.

Tunnelling Over SSH

Since an attacker who can eavesdrop on the network traffic of an X session basically can control the clients and server to the same extent that the legitimate user can, remote traffic must be protected. In any case where some arbitrary networking protocol must be wrapped in an additional layer of security, the first thing to think of is SSH (Secure SHell). SSH is normally for creating a secure login terminal on a remote machine, but its modular design allows it to wrap any arbitrary connection with the same high-quality public key security.

Since protecting X traffic is a complex yet well-used feature, SSH has specific provisions for setting up the correct port forwarding needed for secure remote X sessions to work. There are two actually.

The first and classic way to have SSH handle X traffic is to log in with -X like this:

    $ ssh -X user@remotehost.xed.ch

This should set up the necessary things that X clients on the remote host need to forward their traffic over the SSH connection to where their server is (generally where the user is sitting).

The problem with -X is that it uses the X server "SECURITY" extension, which is somewhat fussy in how it does business. Clients forwarded this way are treated as untrusted, which is designed to protect you, the remote user, from malicious users or programs on the system you logged into.

There is another way which is less restrictive and does all the SSH forwarding magic but without worrying too much about the SECURITY extension. This is the -Y option.

    $ ssh -Y user@remotehost.xed.ch

Note that using this option implies that you trust pretty much everything the remote host is doing. If it is your box or one used only by your office, it should be fine. This is the recommended option for having the least trouble with connecting and using X clients remotely.

If I log in without -X or -Y and try to run a graphical program, I get Error: Can't open display:. Once I add one of those options, SSH creates a .Xauthority file and also sets the DISPLAY environment variable, which tells the X clients where to connect. As far as the clients are concerned they’re connecting to a local X server, but SSH is actually tunneling that traffic to the remote site (remote to the program, local to the human user).
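To make the DISPLAY variable less mysterious, here is a small sketch that takes one apart. It assumes the usual host:display.screen form and the X convention that display N corresponds to TCP port 6000 + N. The function name parse_display is my own invention for illustration, not part of any X tool.

```python
import re

X11_BASE_PORT = 6000  # display :N maps to TCP port 6000 + N when TCP is in use


def parse_display(display):
    """Split a DISPLAY value such as 'localhost:10.0' into its parts."""
    match = re.fullmatch(
        r"(?P<host>[^:]*):(?P<number>\d+)(?:\.(?P<screen>\d+))?", display
    )
    if match is None:
        raise ValueError(f"not a DISPLAY value: {display!r}")
    number = int(match.group("number"))
    return {
        "host": match.group("host"),  # empty string means a local socket
        "display": number,
        "screen": int(match.group("screen") or 0),  # screen defaults to 0
        "tcp_port": X11_BASE_PORT + number,
    }


# A value of the kind SSH typically sets on the remote side.
print(parse_display("localhost:10.0"))
```

With a forwarded session the host part is normally localhost and the display number is 10 or higher, so the client thinks it is talking to a local server on port 6010 while SSH quietly carries the traffic home.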

Compression

When you think of all the dots on your monitor and all the key press and mouse events that constantly go on during a normal interactive session, you should start to get the feeling that there may be a lot of information involved in accomplishing a remote X session. There is no magic. If a program running on a remote machine changes a dot on the local display, that dot must be part of a message. Worse, that message is encrypted which adds some additional overhead.

Modern computers tend to have more processing power than they need and if you’re running remote X clients, this is almost certain to be true while your computer waits for display information to pass to the server. You can turn on compression of the SSH stream, which reduces bandwidth use at the expense of requiring more CPU time on both computers. This is usually fine for the remote user’s (X server’s) host since it is waiting for display information. However, if the client is running on a shared computational node, that could be problematic.

The way to turn on compression is with -C.

    $ ssh -C -Y user@remotehost.xed.ch

In general, it is better to use it when possible.
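To get a feel for why compression helps some X traffic a lot and other traffic not at all, here is a rough sketch using Python's zlib module as a stand-in for what -C does. The sample data is made up: a flat single-colour region plays the part of simple GUI drawing, and random bytes play the part of data that is already compressed, such as video.

```python
import os
import zlib


def compressed_ratio(payload):
    """Return compressed size divided by original size (smaller is better)."""
    return len(zlib.compress(payload)) / len(payload)


# A flat, single-colour 100x100 region at 3 bytes per pixel (invented sample).
flat_region = bytes([0x20, 0x40, 0x80]) * (100 * 100)

# Random bytes stand in for content that has no redundancy left to squeeze.
noisy_region = os.urandom(len(flat_region))

print(f"flat:  {compressed_ratio(flat_region):.3f}")
print(f"noisy: {compressed_ratio(noisy_region):.3f}")
```

The flat region shrinks to a tiny fraction of its size while the noisy one does not shrink at all, which is worth remembering before expecting -C to rescue a remote video player.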

Is This A Good Idea?

To me, remotely running X clients is like cannibalism: it may sound good in theory and in an emergency it can certainly be very useful, but it is probably best avoided when possible. I find that it is extremely rare that I really need to do graphical computing far away from where I’m sitting. Usually if I have a graphical display in front of me, the clients that best drive that display are also local. What tends to give people trouble is that the data they want to work with is remote.

Alternative - Version Control

Let’s say that you’re working on a project with a graphical editor at work and when you go home you want to continue to work. You think it might be worth running the editor on the remote machine and continuing as usual. Depending on the latency to your house this could drive you mad. But even if it doesn’t, if the work machine has limited bandwidth which is shared with other users, then you could be negatively impacting their less profligate activities.

In a case like this, the classy solution is to use a version control system like CVS (Concurrent Versions System) or Subversion or Git or Mercurial. This allows you to check out a copy of the project to your home and work on it and merge the changes back into the main repository at work when you’re ready.

Getting used to working like this has numerous advantages:

- Allows work to continue when bandwidth is zero (at the beach, for example).
- Allows mistakes to be undone.
- Allows others to work on the same thing at the same time and changes are sensibly handled.
- Allows progress to be tracked in complex projects.
- Creates implicit back up copies hither and yon.

Alternative - NFS

Maybe you need something more interactive. Maybe you’re graphing data that is being dumped into a set of files on the remote machine. If you can create a Network File System mount you can work on the remote files from far away with the only bandwidth incurred being the necessary remote data. NFS is a natural way to distribute data in a work group, but it generally is not very secure and not advised for general purpose long distance remote exporting.

Alternative - SSHFS

SSHFS may be useful if NFS seems attractive, but you face the following NFS-related problems:

- Don’t have the authority to run an NFS server remotely.
- Must go through firewalls.
- Need security.

As long as you have the ability to log in with SSH (as you would if you were tunneling X) you can use SSHFS. Basically SSHFS is a virtual file system using Linux’s FUSE feature, which allows user space programs to implement file systems. What this means is that you can mount a remote file system over SSH and see it on your machine as if it were a local directory. The only network traffic is the file data, the file system management traffic, and the encryption overhead. To use SSHFS do something like the following:

    sshfs xed@remotehost.xed.ch:/home/xed/projects/ ~/remotehost_projects

Of course you’ll need to create a mount point directory (here it is remotehost_projects). But once this mount is established you can list that directory and all your remote files will appear. You can work on them with your local graphical software and they’ll be updated (securely) on the remote system. When you unmount the connection, the files will cease to be available on your local system but the remote ones will be as you left them.

Note: I had a lot of trouble with sshfs seeming to mount but then producing I/O Errors and weird directory listings. The answer appears to be to make sure you have a trailing slash on the remote directory as shown above.
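If you mount the same thing often, a tiny wrapper can take care of the mount point and the trailing slash for you. This is only a sketch: the function names are mine, it assumes sshfs and fusermount are installed, and the user, host and paths are just the example values from above.

```python
import os
import subprocess


def sshfs_command(user, host, remote_dir, mount_point):
    """Build the sshfs argument list, forcing a trailing slash on the remote dir."""
    if not remote_dir.endswith("/"):
        remote_dir += "/"  # works around the I/O errors described in the note
    return ["sshfs", f"{user}@{host}:{remote_dir}", os.path.expanduser(mount_point)]


def mount(user, host, remote_dir, mount_point):
    """Create the mount point if needed, then run sshfs."""
    command = sshfs_command(user, host, remote_dir, mount_point)
    os.makedirs(command[-1], exist_ok=True)
    subprocess.run(command, check=True)


def unmount(mount_point):
    """Unmount a FUSE file system as an ordinary user."""
    subprocess.run(["fusermount", "-u", os.path.expanduser(mount_point)], check=True)


# Show the command without running it.
print(sshfs_command("xed", "remotehost.xed.ch", "/home/xed/projects",
                    "~/remotehost_projects"))
```

Calling mount() with the same arguments does the real thing, and unmount("~/remotehost_projects") lets go of it afterwards.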

Alternative - NXserver

Let’s say you have a problem that really needs a remote machine to produce some graphics and you need those graphics on your local machine. This can be very frustrating if you have a slow connection or a clumsy X client running remotely. NXserver is a proprietary product of NoMachine which basically optimizes the XWS traffic for network portability. It is quite impressive and can really do a lot to help in difficult situations.

Keep in mind, however, it is proprietary and for profit and licensing is not always straightforward in my opinion. The free software world does not sit by long and let a cool thing remain in the hands of the plutocrats who can afford it. Keep an eye on FreeNX which aims to reproduce the impressive results of NXserver as free software.

Oh hey, check it out! Looks like we’ve been saved from NXannoyances by a more recent, less proprietary project.

Alternative - x2go

A new better version of the NX protocol based system.

Alternative - Xpra

Xpra is "screen for X" so says their website. It looks like it can tunnel X connections over SSH and reestablish sessions later.

Note: Andrey says it requires the x11-ssh-askpass package if a password is required to connect to the server machine, otherwise sshd might block the account for some time after a connection attempt.

When Is It A Necessary Evil

I very, very rarely tunnel X over a network. I’m more likely to start an X server on a remote host to do something like watch a movie across the room. But there are times when I’ve found no other choice but to run X clients remotely:

- Proprietary hardware interfaces - Sometimes equipment in our machine room (necessarily remote) requires GUI controls to set or monitor it. This is maddening, but tunneling can solve the problem. This is also closely related to a situation where the client (perhaps a Raspberry Pi) is only 32-bit and the application (perhaps Discord) has no native executable. Obviously the whole situation is suboptimal, but remote X can get you out of such annoyances if you really need it.
- License issues - Sometimes you want to run a graphical program but you do not have permission to run it locally. You can run it remotely where it is licensed and tunnel the entire display interaction. This is expedient, but overall a rotten strategy to make a habit of. If you have to do it, you have to do it, but thinking out a better scheme or using free software may work better in the long run.
- Security - Sometimes you want to do something on a specialized server in the machine room that is complicated. It may be possible to do what you need to in a better way, but you’re not sure what the security implications are. An example of this is using a graphical tool like gq to administer an LDAP server. You could try encrypted requests, but if your certificates are giving problems, etc, it might just be better to log in and trust SSH’s security while you sort things out. I think SQL database administration tools can have the same issues. Best to do it right, but if you can’t, remote X can save the day.
- Huge images - Let’s say you have a very fancy program for looking at astronomically huge images living on a remote machine. Maybe it’s a far off telescope collecting terabyte sized images. You may want to fire up the specialty image browser at the observatory so that you don’t have to transmit the entire skyscape over the net connection. It’s pretty rare that your data file is bigger than the entire graphical transaction of editing it. But it can happen. Cryo electron microscopy protein structure solving is another plausible use case.
- Complex multiparty coordination at the server involving complex graphics. The quintessential example is something like Google Stadia, a streaming game platform.

Alternative - TeamViewer

I have not used this but it seems to work fine if you’re ok with onerous proprietary entanglements and the likelihood of some pretty heavy snoopification. The chance of TeamViewer being a joint venture between the CIA and their German intelligence counterpart is pretty high. If that seems like wingnut conspiracy madness, come back after you’ve looked into Crypto AG.

I was able to install this (version 15.2.2756 at the time) on Lubuntu with the following procedure.

    $ cd ~/Downloads
    $ wget https://download.teamviewer.com/download/linux/teamviewer_amd64.deb
    $ sudo apt install libqt5x11extras5 qml-module-qtquick2 \
        qml-module-qtquick-controls qml-module-qtquick-dialogs \
        qml-module-qtquick-window2 qml-module-qtquick-layouts \
        qml-module-qtquick-privatewidgets
    $ sudo dpkg -i teamviewer_amd64.deb

I don’t know much more about this.

How To Check?

No two remote computing needs are the same. If you’re really interested in how problematic your remote X situation may be, you have to actually check. Since X server events and Xlib calls tend to be user-based real time affairs they can be quite random. In fact, they are literally the way programs which need high entropy (PGP) get it.

To get a good feel for what the network is doing while you’re remotely tunnelling the graphical environment, you can set up a monitor. I used the following program:

    /* bytecounter.c
     * Chris Edwards
     * Reads stdin and counts the number of bytes. Unlike `wc` this gives
     * running counts as it receives data allowing you to monitor flows
     * that do not cleanly begin or end (log tails or tcpdumps, etc). */
    #include <stdio.h>

    int main(void)
    {
        int c;                 /* holds the character returned by getchar */
        unsigned long n = 0;   /* count */

        while ((c = getchar()) != EOF) {
            n++;
            if (n % 10 == 0) { /* refresh the display every 10 bytes */
                printf("\r<%lu>", n);
                fflush(stdout);
            }
        }
        printf("\r<%lu>\n", n); /* final total, including any remainder */
        return 0;
    } /* End main */

Compile this with gcc -o bytecounter bytecounter.c and run the following:

    sudo tcpdump -U -s 0 -i eth0 -w - host remotehost.xed.ch | ./bytecounter

This will watch network device eth0 and forward copies of all packets exchanged with remotehost.xed.ch over that device to the bytecounter program, which keeps a running display of what’s going on. This can be very illustrative.
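If you would rather not compile anything, roughly the same running counter can be sketched in Python. This is my own approximation of bytecounter rather than a port, and the chunk size and reporting interval are arbitrary choices. It writes the running total to stderr so that it stays visible even if stdout is redirected.

```python
import io
import sys


def count_bytes(stream, report_every=4096, out=sys.stderr):
    """Count bytes from a binary stream, printing a running total as data arrives."""
    total = 0
    next_report = report_every
    while True:
        # read1 returns whatever is available instead of waiting for a full buffer
        chunk = stream.read1(65536) if hasattr(stream, "read1") else stream.read(65536)
        if not chunk:
            break
        total += len(chunk)
        if total >= next_report:
            print(f"\r<{total}>", end="", file=out, flush=True)
            next_report = total + report_every
    print(f"\r<{total}>", file=out)  # final total
    return total


# Demonstration on an in-memory stream of 10000 bytes.
print(count_bytes(io.BytesIO(b"x" * 10000)))
```

To use it in place of the C program, call count_bytes(sys.stdin.buffer) at the bottom of a script and pipe the tcpdump output into that script.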

Results

For the first test I was editing text files. The numbers show the amount of network traffic generated. The first row shows the traffic required just to open the editor with no file. The small edit was basically taking a file containing cal 2012 and doing G:r!cal 2013 in vim or gvim and saving. The big edit involved scrolling through an 8MB file containing the works of Plato until I got to 10% of the document and then jumping to the end and then exiting.

| Test | ssh -C (vim) | sshfs (vim) | ssh -X (gvim) | ssh -CX (gvim) | nxclient (gvim) |
|---|---|---|---|---|---|
| Open Editor | 1.4 k | 0 | 274 k | 101 k | 29 k |
| Edit small | 8 k | 39.9 k | 783 k | 222 k | 84 k |
| Edit large | 516 k | 3,159 k | 72,874 k | 18,283 k | 5,536 k |

As you can see, simple things can probably sneak by with gvim, but once the interaction gets busy, the overhead is considerable. NXclient is really pretty remarkable in its efficient use of bandwidth. Also note that the SSHFS method had to download enough blocks to display everything I wanted. This made it (using the editor locally) less efficient than just logging in and doing the edit over the network. And on small edits, the file system overhead is also not competitive. For many other applications, however, sshfs is a great option. It also will have the least latency problems for real time operations.

Next I ran the ICM molecular modelling and analysis suite to get results for a busy graphical application. This didn’t have really any file data and was concentrating mostly on the X server traffic generated by the interface. For this test I started by just loading the GUI version of the program. Then I loaded a hepatitis E virus "3hag" protein and displayed it. Next I had ICM construct the 60 facet icosahedral capsid sphere of this virus (60 times more model).

| Test | ssh -X | ssh -CX | nxclient |
|---|---|---|---|
| icm -g load | 2,361 k | 803 k | 19 k |
| icm "3hag" | 9,311 k | 2,137 k | 168 k |
| icm "3hag"x60 | No chance | 58,671 k | 752 k |

Again the value of SSH compression is evident. And again the bandwidth requirement of the NXserver is quite impressive.
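To put numbers on "evident" and "impressive", here is a quick sketch that turns the measurements above into ratios against plain ssh -X. The figures are copied straight from the two tables (in the same "k" units). The x60 row is left out because plain ssh -X never finished it, and the helper name savings is just mine.

```python
# Traffic figures copied from the two results tables.
editing = {
    "Open Editor": {"ssh -X": 274, "ssh -CX": 101, "nxclient": 29},
    "Edit small": {"ssh -X": 783, "ssh -CX": 222, "nxclient": 84},
    "Edit large": {"ssh -X": 72874, "ssh -CX": 18283, "nxclient": 5536},
}
icm = {
    "icm -g load": {"ssh -X": 2361, "ssh -CX": 803, "nxclient": 19},
    'icm "3hag"': {"ssh -X": 9311, "ssh -CX": 2137, "nxclient": 168},
}


def savings(rows, baseline="ssh -X"):
    """For each test, how many times less traffic each method used than the baseline."""
    return {
        test: {
            method: round(row[baseline] / amount, 1)
            for method, amount in row.items()
            if method != baseline
        }
        for test, row in rows.items()
    }


for table in (editing, icm):
    for test, ratios in savings(table).items():
        print(test, ratios)
```

In these tests compression alone cut the traffic to somewhere between a third and a quarter, while nxclient needed roughly a tenth of it for editing and far less than that for the ICM GUI.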

Final recommendations

- If you’re in a network constrained environment, such as where a file server is already laboring under its traffic, tunnelling X will only make things worse or much worse.
- In a multi user environment, routing X traffic over a shared network can be rudely anti-social.
- In these days of workstations with way more power than needed to drive displays, making remote machines drive them is probably sloppy and unfortunate.
- Tunnelling to machines in the same physical network (same room, etc) is probably fine from a performance standpoint if there are no other pressing constraints.

When I thought about this issue, I thought about cables. I wondered what the difference was between the bandwidth of monitor cables vs. network cables. If my calculations are correct, HDMI cables can carry the following bandwidth: (1920x1200 60Hz 24bpp = 3,317,760,000bits/second) 415 MB/second.

And gigabit ethernet implies that Cat6 network cables can carry 125 MB/second. This is probably part of why HDMI cables tend to top out at around 15 meters while Cat6 can run 100 meters. Just from this standpoint it seemed obvious to me that whatever was going on in the monitor cable probably shouldn’t be sent out over the network. One might argue that X protocol traffic is efficient and vector oriented. This may be true for things that are amenable to such an approach. But for many of today’s modern applications (video, games) this seems unwise to count on. The X protocol is definitely full of ways to write raw buffers of bitmap data (inefficiently, not as vectors) to the server.
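The cable arithmetic is easy to check. This sketch only multiplies out the raw pixel data for the display mode mentioned above and ignores blanking intervals and link encoding overhead, so real cable figures will differ somewhat.

```python
def raw_video_bits_per_second(width, height, refresh_hz, bits_per_pixel):
    """Uncompressed pixel data rate, ignoring blanking and link encoding."""
    return width * height * refresh_hz * bits_per_pixel


display_bits = raw_video_bits_per_second(1920, 1200, 60, 24)
display_mb = display_bits / 8 / 1e6   # megabytes per second
gigabit_mb = 1_000_000_000 / 8 / 1e6  # gigabit ethernet line rate in MB/s

print(f"display: {display_bits:,} bit/s = {display_mb:.0f} MB/s")
print(f"gigabit ethernet: {gigabit_mb:.0f} MB/s")
print(f"ratio: {display_mb / gigabit_mb:.1f}x")
```

So one modest monitor's raw pixel stream is a bit more than three times what a gigabit link can carry at its theoretical best.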

When I thought about the big jumps in network infrastructure speeds (10Mbit, 100Mbit, 1Gbit, 10Gbit) I thought that maybe network speeds are catching up. It does seem likely that they already are sufficient for old school applications. But that’s the problem. Displays get bigger too and applications get busier. However, it does look like the trend is that network speeds are increasing faster than requirements for monitor data. Maybe it will one day be trivial to have a monitor cable’s data tunneled over the internet. While that’s a possibility, I still think it’s best to plan to be economical in one’s use of remote computing bandwidth. When I think of the display walls composed of dozens of monitors, I imagine our appetite for display bandwidth is just getting started.

Creative Control Of Events

Let’s say you needed to automate some kind of video game grinding where the same key sequence is mindlessly pressed over and over, the more the merrier. Is it possible to synthesize keyboard and mouse events from nothing? In theory, yes. As another example, imagine you want to do some work on an AI algorithm that controls a simulation, for example, a driving game. How can you hook up your autonomous driving agent to the driving game’s input?

The arcane details of this topic can be investigated with these random tips.

- This article has a little cc program that can inject keyboard events.
- sudo cat /dev/input/event4
- ls -l /dev/input
- cat /proc/bus/input/devices
- xev
- xdotool works well for synthesizing fake keyboard events and may already be installed.
- Evemu "…making it possible to emulate input devices through the kernel’s input system."
- sudo apt-get install libevdev-dev libevdev-tools libevdev2
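The raw bytes that come out of sudo cat /dev/input/event4 are not much fun to read. Here is a sketch of decoding them in Python. It assumes the struct input_event layout of a 64-bit Linux system (two longs of timestamp, then type, code and value); a 32-bit system lays this out differently. The function names and the default device are just for illustration.

```python
import struct

# Assumed layout of struct input_event on 64-bit Linux.
EVENT_FORMAT = "llHHi"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)


def decode_events(raw):
    """Yield (seconds, type, code, value) for each complete event in a byte string."""
    for offset in range(0, len(raw) - EVENT_SIZE + 1, EVENT_SIZE):
        sec, usec, ev_type, code, value = struct.unpack_from(EVENT_FORMAT, raw, offset)
        yield sec + usec / 1e6, ev_type, code, value


def watch(device="/dev/input/event4"):
    """Print events from a device node until interrupted (usually needs root)."""
    with open(device, "rb", buffering=0) as node:
        while True:
            data = node.read(EVENT_SIZE)
            if not data:
                break
            for event in decode_events(data):
                print(event)


# Decode a hand-made sample: one absolute axis event and its sync marker.
sample = struct.pack(EVENT_FORMAT, 5, 250000, 3, 0, 512)
sample += struct.pack(EVENT_FORMAT, 5, 250000, 0, 0, 0)
print(list(decode_events(sample)))
```

Running watch() as root while wiggling the device should show the same kind of information that evemu-record reports, just with less polish.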

How To Inject Control

The focus of this exercise will be on getting synthetic steering wheel events into a driving game as if the wheel was manually used. This will be helpful for creating autonomous assists for driving games.

Here is how I have done this (over SSH no less). Start by finding which event number your wheel is using in the /dev/input kernel-generated pseudo tree. Here it is event5.

    $ grep -A8 -B1 Force /proc/bus/input/devices
    I: Bus=0003 Vendor=046d Product=c29a Version=0111
    N: Name="Driving Force GT"
    P: Phys=usb-0000:00:12.1-3/input0
    S: Sysfs=/devices/pci0000:00/0000:00:12.1/usb6/6-3/6-3:1.0/0003:046D:C29A.0006/input/input8
    U: Uniq=
    H: Handlers=event5 js0
    B: PROP=0
    B: EV=20001b
    B: KEY=1f 0 0 0 0 0 0 ffff00000000 0 0 0 0
    B: ABS=30007
    $ evemu-describe /dev/input/event5 | grep ^N:
    N: Driving Force GT
    $ evemu-record /dev/input/event5 > /tmp/wheelevents
    ^C
    $ evemu-play /dev/input/event5 < /tmp/wheelevents

After starting the evemu-record you can play with the wheel until pressing Ctrl-C. Then fire up the game, get it ready to notice the synthetic input, and run the evemu-play. You should see the game behave as if the wheel is being turned like you turned it when recording.

The next step is to truly synthesize novel events programmatically.
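One possible way to take that next step is to write events to the device node yourself instead of replaying a recording. The sketch below is untested against a real game. It assumes the 64-bit struct input_event layout, that steering arrives as ABS_X events, and that writing to the event node injects them the way evemu-play does. The axis range in sweep() is a guess, so check evemu-describe for the real limits of your wheel.

```python
import struct
import time

EVENT_FORMAT = "llHHi"       # assumed struct input_event layout on 64-bit Linux
EV_SYN, EV_ABS = 0x00, 0x03  # event types from linux/input-event-codes.h
SYN_REPORT, ABS_X = 0x00, 0x00


def steering_event(position, now=None):
    """Encode one wheel position as an ABS_X event followed by a SYN_REPORT."""
    now = time.time() if now is None else now
    sec, usec = int(now), int((now % 1) * 1_000_000)
    return (struct.pack(EVENT_FORMAT, sec, usec, EV_ABS, ABS_X, position)
            + struct.pack(EVENT_FORMAT, sec, usec, EV_SYN, SYN_REPORT, 0))


def sweep(device="/dev/input/event5", low=0, high=16383, steps=50, delay=0.02):
    """Turn the wheel axis from low to high; the range is a guess, not a measurement."""
    with open(device, "wb", buffering=0) as node:
        for step in range(steps + 1):
            node.write(steering_event(low + (high - low) * step // steps))
            time.sleep(delay)


# Show the encoded size of a single steering update without touching any device.
print(len(steering_event(8192, now=10.5)))
```

An autonomous driving agent would call steering_event() with whatever position it decides on and write the result to the wheel's node, most likely as root.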

How to Capture Display

This is just not going to be easy. You’ll need to recreate the business end of something like simplescreenrecorder or Open Broadcaster Software (OBS). I believe these use the MIT shared memory extensions to X. See man xshm.

Here’s a C++ strategy for doing that which integrates with OpenCV.

Python

If the performance works out, here’s a strategy for Python. This uses PIL’s ImageGrab which helpfully does take a bounding box. There is also Python’s mss which stands for Multiple Screen Shots. The full documentation for mss explicitly says: "…it could be easily embedded into games and other software which require fast and platform optimized methods to grab screen shots (like AI, Computer Vision)". There are good examples here, including region of interest capture.
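As a starting point, here is a sketch of grabbing the same region with either library. The helper names are mine, both packages are third party and must be installed separately, and I have not measured how either performs. The one real difference to watch is that ImageGrab takes a (left, top, right, bottom) box while mss wants left, top, width and height.

```python
def bbox_to_region(left, top, right, bottom):
    """Convert a PIL-style bounding box into the region dictionary mss expects."""
    return {"left": left, "top": top, "width": right - left, "height": bottom - top}


def grab_with_mss(bbox):
    """Capture one region with mss (requires the mss package and a display)."""
    import mss  # third party: pip install mss
    with mss.mss() as screen:
        return screen.grab(bbox_to_region(*bbox))


def grab_with_pil(bbox):
    """Capture the same region with Pillow's ImageGrab (requires Pillow and a display)."""
    from PIL import ImageGrab  # third party: pip install Pillow
    return ImageGrab.grab(bbox=bbox)


# The conversion can be checked without a display or either package installed.
print(bbox_to_region(100, 50, 740, 530))
```

Timing grab_with_mss() and grab_with_pil() in a loop over the game window's region would be the obvious way to find out whether the performance works out.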