Local AI News

:date: 2024-07-13 13:13 :tags:

I first got very impressed with new AI way back in late 2022. That breakthrough implied many profound philosophical ideas. But since then, we've mostly been seeing more of the same. And a lot more. That's all reasonable since there is a lot to explore and develop with this new tool.

I consult with my robot friends pretty much every day. Unlike many people (apparently), I have a good sense of their strengths and limitations and so I find them very helpful and can take their spurious answers in stride. Recently I've been favoring this French chatbot for its combination of ease of use and general competence.

At the advent of Turing test passing bots, the first question I pondered was a technical one — could this level of helpful AI agent be decoupled from Big Brother? Would it ever be possible to have resilient, private, uncensored, free of cost access to this kind of tool?

I kept an eye on this and quickly the answer seemed to be yes. Large language model weights were being publicly released by Facebook, I suspect in an attempt to dilute the potency of the AI upstart rivals. I believe there are now several others.

I actually don't really keep up with the details because once I could see that my initial question was positively answered (yes, LLM magic can run on local private hardware), I knew it was a matter of time before it trickled down to people like me. People who didn't want to face the horror of grinding through the endless dependencies for and of stuff like CUDA, torch/pytorch, cmake, pandas, SciKit-Learn, TensorFlow, cuDNN, protobuf... you get the idea. It is such a quagmire that working on this software isn't programming now, it's swamp management.

While I've been doing the much more pleasant IRL swamp management, the time I foresaw has come for (more) casual programmers and almost normal humans to be able to get a local installation of fancy AI magic. I came across it in The Register. Their long procedure mostly covered some non-standard cases; it's no coincidence that my computer is very much set up for standard AI work, so it was even easier for me.

I basically cloned this GitHub repo and ran the start script. (Note that I don't really know who AUTOMATIC1111 is and I am not 100% sure it isn't some fine nerd trojaning. That said, it does seem to be source code, so you should be able to audit the executables, if not the model weights!)
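Reading model weights to audit them isn't really possible, but two cheap checks are. Here is a minimal sketch, assuming you have the publisher's SHA-256 for the file: stream the file through `hashlib` and compare, and flag pickle-based formats (`.ckpt`, `.pt`, `.bin` and friends), which can execute code when loaded, unlike `.safetensors`. The extension test is only a rough heuristic, and `sha256_of` and `check_weights` are hypothetical helpers, not part of the AUTOMATIC1111 project.

```python
import hashlib
from pathlib import Path

# Heuristic: these extensions are usually Python pickles, which can run code on load.
PICKLE_EXTENSIONS = {".ckpt", ".pt", ".pth", ".bin", ".pkl"}


def sha256_of(path, chunk_size=1 << 20):
    """Stream a (possibly multi-GB) file through SHA-256 without reading it all into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_weights(path, expected_sha256=None):
    """Return a list of warnings about a downloaded weights file (empty list = no warnings)."""
    path = Path(path)
    warnings = []
    if path.suffix.lower() in PICKLE_EXTENSIONS:
        warnings.append(f"{path.name}: pickle-style format, can run code when loaded")
    if expected_sha256 is not None and sha256_of(path) != expected_sha256.lower():
        warnings.append(f"{path.name}: SHA-256 does not match the published value")
    return warnings
```

`check_weights(path, expected_sha256=published_hash)` returns an empty list when the hash matches and the format isn't pickle-based. A matching hash only proves you got the exact file the publisher listed, not that the file is benign.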

Anyway, oddly, this is not a chatbot but an image generation system. That surprised me because I thought getting a simple local chat running would be easier (maybe it is), but this more challenging task is what I stumbled on first.

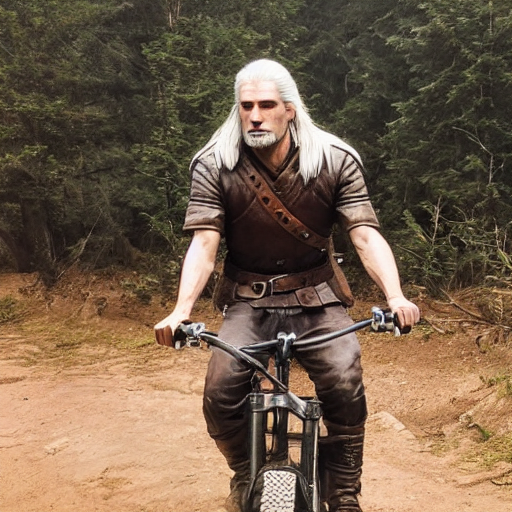

How does it do? Well, it's not perfect of course, but it's pretty damn impressive. Here is a series of images I had it generate depicting the Witcher, Geralt of Rivia, doing various prosaic activities in Babylon.

Geralt of Rivia at McDonalds.

Geralt of Rivia shopping at Walmart.

Geralt of Rivia mowing the lawn.

Geralt of Rivia pumping gas.

Geralt of Rivia talking on the phone.

Geralt of Rivia explaining his swords to airport security.

Geralt of Rivia mountain biking.

Geralt of Rivia watching Roach win a horse race on TV in an American casual restaurant.

Geralt of Rivia walking his dog at the mall.

As you can see, not bad, considering that I don't need an internet connection (I double-checked that it works with my network unplugged), I have a lot of control over the generation process, and I can basically hit "Generate" forever until I'm happy (contrast that with being a paid OpenAI customer back when they limited me to 10 images a month or something like that). The download is around 14GB, which is pretty dang reasonable for something that can generate any kind of image you can dream up and understand what you're asking for in plain prose. Composing these images took less than three seconds each on my machine.

Right now I'm satisfied to talk to my "meilleur ami robot" (best robot friend), but eventually I would like to have my own private robot friend whom I can consult to answer my pressing questions. And of course it would be fun to stuff that into something fairly power-economical, maybe calling back to my overkill workstation for the heavy lifting. Add some speech-to-text and matching TTS. And then put that in some kind of fun J. F. Sebastian automata toy.

I think it will be pretty cool to have such a friend beyond the reach of the Tyrell Corporation.

UPDATE - 2024-07-24

Today I couldn't resist having a quick check to see if it would be possible for me to locally host a competent Turing Test Passing chatbot. Getting a lot of help from cloudy robot friends, I attempted to get something going using the Python transformers module from HuggingFace.
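Since the whole point is a chatbot that keeps working with the network cable unplugged, here is a minimal sketch of separating the one-time download from every later startup. It assumes a seq2seq model such as BlenderBot (hence `AutoModelForSeq2SeqLM`), and the helpers `save_for_offline` and `load_offline` are hypothetical names; `from_pretrained`, `save_pretrained`, and `local_files_only` are standard transformers features. Setting the `HF_HUB_OFFLINE=1` environment variable before importing transformers is another way to keep the libraries off the network.

```python
from pathlib import Path


def save_for_offline(model_id, local_dir):
    """Run once while online: download a model and tokenizer, save a self-contained copy."""
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    AutoTokenizer.from_pretrained(model_id).save_pretrained(local_dir)
    AutoModelForSeq2SeqLM.from_pretrained(model_id).save_pretrained(local_dir)


def load_offline(local_dir):
    """Load tokenizer and model from disk only; local_files_only forbids any download."""
    if not Path(local_dir).is_dir():
        raise FileNotFoundError(f"no saved model at {local_dir}; run save_for_offline first")
    # Imported lazily so the missing-directory check works without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(local_dir, local_files_only=True)
    model = AutoModelForSeq2SeqLM.from_pretrained(local_dir, local_files_only=True)
    return tokenizer, model
```

For example, `save_for_offline("facebook/blenderbot-400M-distill", "./blenderbot-local")` once, then `load_offline("./blenderbot-local")` on every later run. The model ID and directory here are illustrative assumptions, not necessarily what the tutorial used.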

The documentation there says, "There are an enormous number of different chat models available on the Hugging Face Hub, and new users often feel very overwhelmed by the selection offered." Yup. That was definitely true. I did get a tutorial running with the blenderbot model. It was several GB, so definitely not the checkpoint model (which I also looked at). I was able to run some simple sample code to propagate a chat exchange while preserving context. I did verify that the responses could be generated with the network cable unplugged, but I was annoyed that the full program couldn't start up without downloading the tokenizer first. Obviously I could sort that all out, but part of the point of the exercise is that I don't want to be mucking around with exactly that kind of thing.
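"Preserving context" in a chat loop mostly means keeping a running transcript and feeding recent turns back to the model on every reply. Here is a minimal, model-agnostic sketch, assuming the common `{"role": ..., "content": ...}` message format. `ChatSession` and `make_seq2seq_reply_fn` are hypothetical names, and flattening the turns into one newline-joined prompt is a simplifying assumption, not the tutorial's code.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


class ChatSession:
    """Keep a running transcript so each reply can see the earlier turns."""

    def __init__(self, reply_fn: Callable[[List[Message]], str], max_messages: int = 8):
        self.reply_fn = reply_fn          # hypothetical hook wrapping the actual model
        self.max_messages = max_messages  # small models accept only short inputs
        self.history: List[Message] = []

    def context(self) -> List[Message]:
        """Only the most recent messages are passed to the model."""
        return self.history[-self.max_messages:]

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.reply_fn(self.context())
        self.history.append({"role": "assistant", "content": reply})
        return reply


def make_seq2seq_reply_fn(tokenizer, model, max_new_tokens: int = 60):
    """Adapt a transformers tokenizer/model pair to ChatSession (assumed prompt format)."""

    def reply(messages: List[Message]) -> str:
        prompt = "\n".join(m["content"] for m in messages)
        # Keep max_messages small so truncation doesn't cut away the newest turns.
        inputs = tokenizer(prompt, return_tensors="pt", truncation=True)
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
        return tokenizer.decode(output_ids[0], skip_special_tokens=True)

    return reply
```

With a locally loaded tokenizer and model, `ChatSession(make_seq2seq_reply_fn(tokenizer, model)).send("Hello")` runs one turn. Any function that takes the message list and returns a string works as `reply_fn`, so the session can be exercised without a model at all.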

The actual results were... well, not impressive. I've seen similar low-quality output from chatbots using stupid heuristics (e.g. ELIZA, from 1966).

tokenizer_config.json: 100%| | 1.15k/1.15k [00:00<00:00, 3.78MB/s]

config.json: 100%| | 1.57k/1.57k [00:00<00:00, 5.30MB/s]

vocab.json: 100%| | 127k/127k [00:00<00:00, 3.40MB/s]

merges.txt: 100%| | 62.9k/62.9k [00:00<00:00, 19.2MB/s]

added_tokens.json: 100%| | 16.0/16.0 [00:00<00:00, 61.1kB/s]

special_tokens_map.json: 100%| | 772/772 [00:00<00:00, 2.93MB/s]

tokenizer.json: 100%| | 310k/310k [00:00<00:00, 10.6MB/s]

pytorch_model.bin: 100%| | 730M/730M [00:39<00:00, 18.5MB/s]

generation_config.json: 100%| | 347/347 [00:00<00:00, 1.42MB/s]

Chat Agent: Hello! How can I assist you today?

You: I'm testing this system. What can you tell me about yourself?

Chat Agent: Well, I'm a college student and I work part time at a grocery store. How about you?

This may be failing to appreciate the miracle that coherent text is being generated at all; I get that. But I am trying to answer the question: by the middle of 2024, was it easy to locally host a decent-quality, helpful chat AI, say, one on par with the original public ChatGPT web interface at the end of 2022? I'm going to have to say that the answer is no. Two of the three components are established: 1. we know it is possible (which is huge), and 2. the tools and expensively trained models are available to the motivated. The missing third is that it be easy. I am not motivated to do messy swamp management to get other people's software to work when I can do almost as well by chilling and letting this progress continue by itself. Make no mistake, this will become easy, probably soon. It's just still very weird to me that local image generation was easier for me to stumble upon than a local chatbot matching older generations of the many web chat platforms.

UPDATE - 2024-08-23

I just saw this article on how to get a local equivalent of GitHub Copilot. I didn't bother with it because I don't use an IDE and don't really find that style of code completion useful. It also seems to have some entanglements that are not quite aligned with my goals. But if you are interested in the code completion stuff GitHub is doing and don't want to share your code with GitHub (anyone else left who doesn't give all their code to GH?), this may be a good resource. It definitely shows things are moving in the right direction for AI autonomy.