Nootropic Risk

:date: 2023-02-21 09:53 :tags:

I am going to describe a hypothetical problem. Though it is conceptually a potential existential threat to all of mankind, I am not concerned about it in any way whatsoever. It is a problem which nobody is worrying about now and likely never has and never will.

How is this not a waste of time? Well, if you can spot any similarities to another putative existential threat, then maybe this thought experiment can help you think more clearly about such issues, saving time in the long run.

Let's begin! Imagine some "intelligent" people. In this case we have the unusual luxury of being able to define "intelligent": it is simply what these people proclaim themselves to be! These people also believe intelligence leads to "power" (the definition of which is less clearly established). They also believe that intelligence is strongly hinted at by IQ tests, GPAs, SAT scores, chess rankings, degree attainment, Substack subscribers, and similar nerd posturing and selection biases.

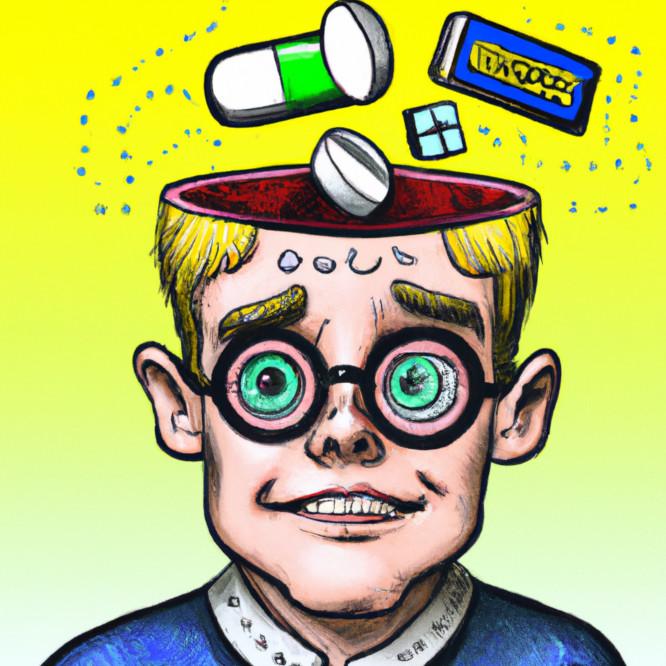

It turns out that some of these people value their (self-proclaimed) "intelligence" so much that they want to enlarge it. To maximize it. In unnatural ways. They want a commensurate unnatural increase in the "power" it accretes. Some of these people set their intelligence to the task and come up with the very intelligent idea of using magic potions to increase their intelligence. You may scoff and say that (outside of fiction) there is no such thing as a "magic potion" that increases intelligence. But these intelligent people scoff at your marginal intelligence. They in fact have an entire campaign to use intelligence to discover pharmacological substances that increase their intelligence. These substances are called nootropics, the dictionary definition of which is: "a substance that enhances cognition and memory and facilitates learning".

Imagine one day one of these people discovers, or even stumbles randomly upon, some kind of nootropic enhancement that allows them to discover yet more. With this increased intelligence they can invent even more powerful intelligence drugs. Ad infinitum. Soon this superhuman intellect will be able to improve itself into an inconceivably powerful intelligence.

It's hard — perhaps impossible — to sensibly predict how this problem would unfold, but if, for some unspecified reason, we were compelled to be as absolutely pessimistic as possible, we would be forced to accept some grim implications. First of all we will have to conclude that this is not just a possibility, but that it is inevitable. Second, we must assume that this superhuman intellect, hopped up on unimaginable hitherto uninvented intelligence enhancing drugs, will be malevolent, or at least exhibit a callous disregard for "lesser" humans. Third, we must assume that intelligence is tantamount to "power" and power begets antisocial thinking and therefore such a fantastically intelligent being would want to extinguish the entire species of lesser beings and obliterate them from any kind of historical memory.

At this point, maybe you're starting to become somewhat frightened of these hypothetical "intelligent" people. All that genocide definitely sounds bad! However, the situation is actually much worse.

You see, once these mere people start to ratchet up their intelligence, they will quickly attain powers more commonly associated with a god or even a tech billionaire. The insidious thing about this progression is that the superhuman nootropic god will not be so obtuse as to tip its hand and let normal mortals know that it has attained this exalted state (which necessitates destroying the rest of humanity). No. They will not give normal humans any chance to mount a defense. These will not be your typical monologuing supervillains! They will brilliantly disguise themselves as "ordinary" "intelligent" people. They will try to deflect suspicion by focusing attention on other concerns. For example, they may raise concerns about the putative "risks" of artificial intelligence while they prepare the final nootropic cocktail that will allow them to convert all energy in the universe into pure IQ-test-maximizing godwaves.

If I were one of these "intelligent" people, I would be comfortable pointing out that I alone have brought this existential threat to the attention of normal people, and I would ask nothing more than to be regarded as the savior of mankind. That would surely enhance the credibility of my eschatological doomsaying, right? Perhaps I'd ask you to fund my nootropic alignment safety research institute. But I am in fact just a normal guy and, while my concerns are no doubt terrifying, it turns out I could be wrong. This is something very "intelligent" people are pretty sure they are not.

So remember, if you encounter a nootropic-using, self-proclaimed "intelligent" person confidently warning everyone about impending doom at the hands of unaligned, paperclip-maximizing artificial intelligence that is literally unimaginable, you now have some equally "rational" options for whom to fear.

Update 2025-02-25

Just saw the 2014 film Lucy starring Scarlett Johansson. I hope some of the AI risk panic can be channeled into worrying about this kind of science fiction plot, because it is exactly as worrisome. Or, as the Wikipedia entry for Lucy says, "...many critics found the plot nonsensical..."