Yesterday I posted Part 1 of my analysis of the NTSB's final report on the March 19, 2018 fatal collision of an Uber Advanced Technology Group (ATG) research autonomous vehicle with a pedestrian.

You may have noticed something unusual about Part 1 — I did not explore any technical issues related to autonomous driving. In my main post on the topic from last year and in Brad’s recent analysis there is some obvious attention to the technology.

I felt it was important to properly separate the two important relevant issues.

- Are idiot drivers terrible or the worst problem ever?
- Why exactly are autonomous vehicles not yet immaculate?

As I showed yesterday, the root cause of the fatal crash was an idiot human driver who happened to work for an autonomous vehicle research team. If an engineering team is designing a new kind of blender, we can’t say much about the safety of the blender if an intern mixes too many margaritas, gets drunk, throws the blender out a 10th story window, and kills a passerby with it. Of course if such an incident did occur, an official investigation might reveal all sorts of information of great interest to professional blender designers. That is what I am doing now. In some ways I feel bad for Uber because, as with the victim’s favorite recreational drugs, their internal operations are really none of our business. However, they do operate dangerous machinery in public and a bit of extra insight in this case seems like a fair trade.

The only thing I mentioned about autonomous vehicle technology in Part 1 was this extremely provocative suggestion made by the Vehicle Operator.

Section 6f says, "When asked if the vehicle usually identifies and responds to pedestrians, the VO stated that usually, the vehicle was overly sensitive to pedestrians. Sometimes the vehicle would swerve towards a bicycle, but it would react in some way." Well, that seems on topic! At least to me! I believe I was the first uninvolved observer to notice that it seemed like the car steered into the victim. This has now been conclusively confirmed.

Let’s take a look at why I believe that the autonomous car did in fact steer into the victim.

The Vehicle Automation Report was fascinating to read. Autonomous car nerds should note that the radar made the first detection of the victim. It also got her velocity vector estimated immediately as a bonus. Of course it didn’t quite correctly guess that it was a person walking with a bicycle, but it did come up with a safe practical guess of "vehicle". Lidar comes in 0.4 s later with no idea about the object’s nature, speed, or trajectory.

Here is a very important bit in 1.6.1. It’s a bit hard to parse, but read it carefully because — when combined with a grossly negligent safety driver — this is lethal.

However, if the perception system changes the classification of a detected object, the tracking history for that object is no longer considered when generating new trajectories. For such newly reclassified object [sic], the predicted path is dependent on its classification, the object’s goal; for example, a detected object in a travel lane that is newly classified as a bicycle is assigned a goal of moving in the direction of traffic within that lane. However, certain object classifications — other — are not assigned goals. For such objects, their currently detected location is viewed as a static location; unless that location is directly on the path of the automated vehicle, that object is not considered as a possible obstacle. Additionally, pedestrians outside a vicinity of a crosswalk are also not assigned an explicit goal. However, they may be predicted a trajectory based on observed velocities, when continually detected as a pedestrian.

If I’m reading that right, the system clears any historical path data about an object when it is reclassified. This seems crazy to me.

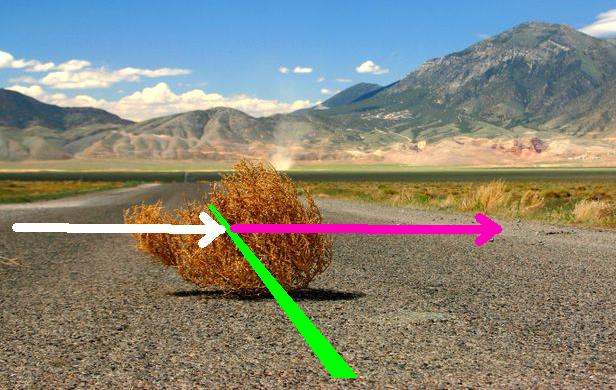

Let’s think this through with an Arizona style example. Let’s say that the perception system detects an object and classifies it as a tumbleweed.

Here the white arrow is the trajectory the system measures and the magenta arrow is the trajectory the system supposes will consequently follow. Because the object appears to be on a path to be out of the way when the system gets to where it is now, it can comfortably just keep heading straight ahead on the path shown in green.

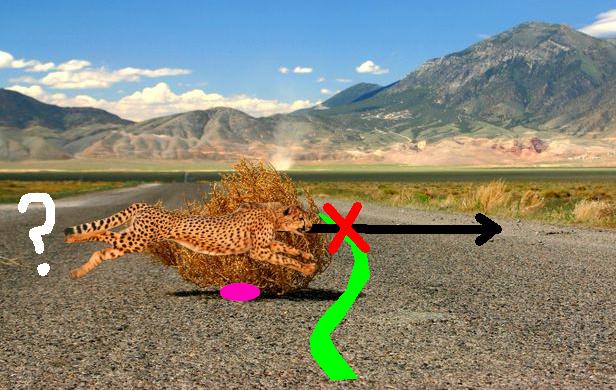

But then it gets a new closer perspective on the object and it now changes its belief to thinking it is a cat.

If I understand this right, the trajectory of the tumbleweed is now discarded (!) and the cat object gets to start over with no trajectory data, indicated in white. Now for a future prediction about where the object is headed (magenta) it only has some preconceived idea of what cats do. And we all know that cats are lazy and mostly lounge around. (At least that’s what the internet is telling me.)

So even if the car could have neatly avoided the tumbleweed/cat by going straight (because it could see that the object was heading out of frame), once it changes its belief to a cat, it might try to swerve (green path) to avoid what it assumes is a lazy, lasagne-eating cat that it believes is unlikely to be sprinting across the road.

But what if it is a cat but not doing what cats normally do?

If the cat is moving like a tumbleweed — and importantly, not like how it believes cats move — you have a recipe for disaster. The system has thrown away valuable data about the object before it was known to be a cat (white). It believes cats are lazy and sedentary (magenta). It is planning to swerve to a new path to avoid the cat (green). If the cat or whatever it is, in fact, is moving fast as originally perceived (black) the misunderstanding presents an opportunity for a collision (red X). A collision that painfully looks to human reviewers like the car was going out of its way to swerve into the cat.

In the report’s specific example (the subject of the report and a topic I care a lot about) the class of interest is bicycles. If the perception system had a murky feeling that there was something out there but didn’t really know what (e.g. "other"), it would assume that it was stationary, perhaps like the giant crate/ladder/mattress type objects I’ve encountered randomly on the freeway. It may start to believe the object is moving after some time of actually tracking it. It may even have a decent sense of how to predict where things will wind up a few seconds into the future if nobody radically dekes. But if, as the perception system gets closer and re-evaluates things, it decides that the object is a bicycle, all that motion data is discarded.

It is the inverse of my example with the same effect — the system decides that what it thought was a stationary object is really an active one, but in reality the stationary assessment was more correct. Once the object is given properties of a typical bike as the best approximation of what to expect, it is out of touch with the true situation. And that is how a pedestrian in an odd location consorting with (but not riding) a bicycle gets wildly misunderstood by a complex software planning system.
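To make the failure mode concrete, here is a minimal Python sketch of a tracker that either keeps or discards its history when the label changes. Everything in it is my own illustration, not Uber's code: the `Track` class, the `CLASS_GOAL_VELOCITY` table, the `run` helper, and all the numbers are made up. The only things taken from the report are the rules that reclassification drops the tracking history and that "other" objects get no goal and are treated as static.

```python
from dataclasses import dataclass, field

# Hypothetical class priors: an assumed (vx, vy) in m/s for each label.
# y runs along the lane, x runs across the road. None means "no goal, static".
CLASS_GOAL_VELOCITY = {
    "bicycle": (0.0, 5.0),   # assumed to ride with traffic along the lane
    "vehicle": (0.0, 15.0),
    "other": None,
}


@dataclass
class Track:
    label: str
    history: list = field(default_factory=list)  # (t, x, y) observations

    def observe(self, t, x, y, label, keep_history_on_reclassify):
        if label != self.label:
            self.label = label
            if not keep_history_on_reclassify:
                # The behavior described in section 1.6.1 of the report.
                self.history.clear()
        self.history.append((t, x, y))

    def predict(self, dt):
        """Predict the (x, y) position dt seconds after the last observation."""
        t1, x1, y1 = self.history[-1]
        if len(self.history) >= 2:
            # Average velocity over whatever history was retained.
            t0, x0, y0 = self.history[0]
            vx, vy = (x1 - x0) / (t1 - t0), (y1 - y0) / (t1 - t0)
        else:
            # No usable history: fall back on what the class "usually" does.
            goal = CLASS_GOAL_VELOCITY.get(self.label)
            vx, vy = goal if goal is not None else (0.0, 0.0)
        return (x1 + vx * dt, y1 + vy * dt)


def run(keep_history_on_reclassify):
    # An object crossing the road at 1.5 m/s, 30 m ahead, relabeled at t = 2 s.
    track = Track(label="other")
    observations = [(0.0, -6.0, "other"), (1.0, -4.5, "other"), (2.0, -3.0, "bicycle")]
    for t, x, label in observations:
        track.observe(t, x, 30.0, label, keep_history_on_reclassify)
    return track.predict(2.0)


print("history kept:     ", run(True))
print("history discarded:", run(False))
```

With the history kept, the prediction puts the object in the middle of the road two seconds later. With the history discarded, the same object is predicted to stay off to the side and ride away down the lane, which is the kind of belief that makes cutting inside it look like a fine plan.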

With that in mind it’s very chilling to read the event log (Table 1), which shows the 5 seconds before the crash, starting when the lidar first became aware of the hazard. The system changed the classification approximately seven times (the word "several" was used, which I’m counting as 3), each time erasing trajectory information.

The report’s Table 1 also confirms my analysis of the video that the car’s intended action was to cut inside the "cyclist" in preparation for a right turn. By erasing the victim’s trajectory at every muddled classification, the final guess used to make the best plan it could was that the victim was a cyclist and therefore likely to be going straight. This is exactly as I had predicted.

In my original post I called out this traffic engineering arrangement as the real crime here. Without trajectory data, assuming a cyclist in that location would be riding through the intersection like one of the strongest people in the neighborhood is entirely reasonable. Not having kept the contradictory trajectory data was an unfortunate mistake.

That explains the mistake that led to the fatal situation. But there was much more in the report. Incredibly it is also revealed that…

…the system design did not include a consideration for jaywalking pedestrians. Instead, the system had initially classified her as an other object which are not assigned goals [i.e. treated as stationary].

Damn. It’s easy to see why the classification system had trouble. Had they never seen jaywalking before? Very strange omissions.

So that’s bad. But there’s more badness. Braking.

"ATG ADS [Automated Driving System], as a developmental system is designed with limited automated capabilities in emergency situations — defined as those requiring braking greater than 7 m/s² or a rate of deceleration (jerk) greater than +/-5 m/s³ to prevent a collision. The primary countermeasure in such situations is the vehicle operator who is expected to intervene and take control of the vehicle if the circumstances are truly collision-imminent, rather than due to system error/misjudgement."

I find this very strange. We had heard scary reports that Uber had turned off the Volvo’s native safety braking features. I figured that would be sensibly replaced by better ones. (Maybe they still are but…!) To limit braking effectiveness is madness. Yes, I understand that you need to not cause a pile up behind you but if your car needs to stop in an emergency, and it can stop, it should stop. To solve the problem of the mess behind, have rearward tailgating detection watching for that. If the rear is clear, there is no excuse to not stop at full race car driver deceleration when it is needed. I would think that letting the limit be whatever the ABS can handle would be best for stopping ASAP. To put ATG’s limit of 7 m/s² in perspective, this paper makes me think that 7.5 m/s² is normal hard braking and ABS does about 8 m/s², with 9 m/s² possible.
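For a feel of what those deceleration numbers mean on the road, here is a quick back-of-the-envelope sketch using the constant-deceleration formula d = v² / (2a). The 20 m/s starting speed is a round number I picked for illustration, not a figure from the report, and the calculation ignores reaction time, jerk limits, and brake build-up.

```python
def stopping_distance(speed_mps, decel_mps2):
    """Distance in meters to stop from speed_mps at a constant deceleration."""
    return speed_mps ** 2 / (2.0 * decel_mps2)


# Assumption: an illustrative 20 m/s (72 km/h), not a number from the report.
ASSUMED_SPEED_MPS = 20.0

# 7.0 is ATG's limit; 7.5, 8.0 and 9.0 are the comparison values mentioned above.
for decel in (7.0, 7.5, 8.0, 9.0):
    distance = stopping_distance(ASSUMED_SPEED_MPS, decel)
    print(f"{decel:.1f} m/s^2 -> {distance:.1f} m")
```

Under these assumptions the gap between the 7 m/s² limit and 9 m/s² is a bit over six meters of stopping distance, which is more than a car length.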

To be fair you can see the problem here. The report says that out of 37 crashes in 18 months by Uber ATG vehicles in autonomous mode, 25 were rear-end crashes (another 8 were other cars sideswiping the AV). Clearly if you’re out with idiot drivers, you need to be watching your backside.

(Let’s round this out because in my original post I wondered if there is "a ton of non-fatal mishaps and mayhem caused by these research cars that goes unreported? Or was this a super unlucky first strike?" The report goes a long way in answering this question. Looks like Uber was very unlucky. The details are interesting: In one striking incident (pun intended) the safety driver had to take control, and swerve into a parked car to avoid an idiot human driver that had crossed over from the oncoming lane. In two more incidents the cars suffered from problems because a pedestrian had vandalized the sensors while it was stopped. WTF? And finally in one single incident that could be blamed on the software, the car struck a bent bicycle lane bollard that partially occupied its lane — we can presume an idiot driver had been there first to bend it.)

But this topic gets weirder.

- if the collision can be avoided with the maximum allowed braking and jerk, the system executes its plan and engages braking up to the maximum limit,
- if the collision cannot be avoided with the application of the maximum allowed braking, the system is designed to provide an auditory warning to the vehicle operator while simultaneously initiating gradual vehicle slowdown. In such circumstance, ADS would not apply the maximum braking to only mitigate the collision.

Wow. That is a very interesting all or nothing strategy. "Oh, hey, I couldn’t quite understand that was a bicyclist and it now looks like only computer actuated superhuman braking can avoid a collision — turning control back to you human. Good luck."
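Here is a rough sketch of that all-or-nothing rule and what it costs. The 7 m/s² limit is from the report; everything else is my assumption for illustration, including the function names, the 2 m/s² "gradual slowdown" rate, and the example speed and distance. It also ignores the jerk limit and any detection latency.

```python
import math

MAX_ALLOWED_DECEL = 7.0       # m/s^2, the limit quoted in the report
ASSUMED_GRADUAL_DECEL = 2.0   # m/s^2, my guess at a "gradual slowdown"


def required_decel(speed_mps, distance_m):
    """Constant deceleration needed to stop within distance_m."""
    return speed_mps ** 2 / (2.0 * distance_m)


def plan(speed_mps, distance_m):
    """The two-branch rule as I read it from the report."""
    if required_decel(speed_mps, distance_m) <= MAX_ALLOWED_DECEL:
        return "brake_up_to_limit"
    return "warn_operator_and_slow_gradually"


def impact_speed(speed_mps, distance_m, decel_mps2):
    """Speed remaining after braking at decel_mps2 over distance_m (0 if stopped)."""
    return math.sqrt(max(0.0, speed_mps ** 2 - 2.0 * decel_mps2 * distance_m))


# Hypothetical scenario: 20 m/s with the hazard 25 m ahead needs 8 m/s^2 to stop.
speed, distance = 20.0, 25.0
print(plan(speed, distance))
print("impact speed, gradual slowdown:", impact_speed(speed, distance, ASSUMED_GRADUAL_DECEL))
print("impact speed, braking at limit:", impact_speed(speed, distance, MAX_ALLOWED_DECEL))
```

In this made-up scenario the collision cannot be avoided within the limit, so the rule falls through to the warning branch. Braking at the permitted 7 m/s² anyway would still have taken most of the speed out of the impact, which is the mitigation the quoted design declines to attempt.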

Note that this has nothing to do with the false positive problem. I’m assuming only situations where the system is more certain of needing emergency braking than a human would be. To say you want to back off potential braking effectiveness because of false positives is addressing the wrong problem.

The report notes one reason the Volvo ADAS was disabled was to prevent the "high likelihood of misinterpretation of signals between Volvo and ATG radars due to the use of same frequencies." Really? That’s a problem? Do they know what else uses the same frequency as a Volvo’s ADAS radar? Another Volvo! I’m a bit worried if this is a real problem. And the Volvo’s ADAS brake module was disabled because it "had not been designed to assign priority if it were to receive braking commands from both [the Volvo and Uber systems simultaneously]." Again, that’s weird. This calls for an OR, right? If either braking system is, for whatever reason, triggered, shouldn’t there be action?
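The OR I have in mind is about as simple as arbitration gets. This is a toy sketch with hypothetical names, and a real brake controller surely has more to worry about (actuator ownership, fault handling, timing), but the basic priority rule could be as small as taking the stronger of the two requests.

```python
def arbitrate_brake(volvo_request_mps2, atg_request_mps2):
    """Return the deceleration to apply given two independent requests.

    Each request is a deceleration in m/s^2, with 0.0 meaning "no braking
    requested". Taking the maximum means either system alone can trigger
    braking, and the stronger request wins when both fire.
    """
    return max(volvo_request_mps2, atg_request_mps2)


print(arbitrate_brake(0.0, 0.0))   # neither system wants to brake
print(arbitrate_brake(6.0, 0.0))   # only the stock system fires
print(arbitrate_brake(3.0, 7.0))   # both fire, the stronger request wins
```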

At least this tragic incident pushed Uber ATG to really fix up their safety posture.

Since the crash, ATG has made changes to the way that the system responds in emergency situations, including the activation of automatic braking for crash mitigation.

Also, several stock Volvo ADAS systems now remain active while in automated driving mode. They even changed the radar frequencies to avoid any chance of confusion there. They also upped the braking g-force limits. All sensible changes.

I consider the main lethal bug to be the resetting of the trajectory information upon reclassification. Apparently, they duly fixed that too.

Overall I never got the impression that Uber ATG was careless or particularly unsafe. I felt like all of their procedures were pretty reasonable given the fact that they are forging ahead into the unknown of our transportation future. There will be bugs and there will be mistakes. Not properly understanding the full depravity of using a phone in a car may be a special Uber blind spot due to the nature of their primary service. But it really did seem like they tried their best to do things safely and with a good backup plan. These technical failures are merely interesting and should not be taken out of context. Maybe the Uber engineers were testing how some dynamic reacts to a known bad system and they normally use a better one (don’t laugh, I’ve done it personally). But if they were doing something like that or even if their system just had bugs that needed to be found and fixed, their engineering team was counting on their safety drivers for safety. And as with the overwhelming majority of automotive tragedies, it was the human in that plan that let everyone down.