Today we learned of the sad and inevitable news that history had been made—one of the autonomous vehicles being tested killed someone.

This was huge news, like a traffic jam where everyone is gawking at the aftermath of a crash. There were tons of stupid clone articles saying almost nothing (e.g.).

If you’re curious and want to read more about it you should read Brad’s excellent coverage and follow-up.

As you can see from the title, my first question was what percentage of the other 102 human beings who will get killed by cars today in the US (based on 2016 stats) will have nothing to do with car driving? Or the 12000 Americans that get injured? Today?
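For anyone who wants to check the "102 today" figure, here is a quick sketch of the arithmetic. The annual total is an assumption on my part (it is the 2016 US traffic fatality count as NHTSA initially reported it, not a number given in this post), and the variable names are just for illustration.

```python
# Assumption: NHTSA's initially reported US traffic fatality total for 2016.
FATALITIES_2016 = 37_461
DAYS_IN_2016 = 366  # 2016 was a leap year


def per_day(annual_total, days):
    """Average number of events per day over the year."""
    return annual_total / days


deaths_per_day = per_day(FATALITIES_2016, DAYS_IN_2016)
minutes_between_deaths = 24 * 60 / deaths_per_day

print(f"{deaths_per_day:.1f} deaths per day")
print(f"one death roughly every {minutes_between_deaths:.0f} minutes")
```

The daily average rounds to 102, which is where the number above comes from.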

I did have some grim amusement reflecting on how, if I am lucky enough to be killed by an autonomous car, the media will speculate about my homelessness. However, when I finally do get killed by an ordinary idiot driver, it will go pretty much unnoticed.

The preliminary reports by the Tempe police chief indicate the car was not at fault. As regular readers know, I’m a bit of a stickler about people brandishing guns or cars, killing someone, and then blaming the victim for doing something "wrong". Let’s set that aside for the moment and respond to the stupid claims that this incident somehow creates a "safety record" (or lack thereof) for autonomous cars and that they are now statistically more dangerous than idiot human-driven cars.

Obviously the sample sizes are stupidly weak. But there is a more serious problem with such thinking which can be summed up with the following rhetorical question: What feature of the autonomous control system (which, by the way, was augmented by a professional human sitting there to prevent this exact thing) was contributory to the tragedy? I’ll give you a hint - I don’t know.
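To put a rough number on how weak a sample of one is, here is a minimal sketch that computes an exact (Garwood-style) 95% confidence interval for a Poisson count. Treating the crash as a Poisson event is an assumption, the function names are made up for illustration, and no mileage figures are used because none are given here.

```python
import math


def poisson_cdf(k, mu):
    """P(X <= k) for a Poisson variable with mean mu."""
    return sum(math.exp(-mu) * mu**i / math.factorial(i) for i in range(k + 1))


def _bisect_increasing(f, lo, hi, iters=200):
    """Find the root of an increasing function f on [lo, hi] by bisection."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_poisson_interval(k, alpha=0.05):
    """Exact two-sided interval for the expected count, given k observed events."""
    search_max = 10.0 * (k + 1) + 20.0  # generous upper search bound for small k
    if k == 0:
        lower = 0.0
    else:
        # Smallest mean for which seeing k or more events is not too surprising.
        lower = _bisect_increasing(
            lambda mu: (1 - poisson_cdf(k - 1, mu)) - alpha / 2, 0.0, search_max
        )
    # Largest mean for which seeing k or fewer events is not too surprising.
    upper = _bisect_increasing(
        lambda mu: alpha / 2 - poisson_cdf(k, mu), 0.0, search_max
    )
    return lower, upper


low, high = exact_poisson_interval(1)
print(f"expected count is plausibly anywhere from {low:.3f} to {high:.2f}")
```

With a single observed event the plausible range for the underlying expected count spans more than a factor of 200, so dividing one fatality by any mileage figure gives a rate that is almost meaningless for comparison.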

Brad and I kind of suspect that this should have been handled better by the car but if this situation was extremely unlikely to be avoided by a human driver in the exact circumstances, it is wrong to attribute the failure to the autonomous driver. In fact, what we have here is a garden variety example of the fact that cars kill people.

If later it comes out that there was some programming or engineering deficiency that should have been addressed that might have prevented the crash, well, that’s not a great sign. But all indications so far look like this poor woman was just one of the 100 who will die today by car because that is just how the whole system is set up.

Another way to look at it is to divide casualties into three categories. Autonomous vehicle at fault, human at fault, and a car crash because cars are fundamentally dangerous (we all, as a civilization, share the blame). I’m thinking this incident goes in the third category further emphasizing the need for the first to exist.

It also struck me as unlucky that the first big incident was a fatality. Or are there tons of lesser incidents we’re not being exposed to? Maybe animal road kills or hitting mailboxes, etc.? In other words, is there a ton of non-fatal mishaps and mayhem caused by these research cars that goes unreported? Or was this a super unlucky first strike? I’ve heard of some sensational and vague reports of problems, but who knows? Perhaps this is the tip of the iceberg. All I can say is that when I went to see autonomous cars in the wild, all the ones I personally saw behaved no worse than humans.

Now we turn from the abstract to the peculiarities of this specific incident. Does this poor woman really want to walk 200m out of her way to cross the street? You can easily say in hindsight she should have done that. Bullshit. In hindsight she should have crossed anywhere else or at a different time. This is because crossing at the light is ostensibly safer, but in real life it is not. That’s where you get cars making unpredictable turns, maybe on red, maybe on green yet into the pedestrian "right of way", maybe with turn signals, maybe not. I would have crossed exactly where she did.

And why is that? Why cross at such a weird place? During the 80 minutes of my commute today that was as a pedestrian, I did the same thing crossing the execrable La Jolla Village Drive. Yes, in the dark too. Sometimes ameliorating someone else’s problem by standing there like a dork for several minutes while autonomous traffic cops make brainless wrong decisions about who deserves special priority treatment is just too much to bear. I understand the victim’s plausible state of mind. The fact that there is a "Use Crosswalk" sign exactly at the scene screams "There are compelling reasons to cross here!"

Why is there a weird bike path in the median right where this poor woman was killed? Very strange. Of course the real and only law, which I managed not to break today, is do not get killed.

It turns out that the crash site is an extremely dangerous place for people with bicycles, but not like this. Just crossing the road is relatively risk free. Sure she died, but try doing exactly what the traffic engineering has provided for cyclists! That takes extreme vigilance not to die. This is because the scene of the crime is where the marked bike lane is just given up on. Oh, uh, here is, uh, where I guess cars need to just drive right into your space. Obviously the onus (not legally, but for all serious considerations) is on the soft target on the bike to not get mowed down. That’s getting increasingly difficult with people stroking their phones instead of driving.

Then check out the sign right before the crash site, perfectly placed to warn of exactly what it was warning of.

Some early reports said that the vehicle was speeding, 40mph in a 35mph zone. At first that really bugged me. (Autonomous cars should not speed.) But checking it myself, it does look like the speed limit is actually 45mph. Frankly, the woman probably got hit because she was confused by this. She was probably expecting the car that hit her to be going 60mph like all the other cars. More information will no doubt be interesting.

There are a couple of other points of interest with this sad spectacle. First I’m glad to see that the requirements for bicycle wheel reflectors (§1512.16) have relaxed a bit. This is because wheel reflectors are stupid. Ironically, this situation may have been one of the few where wheel reflectors could have been helpful (had a human been driving; the AV has night loving LIDAR which doesn’t care). But, as was the case here, wheel reflectors would probably not have been seen in time. Alas, this woman had none, hopefully not reigniting the regulatory fervor for bad wheel reflectors. (Reflective strips on tires are much less stupid. Get those.)

I’m not blaming the victim but as an expert in certain two-wheeled topics it might be a good opportunity for me to reiterate how dangerous I think it is to hang swinging cargo to the handlebars of two-wheeled vehicles. Don’t ever do that. Seriously. But nothing indicates it was contributory in this case. In fact, it seems that the victim wasn’t even riding the bike, despite what that news caption implies.

I think that’s about all I can say about this incident. As I said with the first Arizona Uber Autonomous Volvo crash, the good news is that, unlike when I and my bicycle were hit, this incident will not be a mystery. It is no doubt extremely well documented with recorded telemetry and video. If some defect in the control software caused this fatality, I’m sure it will be fixed. Unlike human caused traffic deaths, with autonomous vehicles, in theory, the exact same stupidity never gets repeated twice. And it’s looking quite likely that there was no particular stupidity on the part of the car beyond what normal people are complacent about.

UPDATE 2018-03-22

Video of the crash has been released.

On one hand it’s not obvious that a normal human driver could have easily avoided this. On the other hand, why this car let itself drive into something which was clearly out on the road and on a trajectory for a collision is a mystery and something Uber should be unsettled about.

The victim was wearing black which is LIDAR’s worst case situation. LIDAR doesn’t need/want ambient light but if it can’t pick up this, then it’s not ready for prime time.

Hmm… Brad’s latest update seems to think there is a rumor that the LIDAR was turned off for some kind of testing. It would have been crazy not to keep it on independently as an auxiliary back up. It will be interesting to see what the exact LIDAR situation was.

It does seem like this video footage is crap quality. Shouldn’t there be 10 cameras on a test vehicle? I personally recorded better forward video on my way to work this morning. Maybe this is just a cheap dashcam like mine and not the cameras they use in their control system. Let us hope.

This video is a perfect demonstration for why wheel reflectors are pointless. While they possibly could have helped here, remember that in this situation, she wasn’t even riding the bike. If she were, then she’d have been in and out of the car’s headlight beam so fast that reacting to wheel reflection would simply not be an option. If you still think it makes sense to have wheel reflectors because of this video, then you must advocate mandatory reflectors on shopping carts, golf bags, backpacks, and shoes, etc. Let me also reiterate that reflective strips on bike tires are smart and should always be used. I personally put reflective tape in between spokes on the inside of the rim. But mandatory spoke-mounted wheel reflectors don’t help people actually riding bikes.

My thought on why the pedestrian was in the wrong place at the wrong time is that the AV was doing the speed limit. You can see another car well ahead. The woman probably waited for a bunch of normal (speeding) traffic to go by and started crossing in the break. And then came the straggling (since the last stoplight) Uber car (doing the speed limit correctly) and surprised her. That’s my best guess at the psychology here.

Additionally, because I know what dashcam footage is like and I have cheated death in very similar circumstances hundreds of times, I have some doubt that this particular car did as well as a normal human paying proper attention. The worst possible case here is if the woman thought she had this car’s attention but didn’t. For example, if she thought that the car was going slower than other cars as I speculate, maybe she thought the car had seen her and was slowing for her. Never take this for granted ever! But that may be what she did.

Also if you go through the footage and you note where on the frame the lane line is (I’ve written software which can do this so I’m pretty attuned to it) you’ll see that near the point of collision, rather than swerving away left like a human who had just seen an obstruction would do, the car actually starts shifting right (into her). My strong suspicion here is that the car was already setting up for this right turn and that behavior may have looked weird and unnatural to the victim. The weirdest thing about the NPC AI cars in Euro Truck Simulator is how they turn corners unnaturally. I can’t tell you how many times I’ve been surprised by an AI car turning when I was sure it would be going straight. I have crashed my truck plenty of times because of this. Humans do drive differently in subtle ways.

The biggest difference between humans and AI however is on fine display with the safety driver recording a perfect advertisement for fully autonomous vehicles ASAP. I timed that the safety driver was looking at the road and paying attention to the driving for about 4s in this video; that leaves 9s where the person is looking at something in the car. If it’s not a phone and it’s some legitimate part of the mission, that’s pretty bad. If it is a phone, well, folks, that’s exactly what I’m seeing on the roads every day in cars that haven’t been set up to even steer themselves in a straight line.

The video shows a situation that an autonomous car should have been able to catch better than a human and one day will. It also highlights the problem with our current car oriented civilization. Instead of thinking of this woman as homeless (as widely reported for no good reason, she looks less homeless than I do when on a bike) and wildly speculating about her inebriation, imagine instead that she is returning from cancer treatments and can’t drive because of her medication. Although there are signs that say "don’t cross here", was she supposed to stand there for the rest of time? At that location, she needed to do some kind of crossing somewhere to get off that (very weird) bike path in the median. Don’t be so quick with victim blaming on this one.

It pains me to be a part of a society that universally accepts this kind of lethal force applied so carelessly. In this incident the lethal force actually killed somebody. The next time it could be me or anyone who drives a car doing the killing. Or me or anyone who ever rides a bike or steps off a sidewalk doing the dying. This is the message that I’ve been annoying all my friends with for the past 30 years. All I can say in my defense is that I’m doing everything I can to improve this unfortunate system.

UPDATE 2018-03-23

People are posting dashcam and mobile phone video of the crash site. Here’s one and another. While it’s difficult to get a read on this accurately, my expertise with dashcams and dashing across busy streets with a bike makes me think this is a more serious failing of the Uber car than the original crash video suggests. It is hard to say and I’m just speculating, but my guess is that the victim thought the slower than normal car must surely have seen her, maybe had already slowed for her, and would make the trivial course correction to avoid hitting her, certainly not make a strange course change towards her. I cannot stress enough how bad all of those assumptions are! My rule as a VRU (vulnerable road user) is that if a car can mow you down, it will mow you down. If you live by that rule, you possibly might not die by it. That’s just advice to humans. Cars, of course, should not be killing people they can avoid. And if the Uber car could have avoided this, which seems increasingly plausible to me, they’ll have to answer for it.

UPDATE 2018-03-24

This article talks about Uber’s AV woes. The part that I found interesting was this.

Uber also developed an app, mounted on an iPad in the car’s middle console, for drivers to alert engineers to problems. Drivers could use the app anytime without shifting the car out of autonomous mode. Often, drivers would annotate data at a traffic light or a stop, but many did so while the car was moving, said the two people familiar with Uber’s operations.

This is very uncool. We know that the system is bad because the system that Waymo uses, according to the article, is obvious and comparatively safe—recorded audio messages.

It would not shock me at all if Uber abandons its autonomous vehicle program. I can think of dozens of companies who have just as much incentive to be a leader in this technology. I appreciate Uber giving it a go, but I’m not surprised they have run into trouble.

UPDATE 2018-03-26

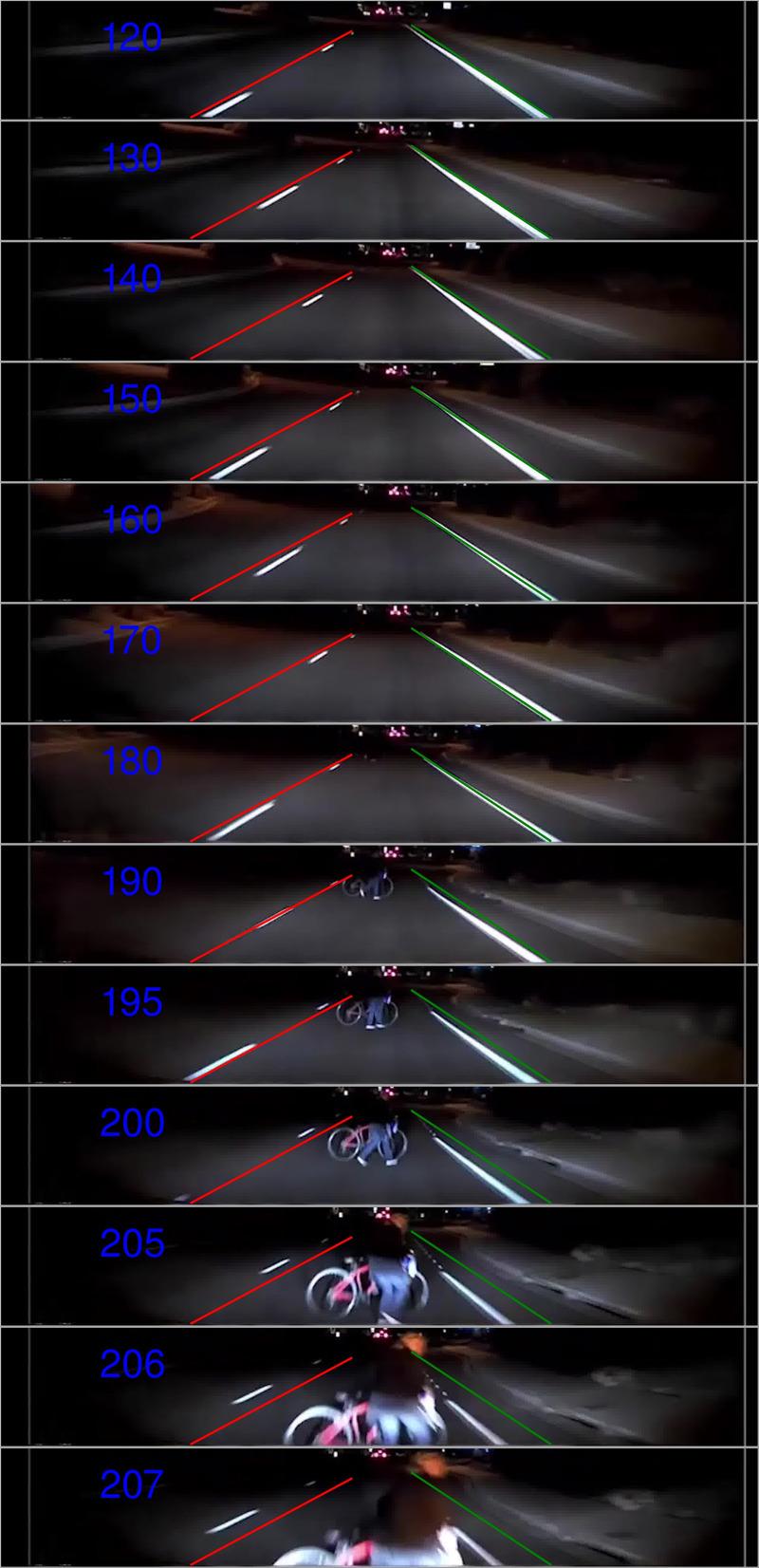

Some people said that they couldn’t see the car drifting from the video. To help make it clear I prepared this by downloading the video, this particular one, and doing some simple things to each frame. I used ImageMagick to add a static line to show both lane lines and then I cropped it down to just the relevant lane part. I did keep the full width so that it was more clear that the lines I added are in the exact same place each time. The idea is that with a fixed camera, they should be indicating where the car is on the road.
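For anyone who wants to script that per-frame step, here is a minimal sketch and not the exact pipeline used for the image. The draw and crop options are the ones listed at the end of this update. Everything else is an assumption: that the frames were already extracted to PNG files, that the ImageMagick 6 `convert` executable is available (ImageMagick 7 calls it `magick`), and the function and directory names, which are hypothetical.

```python
import subprocess
from pathlib import Path

# Draw and crop options copied from this post; the rest is assumed.
OVERLAY_OPTIONS = [
    "-strokewidth", "5",
    "-stroke", "red", "-draw", "line 480,970 890,750",       # left lane line
    "-stroke", "green", "-draw", "line 1395,970 1040,735",   # right lane line
    "-crop", "1920x300+0+675",                                # full width, lane area only
]


def build_command(src, dst, executable="convert"):
    """Return the ImageMagick argument list for one frame."""
    return [executable, str(src), *OVERLAY_OPTIONS, str(dst)]


def annotate_frames(frame_dir, out_dir, executable="convert"):
    """Apply the same fixed overlay and crop to every PNG frame in frame_dir."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for src in sorted(Path(frame_dir).glob("*.png")):
        dst = out_dir / src.name
        subprocess.run(build_command(src, dst, executable), check=True)
        written.append(dst)
    return written
```

Because the overlay coordinates never change between frames, any sideways movement of the painted lane lines relative to the drawn ones reflects movement of the car, assuming the camera is fixed to it.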

This car is clearly veering to the right at the end, into the victim. You can even see in the video that the blinking turn signal is reflecting in the watch-out-for-bikes sign on the right. Hopefully we can get some better answers about this.

Here are the important ImageMagick details if you want to try recreating what I’ve done.

-strokewidth 5 -stroke red -draw "line 480,970 890,750" \

-stroke green -draw "line 1395,970 1040,735" \

-crop 1920x300+0+675 \

UPDATE 2018-03-28

Nice of Brad to make a special mention of my right turn hypothesis. And he further explores the idea that Uber still may not know what happened. His data ditching ideas (upload the most recent log backwards immediately after a crash) are the same as I was thinking.

UPDATE 2018-05-07

Brad reports on some rumors that the car’s systems (sensors? detectors? classifiers?) were not "tuned" well. I’m not sure that is new information in any way. Brad seems to think (maybe that someone else thinks) that the car detected something but considered it an ignorable "false" positive. If that’s the case, I’d love to know what the classifier thought it was about to ram into at full speed with no consequences. Something seems wrong with that theory.

UPDATE 2018-05-25

The NTSB has released a preliminary report which contains some pretty important information. Brad also discusses this.

There is some indication that the perception system did detect an obstruction and was even able to successfully classify it as a bicycle. Of course it wasn’t really a bicycle with respect to what the car should have done about it — it should not have treated a pedestrian with a bicycle like a bicycle.

Then we get into the more serious issues. For some reason, the native Volvo crash collision system was turned off. That might have been ok if there was some kind of audible warning but there didn’t appear to be one.

The serious critical failure it seems was that the safety driver wasn’t looking at the road by design. She was not texting or doing some other homicidal mobile phone nonsense. Apparently she was using some kind of display panel which was part of the mission. To me this was a huge design mistake. I feel sorry for the safety driver who will probably feel disproportionate effects of this crash she really couldn’t have prevented.

Then there is the odd trajectory which is helpfully plotted. It is much clearer now that my assertion that the car was veering right was 100% correct. Still unexplained is why exactly? Setting up for the turn or taking evasive action? The fact that the car only veered right and the pedestrian was hit on the front right corner of the car says that if it was taking evasive actions, it did a terrible job.

And finally, I felt a little bit bad previously mentioning the stupid wheel reflectors which are completely irrelevant. But damn if the NTSB didn’t justify my concern about their bicycle wheel reflector obsession by explicitly pointing out that the bike had no side reflectors. Again, it was not a factor. It is never a factor! The interesting thing is how the NTSB has made it an absurd law (to have them) for decades.

UPDATE 2018-06-23

Brad covers reports that the safety driver was watching TV on the job. I have to say, she was smirking a bit too much for boring telemetry.

It seems like Uber could randomly audit the internal camera we know they have on the driver and check some of that footage occasionally (heck, do a rough ML classifier to help) to see if the drivers are paying attention. If interior footage shows them watching TV, there should be a zero tolerance policy: fired. It does seem that either Uber is extremely negligent for having their driver look at the telemetry console instead of doing the safety driving OR the driver is extremely negligent for watching TV on the job. Yes, Uber could have done better [with the car’s technology], but that’s what they’re presumably researching; dwelling on that is beside the point. What we need to find out is if Uber had bad safety driver policies or a bad safety driver. If the latter, throw her under the bus ASAP.

If Uber does continue with testing, they should definitely talk to the people at Lytx.

UPDATE 2019-11-17

On November 5, 2019, the NTSB released its final report on the crash. I have analyzed it in detail.

The ultimate short summary: autonomous vehicle research is good; idiot human drivers are bad.