I just found this article in MIT’s Technology Review called "Audi’s New AI Rig for Driverless Cars Is… a Toy Car".

This article seems like the kind of dumb thing you’d find in some celebrity-filled "news" magazine or something aimed at children. Are readers of the MIT Tech Review really uncertain about the answer to this posed question: "But why is Audi using a toy when there seem to be full-blown autonomous cars zipping around every forward-gazing city?" Seriously?

It’s just full of dumb stuff: "Obviously the model’s abilities can’t be directly ported to a full-size car…" And you know that how? That is a question I take rather seriously. How do you know that models don’t translate to reality?

The first question (why use models?) seems to be the truly obvious thing here. But do models actually help move progress in reality? I used to be confident that there were smart people working on this question; now, less so.

When talking about "models" I’m not just talking about toy cars. I’m talking about some kind of artificial system that exists to replace a real system cheaply, safely, conveniently, etc. I would be shocked and horrified if companies fielding real autonomous cars on real streets weren’t first testing all software in a simulator. The simulator is a model.

So why are these Audi guys not using software simulators? Perhaps they are. The problem, I realized, is that how your driving software behaves in a simulator may not be how it behaves in real life. How could you possibly relate the two? Well, you could build a simulator of real cars on real roads. You could write some driving software and, if it got through the simulator fine, check whether the simulator was high fidelity by unleashing that software on a real car on real roads. But what if you got the simulator wrong? With a full-size car on real roads, that would be very bad in my opinion.

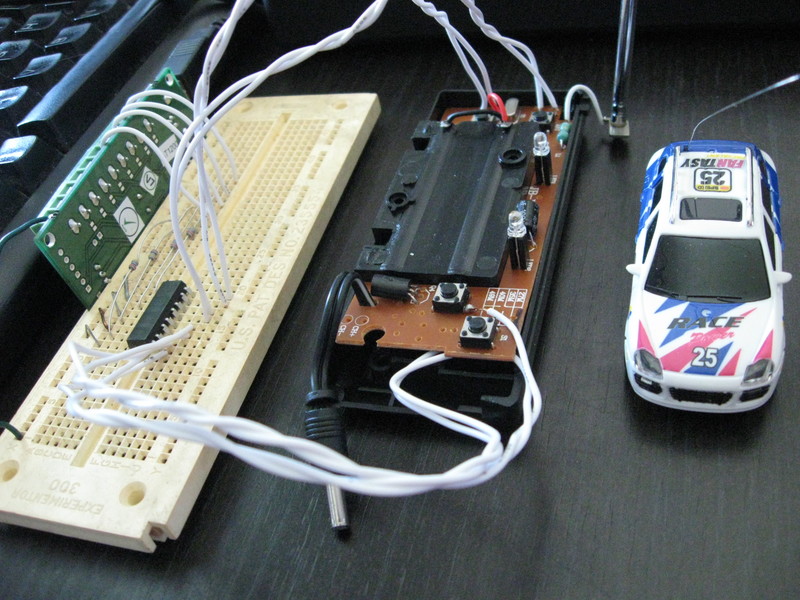

My idea was to write a simulator for a model car environment. Then build the cheap, safe model (toy) car and try out the same driving AI. The objective wouldn’t necessarily be to make good driving AI. In fact, seeing the differences between a software simulator and a scaled reality for terrible driving AI would be illustrative.
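A minimal sketch of what that comparison could look like, with loud assumptions: this is not code from my project. It uses a kinematic bicycle model as the "simulator", and it fakes the model car by rerunning the same model with a made-up steering bias and servo lag, since a real run would come from the physical car. Every name and number here (`controller`, `steer_bias`, `steer_lag`, the 0.25 m wheelbase) is hypothetical. The driving "AI" is deliberately crude; the point is only to measure how far the two runs drift apart.

```python
import math

def step(state, steer, speed, wheelbase, dt):
    """Advance a kinematic bicycle model by one time step."""
    x, y, heading = state
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    heading += speed / wheelbase * math.tan(steer) * dt
    return (x, y, heading)

def controller(state, gain=1.5, max_steer=0.4):
    """Deliberately crude driving 'AI': steer back toward the line y = 0."""
    _, y, heading = state
    steer = -gain * y - heading
    return max(-max_steer, min(max_steer, steer))

def run(steps=200, dt=0.02, speed=1.0, wheelbase=0.25,
        steer_bias=0.0, steer_lag=0.0):
    """Drive the controller; steer_bias and steer_lag stand in for real-world error."""
    state = (0.0, 0.2, 0.0)  # start 0.2 m off the line, pointing along it
    actual_steer = 0.0
    path = []
    for _ in range(steps):
        command = controller(state) + steer_bias
        # First-order lag: steer_lag = 0 means the servo responds instantly.
        actual_steer = steer_lag * actual_steer + (1.0 - steer_lag) * command
        state = step(state, actual_steer, speed, wheelbase, dt)
        path.append(state)
    return path

def max_divergence(path_a, path_b):
    """Largest position gap between two runs at the same time step."""
    return max(math.hypot(a[0] - b[0], a[1] - b[1])
               for a, b in zip(path_a, path_b))

simulated = run()                               # idealized simulator
model_car = run(steer_bias=0.03, steer_lag=0.8)  # stand-in for the toy car
print(f"max divergence: {max_divergence(simulated, model_car):.3f} m")
```

With a real model car, `model_car` would be replaced by positions recovered from the camera, and the divergence figure would say something about the simulator’s fidelity instead of about my invented error terms.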

I started this project in June of 2014 and I got as far as building the physical car and its drive-by-wire controls. Where I got stuck was using the extremely irritating OpenCV to provide the equivalent of sensor feedback. I have the high-speed camera. I know the concepts of OpenCV and it’s all doable, but OpenCV is such a mess with its different versions (multiplied by C/C++/Python), bloated scope, and impressively scant documentation. One day I hope to return to this project. If some people are taking this kind of thing seriously (more than MITTR is), that would be great.

Here is my project page for my modelling project.

This project isn’t just potentially useful for advancing the state of the art in self-driving cars. It’s also affordable, achievable, and should be quite fun to play with.

Update 2017-01-27

I just found this article where the head of Toyota’s Research Institute, Dr. Gill Pratt, says this interesting bit.

> Rod Brooks has this wonderful phrase: "Simulations are doomed to succeed." We’re very well aware of that trap, and so we do a lot of work with our physical testing to validate that the simulator will be good. But inevitably, we’re pushing the simulator into regimes of operation that we don’t do with cars very often, which is where there are crashes, where there are near misses, where all kinds of high-speed things happen.

And he’s also saying practical autonomous cars are not on the foreseeable horizon.

Update 2017-09-09

A good review of AV simulator technology.

And "Right now, you can almost measure the sophistication of an autonomy team—a drone team, a car team—by how seriously they take simulation…" from a long article about AV testing.