When I studied the latest machine learning best practices earlier this year, the experience was like having Sherpas guide me up Mt. Everest. Though that rarefied atmosphere was pretty exhausting, I’m no script-kiddie tourist. I wanted to revisit this mountain unguided and tackle it in my own way.

As you can see, I like metaphors. The first thing I felt needed to happen was to critically scrutinize the primary metaphor of machine learning, neural networks. Every lesson on neural networks starts with a half-hearted neurophysiology lesson which is accompanied by enough hand waving to generate a breeze. The problem, as the instructor makes clear eventually, is that the neural networks of machine learning don’t really have as much to do with the meat in your head as the course name might suggest.

…originally motivated by the goal of having machines that can mimic the brain. …[the reason for learning is] they work really well… and not, certainly not, just because they’re "biologically" [air quotes!] motivated.

Due to all these and many other simplifications, be prepared to hear groaning sounds from anyone with some neuroscience background if you draw analogies between Neural Networks and real brains.

It is often worth understanding models that are known to be wrong (but we must not forget that they are wrong!)

As best I can figure, back when Isaac Asimov started publishing robot stories (about 1950) people got the idea that synthesizing a human-like machine was a credible aspiration. Turing did nothing to dampen such enthusiasm with his famous imitation game test around the same time. The target was drawn and the goal was clear — build a machine that passed for human, cognition included.

As ever more complicated physics continued to prove useful, no phenomenon was considered fundamentally unknowable. Why not the human mind? The famous McCulloch and Pitts paper of 1943 is an uncanny predecessor of modern machine learning texts insofar as it starts with vague neuroscience mumbo jumbo and devolves into mathematical glossolalia by the end.

I think it is now simply a sacred tradition to casually mention some meaningless trivia about the human brain before talking about the kinds of thinking that machines might be able to do. This is kind of like a Sherpa Puja blessing before a climb. No harm done, right?

This book cover features an image of the neurons in your head that you will use to understand machine learning. Nothing more.


But does this digression help anything? I say no. I believe pointless nonsense about neurons wastes the mental space that a metaphor truly useful to beginners could occupy. For example, every computer science professor and every student just filing out of their first machine learning lecture knows what an axon and a dendrite are. To me that is completely wasted educational effort.

How should machine learning be introduced? That’s a good question and I don’t pretend to have the optimal answer. All I know is that when I was learning this stuff, I felt cheated by the neuro preliminaries and I struggled to make sense of what was really going on in that context. Later, after getting some experience with various neural network architectures, I tried to come up with a better way for beginners to understand what modern "neural" network machine learning is all about.

I have come up with a physical analogy that I think is illustrative and educational even if it is not perfect. It is certainly more helpful than axons and dendrites! By making the system physical I feel like some intuition can be applied. My analogy can take many forms but let’s start with this simple one. It’s a bit silly but bear with me.

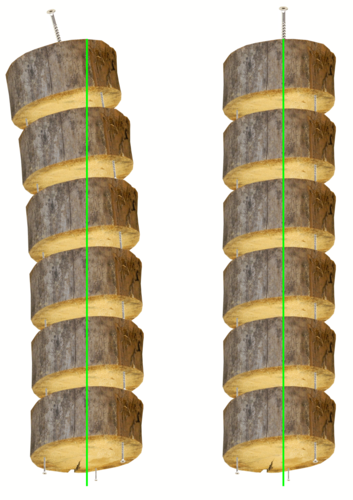

Imagine a telephone pole needs to be replaced. You don’t have a spare straight pole but there is a natural tree-shaped tree nearby that needs to be cleared anyway. A crew cuts down the tree with a chainsaw and then cross cuts the trunk into logs.

You stack the logs vertically end to end at the site of the old pole and when you’re done the new pole is very precarious of course. Let’s not worry about that; we’ll assume it eventually gets wrapped in fiberglass or something. The real problem here is that the top of the pole is not lined up with the bottom of the pole. You disassemble the stack of logs and drive three 40mm screws 20mm deep into the end of each log forming a triangle.

If you’re very accurate with that, you should be able to stack the logs again, resting on the screws now, and a plumb line from the top will not have moved any closer to the base.

If this mechanical system is a metaphor for machine learning, then the thing we’re trying to "learn" is how much adjustment we need to apply to each of the leveling screws in each log to make the top line up with the bottom.

This is obviously a hard problem. If there are 6 logs with three screws each, then there are 18 screws that can be adjusted. The way any particular screw at any particular level gets adjusted has different effects depending on what level it’s at and what the others are doing. To adjust these screws you really have to disassemble the system from the top.

If you don’t know much about machine learning, this is a good start. The goal is to bring the top of the log tower to a target position (over the base) by changing the settings of some adjustment screws. In machine learning each log would be a "layer" of the network and the more logs, the "deeper" the network. Logs between the top and bottom log are called "hidden". In theory, there are settings where the pole could snake up in a wild curve as long as the top made it back to the same horizontal position as the base. As with machine learning, the pole is built from the bottom up. You know the location you put the first log. That is the "input" and when you’re done stacking, you can check where the top ends up. You then have to disassemble the log tower from the top down to make adjustments to the leveling screws.

This disassembly corresponds to the "back propagation" operation in machine learning, though the real thing is a bit more sophisticated. In my log example, I don’t know exactly how you would work out how to adjust the screws. You’d pretty much have to guess and use intuition, something the mathematical version cleverly avoids. The screws are like the "weights" in machine learning, just adjustable parameters that make the structure do what it’s going to do. In proper machine learning back propagation, the whole point is that you can calculate from the top’s error down each level and figure out how much you should be turning each screw in the system. You do those calculations, make the adjustments, rebuild the pole, and check the new error. Repeat until it’s lined up.
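For readers who prefer code to chainsaws, here is a minimal sketch of that build, measure, adjust loop. It rests on several assumptions of mine that are not part of the story above: the pole is flattened to two dimensions, the three screws on each log are collapsed into a single tilt angle, each log has a made-up natural crookedness, and the error is the squared horizontal offset of the top. Every name here (`build_pole`, `screw_gradients`, `straighten`, `LOG_LENGTH`) is hypothetical.

```python
import math

LOG_LENGTH = 1.0  # metres per log; an assumed value for illustration


def build_pole(crook, tilts):
    """Forward pass: stack logs bottom-up; return each log's lean and the top's offset."""
    angle = 0.0   # accumulated lean from vertical, in radians
    offset = 0.0  # horizontal distance of the top from the base
    angles = []
    for natural_bend, tilt in zip(crook, tilts):
        angle += natural_bend + tilt  # a log inherits the lean of everything below it
        offset += LOG_LENGTH * math.sin(angle)
        angles.append(angle)
    return angles, offset


def screw_gradients(angles, offset):
    """Backward pass: walk down from the top, computing d(offset**2)/d(tilt) per log."""
    grads = [0.0] * len(angles)
    running = 0.0  # how far the top moves per radian of tilt at this level
    for i in reversed(range(len(angles))):
        running += LOG_LENGTH * math.cos(angles[i])
        grads[i] = 2.0 * offset * running
    return grads


def straighten(crook, steps=200, rate=0.005):
    """Repeat: build the pole, measure the error, turn every screw a little."""
    tilts = [0.0] * len(crook)
    for _ in range(steps):
        angles, offset = build_pole(crook, tilts)
        grads = screw_gradients(angles, offset)
        tilts = [t - rate * g for t, g in zip(tilts, grads)]
    return tilts, build_pole(crook, tilts)[1]
```

Calling `straighten([0.05, -0.02, 0.08, 0.03, -0.04, 0.06])` on six slightly crooked logs should walk the top back over the base. Notice that the bottom log ends up with the largest gradient, because tilting it swings everything stacked above it. That is the "different effects depending on what level it’s at" problem, handled by arithmetic instead of guesswork.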

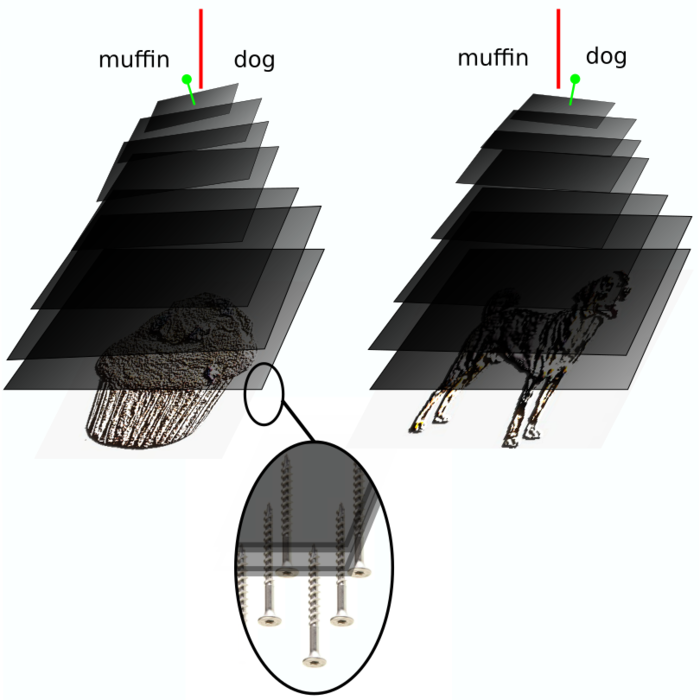

Ok, now that you’ve pictured that, let’s expand that thought experiment. Now imagine that you have a similar system but instead of logs, you have a big sheet of 10mm thick rubber, maybe 1m square. Every 5cm on a 20 by 20 grid you have a leveling screw set into it just like the 3 in the logs. That’s 400 leveling screws that can be adjusted. And on top of that sheet of rubber, you put another one just like it but this one will be set off by the height of a screw set on a grid every 10cm, so 100 screws on this level. Now imagine carrying on like this for another 6 layers or so each time reducing the number of screws and even the area of rubber needed. Finally you get to the top layer and it has a single screw sticking out of it. That final screw is near the ceiling and there is a line painted on the ceiling.

Got all that? Here’s the interesting part. Let’s say you have a bunch of low relief sculptures of either dogs or muffins (or whatever). If you can somehow slide these sculptures one at a time under your big pile of rubber and screws, what you would like to happen is that when a dog carving is under the pile, the top screw points to the right of the line on the ceiling, and when a muffin carving is under the pile the top screw points to the left of the line. That way, if you didn’t know if a relief sculpture was a dog or a muffin, by putting it under this giant mess, you could read the top screw and learn that system’s interpretation. The key trick to machine learning is how the hell are we going to correctly set all those damn screws (thousands, maybe millions!) to pull off this kind of ridiculous miracle. As unlikely as my physical analogy makes it sound, it turns out that when the adjusting screws are mathematically based, the layers are designed just so, and massive GPU power is pumped into calculating the number of turns each screw needs, this kind of crazy contraption works!
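To make the rubber pile slightly less mystical, here is a toy sketch in plain Python, with no GPU required. Everything specific in it is an assumption for illustration: a "carving" is just four made-up height readings, there is a single hidden sheet with three screws' worth of units, and the top screw is squashed into a number between 0 and 1, where above 0.5 means "right of the line, dog". The names `make_carving`, `forward`, `train`, and `read_pointer` are hypothetical, and real systems are vastly larger.

```python
import math
import random


def make_carving(kind, rng):
    """Made-up 4-point height profile: dogs are tall on the left, muffins in the middle."""
    base = [1.0, 0.8, 0.2, 0.1] if kind == "dog" else [0.2, 0.9, 0.9, 0.2]
    return [h + rng.uniform(-0.1, 0.1) for h in base]


def forward(net, x):
    """Bottom-up pass: carving -> hidden sheet -> top screw reading in (0, 1)."""
    w1, b1, w2, b2 = net
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    z = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, 1.0 / (1.0 + math.exp(-z))


def train(data, hidden_size=3, epochs=200, rate=0.1, seed=0):
    """Adjust every screw a little after each carving; return the net and per-epoch loss."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden_size)]
    b1 = [0.0] * hidden_size
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            hidden, p = forward((w1, b1, w2, b2), x)
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)  # keep log() finite
            total -= y * math.log(p_safe) + (1 - y) * math.log(1.0 - p_safe)
            d_top = p - y  # error measured at the top screw
            for j in range(hidden_size):
                # pass the error down one level before touching this level's screws
                d_hidden = d_top * w2[j] * (1.0 - hidden[j] ** 2)
                w2[j] -= rate * d_top * hidden[j]
                for i in range(n_in):
                    w1[j][i] -= rate * d_hidden * x[i]
                b1[j] -= rate * d_hidden
            b2 -= rate * d_top
        losses.append(total / len(data))
    return (w1, b1, w2, b2), losses


def read_pointer(net, carving):
    """Read the top screw: right of the line is 'dog', left is 'muffin'."""
    return "dog" if forward(net, carving)[1] > 0.5 else "muffin"
```

To try it, build a shuffled list of `(make_carving(kind, rng), label)` pairs with label 1 for dogs and 0 for muffins, pass it to `train`, and slide a fresh carving under the pile with `read_pointer`. The average loss per epoch should fall as the screws settle.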

I’m not suggesting anyone rush out and try to construct a dog detector out of sheets of rubber! This is just to give you a very rough physical intuition of what the hell is going on with machine learning methods before you tackle the mathematical magic which makes it all possible. You may find this conception of what machine learning is like to be implausible, pointless, and completely erroneous. Well, that’s par for the course here! Once I started thinking of machine learning like this, I was finally able to get a solid understanding of what the math was doing.

For people with no actual interest in applying machine learning themselves, this analogy might be interesting just to see what the AI hype is really all about and the implausible-seeming model it’s based on. It may be implausible but it does often make uncannily true predictions. I’m not sure this miraculous lack of complete failure has anything to do with human cognition, but I’ll allow that it may be giving us hints about that too. Let’s just not start with that!

UPDATE 2019-10-23

Reminded of this article while cutting firewood.