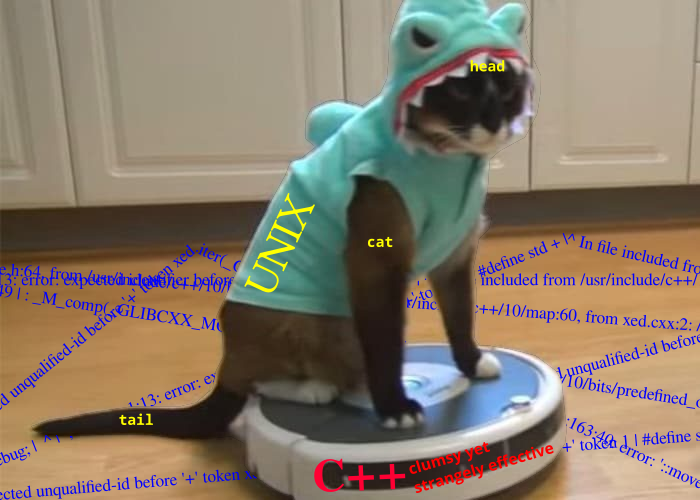

Recently I’ve been hammering away deep in the software mines working on a big project in C++. Today I came across a situation that really was begging for aggressive STL tricks. (Uh, I mean "the library formerly known as STL". Today the Standard Template Library has been wrapped up into the C++ Standard Library proper. But I’m old and started programming C++ before the book I used to learn it from had heard of STL. Modern C++ is fine. Everything is fine.)

Normally I try to avoid the typically gruesome syntax and mind-bending concepts found in C++'s esoteric features du jour, but in this case I really needed a weird container to be sorted in a weird way and then made unique in a different weird way. It turns out that is something the algorithm features are especially good at. I gave it a try and it was really useful, worked very well, and saved me a ton of trouble.

Hmm… Sorting and then removing duplicates… Hmm… Well, as a unix partisan I just couldn’t help but think of how easy this very specific task is to do extemporaneously at a Bash prompt with standard unix utilities. That got me thinking: I wonder how the speed of C++ compares to command line unix? Since I’d just done the hard part of getting it to work in C++, an experiment seemed pretty easy.

First, let’s get some random number test data to sort and de-duplicate.

$ for N in {1..100}; do echo $(( $RANDOM % 999 )) >> /dev/shm/rand_nums100; done

$ for N in {1..1000000}; do echo $(( $RANDOM % 999 )) >> /dev/shm/rand_nums1000000; doneHere I’m making two files, one containing 100 random numbers between 0

and 999, and another file containing one million such numbers. This

way we can check how well this scales. Note the path is /dev/shm/ so

this test data lives in a virtual file system in shared memory; the

goal is to eliminate any hardware latency or access issues like that.

For the C++ version here is the program I created which uses the

std::sort() and std::unique() functions to do the job.

#include <iostream> #include <string> #include <vector> #include <algorithm> using namespace std; int main () { vector<string> lines; // Also tried `list` container. Surprisingly similar. string tmp; while (getline(cin,tmp)) lines.push_back(tmp); // A decent example of linewise standard input reading. sort(lines.begin(),lines.end()); // *Sort*. Count stays the same so no need to seriously rearrange. vector<string>::iterator unique_resize_iter; // This iterator is needed to resize after deletions from the container. unique_resize_iter= unique(lines.begin(),lines.end()); // Remove duplicates using *unique* algorithm function. lines.resize(distance(lines.begin(),unique_resize_iter)); // Repair container so it properly takes effect. for (string s:lines) cout<<s<<endl; return 0; }

What could be simpler, right?

This can be compiled with something like this.

g++ -o stl_vs_unix stl_vs_unix.cxxNow we can see how fast it runs. I’ll send the output to wc just to minimize terminal output.

$ time ./stl_vs_unix < /dev/shm/rand_nums100 | wc -l

94

real 0m0.008sThat’s 8 milliseconds and you can see that from 100 random numbers 6 were duplicates that got removed (leaving 94 counted lines). That’s pretty decent.

How does unix do?

$ time sort /dev/shm/rand_nums100 | uniq | wc -l

94

real 0m0.007sThe first thing to note is that I wasn’t kidding about how easy this is — that’s pretty easy! But the time is respectable too. That’s encouraging.

Ok, what about the bigger data set?

$ time ./stl_vs_unix < /dev/shm/rand_nums1000000 | wc -l

999

real 0m1.598sHere we can see that from a million 3 digit numbers, most were obviously duplicates with all 999 possible random numbers appearing in the output. This one took about 1.6 seconds using the C++ program.

And the unix style of kung fu?

$ time sort /dev/shm/rand_nums1000000 | uniq | wc -l

999

real 0m0.432sThe unix command line approach got through that 3.7x quicker!

In the past I have had trouble convincing people that before getting into software engineering heroics (or worse, buying a new cluster and leaning into global warming), it’s always best to just use simple unix tools first. They are often astonishingly good! I realize that C++ isn’t the absolute superlative speedy approach and using this kind of standard library function probably is not even the most high-performance way to do this job in C++. That said, this is the canonical idiomatic modern C++ way, and C++ is well regarded for performance. For example, a lot of very serious high performance software is written in it — e.g. game engines.

Before seeing the data, I could have been persuaded that the high octane bespoke C++ program would do better as the job size increased; trivial tasks axiomatically take trivial time no matter how you do them. However, it very much looks like my bias favoring the unix command line (e.g.) may sometimes be justified. If a task seems like it would be ideal for solving with unix, it very likely is!

UPDATE 2021-11-26 I’ve just been reminded that there’s another unix

command line way to do this job. Instead of using the uniq command

to de-duplicate, it turns out that the sort command actually has a

built-in functionality that removes duplicates as it sorts. This is

using the sort -u option. That is usually the correct approach. I

just did some tests and it turns out that is 13% faster for the

million items of input. But interestingly it is not necessarily a slam

dunk. While the sort command should be able to quickly do the easy

job of comparing values for de-duplication, we must remember that by

piping any of that task off to another process, the power of unix

allows us to do both tasks in paralllel. It may turn out that for a

large enough input with difficult comparisons that sort|uniq is

actually faster (on a multi-core machine) than sort -u. The

industrial engineering moral of the story is, always do your own tests

with your own tasks! I talk more about some subtleties of these

commands over on my unix notes: http://xed.ch/h/unix#sort and

http://xed.ch/h/unix#uniq — there I demonstrate why having a

separate uniq program is actually necessary in the first place.

And just for fun, here’s a Python version.

#!/usr/bin/python import fileinput data= list() for l in fileinput.input(): data.append(l) for o in sorted(set(data)): print(o,end='')

Here’s that run.

$ time ./sort_uniq.py /tmp/rand_nums1000000 | wc -l

999

real 0m1.055s