I have been interested in Probabilistic Robotics by Sebastian Thrun, Wolfram Burgard, and Dieter Fox since I first heard about it while learning about the rocket science of Kalman filters from Professor Thrun himself in last year’s grand educational experience with Udacity (a company started by, yes, Sebastian Thrun). I was finally able to put my employer’s library to use and borrow this massive and expensive book. I found the topic to be interesting and important enough that I wanted the hardcore experience and this is definitely it!

A good summary of the book’s mission is on page 488:

"Classical robotics often assumes that sensors can measure the full state of the environment. If this was always the case, then we would not have written this book! In fact, the contrary appears to be the case. In nearly all interesting real-world robotics problems, sensor limitations are a key factor."

And we learn that it is not only the sensors that fail to tell the truth; it turns out that actuators don’t do exactly what you tell them either. Oh, and the maps you have or make are never quite right. These are the problems that this book tries to come to grips with.

Another way to think of it is that the existence of this book explains why a Roomba navigates the way it does (randomly). Or put another way, "stupid" easy navigation may be just as smart as fiendishly difficult navigation if you can get away with it. This book is not looking for the easy solution!

A big topic was SLAM, which stands for Simultaneous Localization And Mapping (note Professor Thrun’s DARPA Challenge car, Stanley, in the SLAM Wikipedia page). This is where the robot is dropped into a place and has to figure out what’s there and how to reliably not hit it, even when all sensors are a bit wonky. This is fine, but I think there is more to this topic than the book even thought about (despite covering EKF SLAM, GraphSLAM, SEIF SLAM, multi-agent SLAM, etc). SLAM in rooms or controlled indoor environments, which the book spent a lot of time on, may be necessary for SWAT teams and for shutting down a badly malfunctioning nuclear reactor, but for everybody else (and the nuclear plant actually), just mount cameras on the walls! This may not be a terribly hard problem unless you really want it to be! But hey, what do I know?

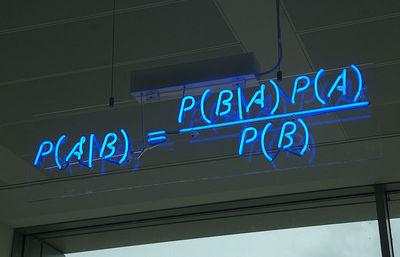

If I had to provide a one word answer to all of the problems this book worries about, I would say: Bayes. Apparently using Bayes' theorem early and often can really help with these tricky problems. Exactly how that is done is the hard part.
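To make "using Bayes" a bit more concrete, here is a minimal sketch of a single measurement update over a discrete set of positions. It is my own toy illustration, not code from the book: the five-cell corridor, the `P_HIT` and `P_MISS` sensor probabilities, and the function names are all assumptions made up for the example.

```python
def bayes_update(prior, likelihood):
    """Return the posterior, proportional to likelihood * prior, normalized to sum to 1."""
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    total = sum(unnormalized)
    if total == 0:
        raise ValueError("measurement has zero probability under the prior")
    return [u / total for u in unnormalized]


# Hypothetical corridor: the robot is in one of five cells, two of which have doors.
world = ["door", "door", "wall", "wall", "wall"]

# Assumed sensor model: the reading matches the true cell type with weight 0.6,
# and disagrees with it with weight 0.2 (the update normalizes, so only the ratio matters).
P_HIT, P_MISS = 0.6, 0.2


def measurement_likelihood(world, reading):
    """Likelihood of the reading for each cell, under the assumed sensor model."""
    return [P_HIT if cell == reading else P_MISS for cell in world]


prior = [0.2] * 5  # no idea where the robot is
posterior = bayes_update(prior, measurement_likelihood(world, "door"))
print(posterior)
```

After one noisy "door" reading, the belief shifts toward the two door cells without ruling out the others, which is the whole point: the sensor is never fully trusted, only weighed.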

Page 233 quotes (Cox 1991): "Localization has been dubbed the most fundamental problem to providing a mobile robot with autonomous capabilities." It is definitely hard, but if they still believe that after doing some work on the autonomous car problem among idiot human drivers, then I think they need to take another look at things.

Every chapter concluded with (some freakishly microscopic print in) a section called "Bibliographical Remarks". I found this interesting because they did a decent job of summarizing the history of this weird little corner of robotics math nerdery. However, many times the saga would build up until the final word on the topic was Thrun et al. That is fine, but I sometimes wondered if I was reading a Thrun biography. On page 144, we are reminded that, "Entire books have been dedicated to filter design using inertial sensors." So I suppose it could be even more painfully specialized than Sebastian’s greatest hits, which are genuinely impressive.

I was quite frustrated to read this on page 329, "Little is currently known about the optimal density of landmarks, and researchers often use intuition when selecting specific landmarks." It goes on to say, "When selecting appropriate landmarks, it is essential to maximize the perceptual distinctiveness of landmarks." I’m a big proponent of making these gruesome algorithmic/computation problems as easy as possible. Yet the book never mentions eliminating the uncertainty through environmental augmentation. It would be like fretting over how hard it is to train people to memorize all street names and features because putting up street and road signs would be expensive. But, hey, my little thoughts on how horrifically hard problems might be simply averted with a dirty trick are probably not appreciated by people whose job it is to solve hard problems.

There were some fun bits of knowledge that I had never heard of. For example, the fact that terramechanics is a thing is interesting to me. And I learned that the Aurora CEO Chris Urmson once worked on an autonomous robot to search for meteorites in Antarctica, which is very cool (in many ways). That reminds me of my concept for autonomous archaeology robots, which also would use a lot of the ideas from this book to make very accurate maps of where items were found.

I don’t think that this book is unusual for a graduate level textbook, but wow, what a crappy way to teach something! The first thing to complain about is that pseudocode equals pseudoquality. As it says on page 596, "This algorithm leaves a number of important implementation questions open, hence it shall only serve as a schematic illustration." The "algorithms" were to me useless. Implementing them from the opaque pseudocode scribbled with frantic Unicode hand waving seemed no easier than thinking of a decent algorithm myself directly in code. It’s like betting someone that you climbed Mt. Everest but instead of just showing them a picture of you on the summit, you say that they’ll need to climb the mountain too to see if there really is proof of the deed up there. Just write real code! This isn’t probabilistic abstract thinking! Everyone who looks at this book will want this technology on a machine that runs software. Showing some real code could highlight good practices throughout, easily demonstrate algorithm effectiveness, and easily prove the algorithms even work at all.

I was really not delighted with the gruesome math and just unnecessarily harsh, but no doubt typical, syntax throughout. It certainly was great practice for slogging through such muck. I definitely feel more prepared to read obfuscatory stuff like this in the future. It was so baroque that it was hard for everyone to keep it straight. In Table 16.3-7, for example, there is Q(u) = 0 but then in the text on the next page it talks about it as "all Qu's." Yuck. I did not spot a rho, nu, iota, zeta, or upsilon, though I could have overlooked them during my quick census. All other Greek letters made an appearance, at least half in both forms! Did I mention that just writing software, a language that all roboticists must speak, would be much better?

Sometimes even the algorithm outline was not especially encouraging. On page 366, for example, "A good implementation of GraphSLAM will be more refined than our basic implementation discussed here." Gee thanks!

With this intense level of math, theory, and algorithms, I feel like mentioning real world robots at all may be premature. I got the feeling that all of this math would be more intelligently applied to abstract computer models only, and that talking about real applications just muddles things. I was even reminded of automata curiosities, and that connection is finally explicitly mentioned (referring to Rivest and Schapire 1987a,b) in the final paragraph of the book’s text!

I sure wish I had this book’s TeX source because I would love to search and count the occurrences of these words: "straightforward", "obvious", "of course", "simply", "easily", "clearly", "standard simplification", etc. I would bet $50 that some condescending word like that appears more than 600 times, or on average at least once per page. I’ll leave that as "an exercise for the reader". Ahem. Provide some source code proof that this stuff works and then I’ll start feeling like I’m the dumb one for not having implemented it!
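In the spirit of showing real code, here is a minimal sketch of how that census could be run if the text were available as a plain string. I don't have the source, so the sample sentence is made up, and `count_phrases` is just my own hypothetical helper name.

```python
import re
from collections import Counter

# The phrases listed above; extend as needed.
PHRASES = [
    "straightforward",
    "obvious",
    "of course",
    "simply",
    "easily",
    "clearly",
    "standard simplification",
]


def count_phrases(text, phrases=PHRASES):
    """Count case-insensitive, whole-word occurrences of each phrase in text."""
    lowered = text.lower()
    counts = Counter()
    for phrase in phrases:
        # \b keeps "obvious" from also matching inside "obviously".
        pattern = r"\b" + re.escape(phrase) + r"\b"
        counts[phrase] = len(re.findall(pattern, lowered))
    return counts


# Made-up sample text, standing in for the book's source.
sample = "Clearly, this is straightforward. Of course it is simply obvious, and obviously easy."
counts = count_phrases(sample)
print(counts)
print("total:", sum(counts.values()))
```

Whole-word matching is a deliberate choice here, so variants like "obviously" would need their own entries in the list; real TeX source would also want its markup and line-break hyphenation stripped first.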

I’ll make a list of errors I found to give you a sense of the production quality in general.

- p167 "…pre-cashing…"
- p213 "…represents uncertainty due to uncertainty in the…"
- p267 "…the type [of] sensor noise…"
- p281 "…can easily be described [by] 105 or more variables."
- p370 "The type map collected by the robot…" [type of map?]
- p388 "…SEIF is an … algorithm…for which the time required is … logarithmic is data association search is involved."
- p403 "Here mu is a vector of the same form and dimensionality as mu."
- p411 "…sometimes the combines Markov blanket is insufficient…"
- p414 "…but it [is] a result…"
- p419 "Once two features in the map have [been] determined to be equivalent…"
- p433 "…this techniques…"
- p433 "…to attain efficient online…"
- p460 "…advanced data structure[s]…"
- p480 "…fact that [it] maintains…"
- p487 "…running the risk of loosing orientation…"
- p525 "…the vale function V2 with…"
- p550 "xHb(x)"
- p554 "…when b_ is a sufficient statistics of b…"
- p592 "MCL localization" is redundant.

Really, that’s pretty good for such a massive tome (in English by German dudes, also Hut ab).

I’m glad I read this. It was definitely an experience, and I feel more like the grad students who have been hazed in this way. But if you really want to learn this stuff for practical applications, I’d say just pay Sebastian for Term 2 of the Advanced SDCarND program and save yourself a lot of trouble. And get some working code instead of just a mental workout!