Today UCSD’s Contextual Robotics Institute hosted a conference called "Intelligent Vehicles 2025" and I was lucky enough to be able to sign up and attend. Yay! What a great experience!

Here’s Intel asking the exact same question I keep asking.

Yes, that is me in the front row.

I have long been interested in TuSimple, which is not only pursuing an intelligent strategy similar to that of Otto but is also doing so from San Diego (and China and Arizona). I not only saw an excellent presentation by their CTO, Xiaodi Hou, but I also got a chance to have an excellent discussion with him in person. I learned that they have about 170 people in the company, about 50 of them in San Diego. Their strong ties to Chinese companies and backers did sound pretty shrewd from a global perspective. I’ve seen nothing to make me believe that TuSimple is lagging behind Otto.

Qualcomm was a major sponsor and I enjoyed the talk by their R&D Engineering VP. I didn’t hear anything too surprising about their approach. It all seemed like textbook Udacity material. They like semantic segmentation a lot (as do I) and are proud of some proprietary deconvolution architectures.

Pretty much everyone mentioned alongside "San Diego" on my http://xed.ch/av page had someone present to participate. This included a more detailed explanation of that crazy stuff with the car seat costume. I even got to see the car seat costume in person. I learned that UCSD has recently started to build up its robotics reputation. I have generally been of the opinion that when someone at UCSD used the word "robot", it was pretty much complete nonsense. But that seems to be changing. In 2016 Henrik Christensen was poached from Georgia Tech, where he was the founding director of the Institute for Robotics and Intelligent Machines (IRIM).

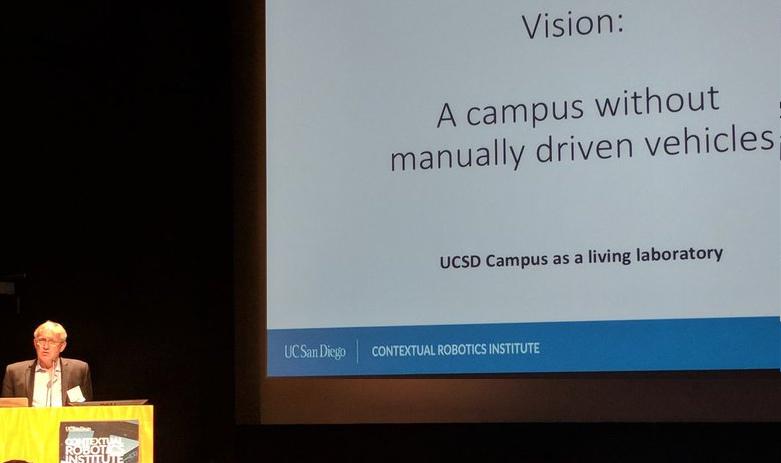

I learned that #UCSanDiegoRobotics is now a thing. It is specifically the Contextual Robotics Institute which hosted this event. But wait, it gets better. I was blown away to see Henrik announce this!

Wow! There’s actually a real plan for autonomous vehicles on UCSD’s campus! I’d sort of given up on San Diego for non-lethal machinery but perhaps I should reconsider.

The most technical talk turned out to be the most exciting to those in the know. Gabriel Rebeiz is a researcher involved in the extreme arcana of radio wave magic. He designs chips and antennas and chips containing antennas. He cheerfully revealed the good news to us that his research into phased array radar is going well. Very well. Basically a phased array radar (which I predict you will be hearing more about) is a radar that you can aim with no moving parts. Currently most lidar must spin around with a motor to shoot the laser at all the places the laser needs to check. But with this kind of solid state approach, the radar sensor package is not just extremely robust, but it can be aimed. This means that the radar can now scan for proximity just like lidar. In many other ways, it is superior. It works in bad weather and bright light and glare and fog, etc. He said that their prototypes have been able to identify human forms at a distance comparable to what lidar can do. He pointed out that this sensor could count multiple humans at this range. He then hinted that it probably could count a duck and her baby ducks. He said the current research is to work on aiming the beam in two dimensions so that, instead of scanning horizontal arcs, an entire 3D map of the surrounding empty space could be scanned with one chip and no moving or exposed parts. He hinted this chip would cost about $.15 to produce (non-aiming automotive radar units with the same kind of package are already less than $100).
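For the curious, here is roughly how aiming with no moving parts works in a phased array. This is only a textbook sketch, not anything Rebeiz showed: I'm assuming a simple row of evenly spaced antenna elements (a uniform linear array) at half-wavelength spacing, and the function names are my own. Each element gets a slightly different phase delay, and the signals add up constructively only in the direction you chose.

```python
import cmath
import math


def steering_phases(num_elements, spacing_wavelengths, steer_deg):
    """Phase shift (radians) for each element so the beam points at steer_deg.

    Assumes a uniform linear array; spacing is given in wavelengths.
    """
    theta = math.radians(steer_deg)
    return [
        -2 * math.pi * spacing_wavelengths * n * math.sin(theta)
        for n in range(num_elements)
    ]


def array_factor(phases, spacing_wavelengths, look_deg):
    """Normalized array response toward look_deg (1.0 means full gain)."""
    theta = math.radians(look_deg)
    total = sum(
        cmath.exp(1j * (2 * math.pi * spacing_wavelengths * n * math.sin(theta) + p))
        for n, p in enumerate(phases)
    )
    return abs(total) / len(phases)


def peak_direction(phases, spacing_wavelengths):
    """Scan -90..90 degrees in 1 degree steps and return the strongest direction."""
    return max(range(-90, 91), key=lambda a: array_factor(phases, spacing_wavelengths, a))


# Hypothetical example: 16 elements, half-wavelength spacing, aimed 20 degrees off center.
phases = steering_phases(16, 0.5, 20)
print(peak_direction(phases, 0.5))
```

Changing the steering angle only changes the list of phases, which is why the whole thing can live on a chip. Steering in two dimensions, as described in the talk, would need a grid of elements rather than a single row.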

That was a lot of good news today!