On November 5, 2019, the National Transportation Safety Board released its final report on the March 18, 2018 fatal collision between an Uber Advanced Technology Group (ATG) research autonomous vehicle and a pedestrian. The report can be found here. I wrote extensively about this important incident last year in a post with critical updates.

With the new information it has been interesting to revisit this important historical event. Let’s review the questions we wanted answered back then and can answer now. Quoting myself from my 2018-03-22 update…

The biggest difference between humans and AI however is on fine display with the safety driver recording a perfect advertisement for fully autonomous vehicles ASAP. I timed that the safety driver was looking at the road and paying attention to the driving for about 4s in [the limited driver facing] video; that leaves 9s where the person is looking at something in the car. If it’s not a phone and it’s some legitimate part of the mission, that’s pretty bad. If it is a phone, well, folks, that’s exactly what I’m seeing on the roads every day in cars that haven’t been set up to even steer themselves in a straight line.

In my update (2018-06-23) responding to reports that the driver was watching videos I said this.

It does seem that either Uber is extremely negligent for having their driver look at the telemetry console instead of doing the safety driving OR the driver is extremely negligent for watching TV on the job. Yes Uber could have done better [with the car’s technology], but that’s what they’re presumably researching; dwelling on that is beside the point. What we need to find out is if Uber had bad safety driver policies or a bad safety driver. If the latter, throw her under the bus ASAP.

As this is the critical question with literally millions of lives (to be saved by autonomous vehicles) at stake, I was hopeful that the NTSB report would conclusively provide an answer. It did not. It did however present a lot of very strong evidence.

You and I are effectively jurors deciding this case, the outcome of which will guide our thinking about autonomous vehicle development. The defendant is Uber ATG’s autonomous vehicle research. Were the management and engineering guilty of wrongdoing which caused this fatal outcome? From the news publicity, it doesn’t look good for Uber. Let’s review the case more carefully.

(Travis Kalanick is very happy that) the report says, "ATG’s decision to go from having two VOs in the vehicle to just one corresponded with the change in Uber CEOs." The acronym "VO", for "vehicle operator", pops up a lot and is central to the case. The facts are that ATG previously used two safety drivers (VOs) per car and had reduced that to one by the time of the fatal incident. This is bad. With two safety drivers I am confident that this crash would not have happened. But it is also understandable. Why not three VOs per car? At some point there are diminishing returns, and if they think the car is nearly ready to go with zero humans, using one could seem adequate. It’s not like Tesla’s autopilot requires a passenger to be present.

Next on the list of very damning evidence is the engineered distraction for the VO. The Vehicle Automation Report says, "…ATG equipped the vehicle with … a tablet … that affords interaction between the vehicle operator and the [Automated Driving System]." Basically ATG replaced the Volvo’s infotainment touchscreen system with something similar but specific to the mission. Requiring a VO to attend to a screen while a 4800 lb death machine runs amok in public is categorically a bad idea. We know this because Waymo uses a voice system to accomplish the same goals. However, almost all of the evidence led me to conclude that the system was actually no more distracting than the stock Volvo system. Note that I’m not absolving that! But it clearly wasn’t radically worse than what the public accepts in general.

I said "almost" all of the evidence suggested that the onboard computers were compatible with a safe outcome. Some of the most interesting evidence is from the investigation interview with the VO, who obviously has a lot to gain by establishing that VOs were put in an untenable situation by design.

Indeed, according to the interview, the VO felt like the incident issue tracking system itself had "issues" and "…believed it was because the linux [sic] system did not work well with the Apple device."

Let’s look at a passage from the interview report that focuses on this engineered distraction.

6g. She stated that prior to the crash, multiple errors had popped up and she had been looking at the error list — getting a running diagnostic.

6h. She stated that when a CTC alert error occurs, she must tag and label the event. If the ipad doesn’t go out of autonomy then she has to tag and label the event.

6i. Her latest training indicated that she may look at the ipad for 5 seconds and spend 3 seconds tagging and labeling (she wasn’t certain this was stated in written materials from ATG).

She wasn’t certain it was officially documented because that is madness! Anyway, she’s painting a picture of a distracting technical interface associated with the project. That would definitely be bad.

It gets worse. Section 6f says, "When asked if the vehicle usually identifies and responds to pedestrians, the VO stated that usually, the vehicle was overly sensitive to pedestrians. Sometimes the vehicle would swerve towards a bicycle, but it would react in some way." Well, that seems on topic! At least to me! I believe I was the first uninvolved observer to notice that it seemed like the car steered into the victim. This has now been conclusively confirmed. It certainly doesn’t seem good for Uber.

The interview report makes no mention — not even questions that linger — about the VO doing some distracted stuff on her phone. It in fact accepts for the record in 6k that "…she had placed her personal phone in her purse behind her prior to driving the vehicle. Her ATG phone (company provided phone) was on the front passenger seat next to the [official mission related] laptop." Phone records showed that there were no calls or texts on the VO’s personal phone while on duty.

The report concluded that the VO does not drink alcohol and police found no reason to suspect intoxication of any kind. (The NTSB’s preliminary report couldn’t help but point out that, "Toxicology test results for the pedestrian were positive for [stuff which is none of our damn business]." This is how it should be. Should this impaired person have been driving? No. But she paid for that good judgement with her life.)

Uber ATG seems to have set up a bad system designed to impair a sober driver’s ability to respond to problems. The fact that I could not find in the report a vigorous defense of these systems, nor could I find any of the logged event data from the operator’s interface, was not encouraging. At this point in the story, it’s looking bad for Uber.

However! Whodunit mystery stories can change direction quickly. Often the guilty party is actively trying to cast suspicion on a scapegoat.

I said that I did not find a vigorous defense by ATG, but I did find this in the report, "For the remainder of the crash trip, the operator did not interact with the [official ATG vehicle management] tablet, and the HMI [human machine interface] did not present any information that required the operator’s input." I’m presuming that the NTSB were provided with logs and data to support this pretty definite conclusion. If this is true, it certainly makes the driver video, where she is looking at something, much more suspicious. It does leave open the possibility that the system was producing optional messages that, while not "requiring the operator’s input", were distracting.

The Washington Post reported back in June 2018 that local police say the VO was watching "The Voice" on her phone at the time of the incident. I commented on that report at the time, but it felt unofficial. I was hoping that this NTSB report would have better details to make this evidence incontrovertible.

In the Human Performance Group Chairman’s Factual Report section 1.3, "According to the police report, … she picked up a gray bag and removed a cell phone with a black case. She exited the facility and parked in the adjoining lot, where she then focused most of her attention on the center console of the SDV, where an Uber ATG-installed tablet computer was mounted. At 9:17 p.m., the VO appeared to reach toward an item in the lower center console area, near her right knee, out of sight of the dashcam." They then go on to show photos of where they surmise an entertainment telephone may have been positioned.

Frustratingly, the tone of the NTSB report lacks certainty about where the VO’s attention was during the mission that ended in the crash. However, they do not contradict the June reports of video watching. In section 1.6, "Search warrants were prepared and sent to the three service providers. Data obtained from Hulu showed that the account had been continuously streaming video from 9:16 p.m. until 9:59 p.m. (the crash occurred at approximately 9:58 [9:58:46.5])."

And that’s where the facts mostly end.

- The vehicle operator was distracted and an undistracted operator would have prevented the fatality.
- Uber ATG says their systems, which presumably are accurately logged, were not doing anything requiring distraction.
- The VO says her personal distraction machine was safely in her purse, presumably butt-dialing Hulu to play The Voice.

I was unsatisfied with the plausibility, however remote it might seem to you, of two contradictory explanations. There is a lot at stake here. In the end, we have to make a decision about the most likely version of the truth.

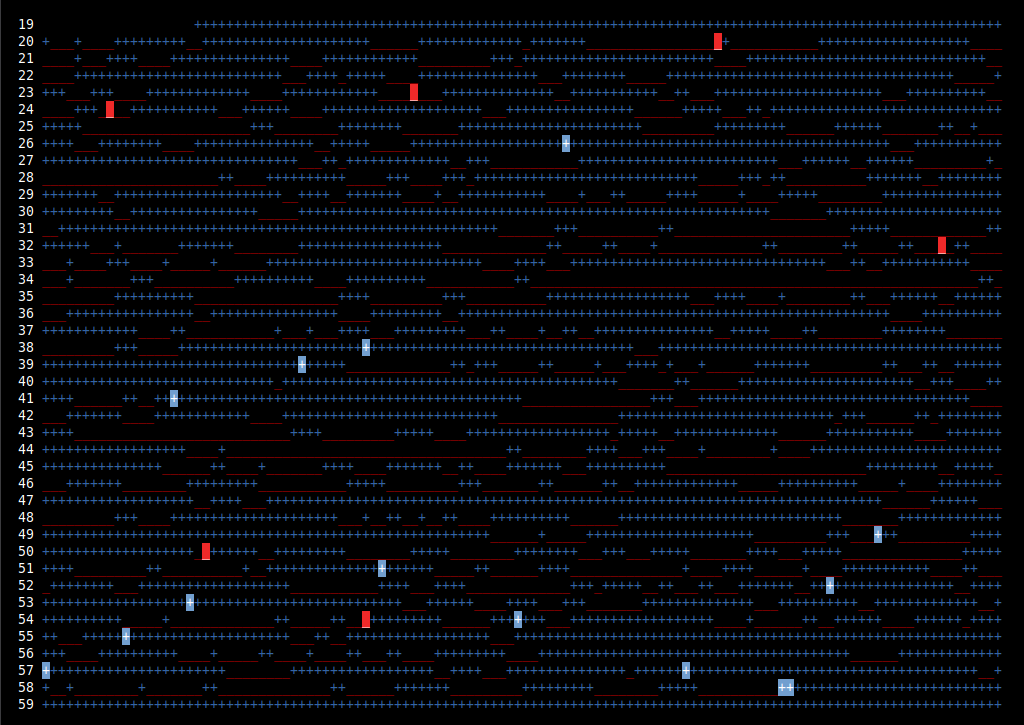

There is one more source of evidence that we can look at. The NTSB helpfully provided a data file of human-annotated labels of where the VO’s attention was during each half second for the 40 minutes prior to the crash. The plot of the VO’s attention in the report was muddled and unhelpful. I wanted to have a proper look at it, so I figured out how I could neatly visualize the state of each data point: no summarizing statistics, just the entire raw data as provided. As with many of life’s problems, the answer was ASCII art. In this plot an "_" means the VO was looking down at something in the car and a "+" means that they were looking up at the road properly. The number is the minute after 21h00 on 2018-03-18, and each of the 120 characters in a row represents one half second of that minute.
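For anyone who wants to try this kind of plot, here is a minimal Python sketch of the rendering idea. It is not the script I used and it does not read the NTSB file: the input format (a list of `(seconds after 21h00, looking_down)` pairs) and the names `render_attention` and `fraction_down` are assumptions made up for illustration, and the sample data at the bottom is invented.

```python
SLOTS_PER_MINUTE = 120  # one character per half second


def render_attention(samples):
    """Return one text row per minute: '_' = looking down, '+' = looking up.

    `samples` is an assumed format: (seconds_after_21h00, looking_down) pairs
    on a half-second grid. Half seconds with no label are left as spaces.
    """
    rows = {}
    for seconds, looking_down in samples:
        index = int(round(seconds * 2))  # half-second index since 21h00
        minute, slot = divmod(index, SLOTS_PER_MINUTE)
        row = rows.setdefault(minute, [" "] * SLOTS_PER_MINUTE)
        row[slot] = "_" if looking_down else "+"
    return [f"{minute:02d} {''.join(row)}" for minute, row in sorted(rows.items())]


def fraction_down(samples):
    """Share of labeled half seconds in which the operator was looking down."""
    samples = list(samples)
    if not samples:
        return 0.0
    return sum(1 for _, down in samples if down) / len(samples)


if __name__ == "__main__":
    # Invented data: minute 19, looking down for the first 10 s, then up.
    demo = [(19 * 60 + i * 0.5, i < 20) for i in range(SLOTS_PER_MINUTE)]
    for line in render_attention(demo):
        print(line)
    print(f"looking down {fraction_down(demo):.1%} of labeled time")
```

Keeping every half second as its own character is the whole point: nothing is averaged away, so long unbroken runs of "_" stay visible.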

We can see from these investigator annotations that the VO is horrendously distracted! The question we must all answer for ourselves is this: Does the character of this distraction suggest interaction with a technical system designed to log unusual mission events, or watching TV?

To make sure we integrate all knowledge possible, I have included highlights at the points where the Onboard Image & Data Recorder Group Chairman’s Factual Report makes additional specific text annotations about the video of the operator. Those annotations are the following.

| Time (mm:ss after 21:00) | Annotation |
|---|---|
| 20:42.0 | "VO drank from soda-like bottle." |
| 23:23.0 | "VO drank from soda-like bottle." |
| 24:04.0 | "VO smirked." |
| 26:32.5 | "VO turned head and smirked at another Uber Technologies vehicle while stopped at a stop sign." |
| 32:56.0 | "VO smirked." |
| 38:20.0 | "VO yawned." |
| 39:16.0 | "VO yawned." |
| 41:08.0 | "VO laughed." |
| 49:52.0 | "VO smirked." |
| 50:10.0 | "VO appeared to be singing." |
| 51:21.0 | "VO appeared to be singing." |
| 52:49.0 | "VO yawned." |
| 53:09.0 | "VO was singing in the manner of an outburst." |
| 54:20.0 | "VO nodded no and then yes." |
| 54:29.5 | "VO nodded no." |
| 55:05.0 | "VO yawned and then smirked." |
| 57:00.0 | "VO yawned." |
| 57:40.0 | "VO drank from a soda-like bottle." |
| 58:46.2 | "VO reacted abruptly while looking forward of the vehicle." |
| 58:46.5 | "Impact occurred." |

Everyone needs to make their own judgement, but my conclusion is that the vehicle operator was watching TV. In the report Uber ATG documents many commendable safety improvements, but solving the nonexistent problem of distracting the driver for 49.4% of the time is not one of them! I believe that is because it is a spurious problem. Uber’s claim that their logging system did not log any events or distract the driver prior to the crash seems more reliable than the implicit claim by the VO that the video streaming to her phone was not being watched and smirked at. (Interestingly, I used the exact word "smirk" last year in my review of the limited final 13 seconds of pre-crash driver footage released to the public.)

I don’t need this woman to be burned at the stake or even go to jail. What I need her to do, and that’s why I’m doing this, is to exonerate autonomous vehicle research efforts. This all too typical idiot driver was unlucky enough to explicitly kill a vulnerable road user. That of course bothers me, but I’m more concerned about the thousands she may yet kill, statistically, by retarding progress toward a world devoid of idiot drivers like her. She is, as I mentioned previously, a perfect advertisement for exactly why autonomous vehicle research is so important.

UPDATE 2019-11-17

Part 1 explains the fatality. It was caused the same way that most automotive fatalities are caused — by an idiot human driver. For a look inside the specific autonomous technology problems the Uber engineers are working on, see my part 2 which goes into some more detail about the technical challenges.

UPDATE 2019-12-12

If you’re interested in this kind of thing, the next step is to check out Brad Templeton’s very thoughtful article on self-driving vehicle ethics. Those of us who have studied the Uber crash know the cause was a negligent human driver. What are the broader questions society needs to answer about risks involved with ultimately lowering overall traffic risk? Brad, as usual, is very sensible on the topic.