In a strange but fascinating dog and pony show that seemed staged to take the edge off 2019Q1’s $700 million loss, Tesla gave a masterclass on how to do computer science in the style of Tesla. The video was several hours long and astonishingly technical. As someone in the business of autonomous vehicles, I found much of it compelling. It featured a complete computer vision lecture by super genius Andrej Karpathy, which reminded me of his Stanford computer science lectures.

Let’s remember what I said about Andrej Karpathy two years ago, back when he was just a lowly Stanford CS PhD student:

> Not only does Karpathy have the goods to do miracles with RNNs and then write lucidly about it, he provides source code to try it yourself. I must stop here and acknowledge that there are a lot of idiots in the world and this includes an astonishingly high percentage of computer programmers. I am typically pretty horrified with the code of other people, but I want to point out that I am capable of appreciating brilliant code when it makes its fleeting appearances. And Karpathy is a genius. Check out this brilliant program. Short, clear, and requiring no annoying hipster dependencies. Not only that, but even I got it working!

I hope that makes it clear that if Andrej Karpathy says something about my vocation, I listen carefully and take it seriously.

Elon Musk, on the other hand… Well, he’s definitely an interesting guy and a colorful character, and that seems to be what made the biggest impression. Specifically, Musk literally made headlines by openly mocking his competitors.

"Lidar is lame. Lidar is lame. Lame." "Frigging stupid, expensive hardware that is worthless on the car."

Musk is clearly a smart guy, and he has accomplished a lot that I approve of, but he is not infallible. Let me go on record right now, for example, and predict that of the flurry of projections Musk made in the video, not a single one will be completed on the stated schedule. People who keep an eye on Musk will know that this is not an especially bold prediction.

It is bold for a CEO to openly deride the technology of competitors. This is especially true when you’re on the frontier of technology doing primary research. You can have a hunch your competitors are wrong, but it wouldn’t be research if you were really sure. It’s also weird and risky to disparage people who are clearly very, very smart. Of course, I’m weird and risky and do exactly that all the time, so maybe Musk has a worthwhile point to make.

This is my blog, so before digging into the technical details, I want to share some personal background that shows how important this topic is to me. A few years before Andrej Karpathy was busy being born, I owned an early touchpad called the KoalaPad. With excruciating patience and dexterity, one could almost trace features in a photograph and thereby bring a tiny slice of the visual world into a computer.

This was in the days before digital photography, when digital images were lovingly crafted pixel by pixel by hand. A few years later, early in my career as an engineer, I had a 12x12 inch Calcomp tablet, which strangely still seems to be for sale exactly as I remember it from 25 years ago.

It was common to use these to digitize blueprints. I didn’t, because with a blueprint you already know the ground truth (that is what the document is trying to communicate), so I would routinely resynthesize blueprints numerically as proper, accurate CAD models. That made it clear to me what the tablet was really good for: artwork.

As a hobby, I was very interested in fine art sculpture at the time, and professionally I was writing 3d contouring software for CNC milling machines. Combining those things, I felt that carving figurative sculpture out of solid steel was an interesting new possibility. The problem, which seems backwards today, was how to get a fine enough computer model of the subject. I gave this problem a lot of thought. I remember using my Calcomp tablet to trace the profiles of an object in photographs taken from many different angles, which I could then reconstruct into a 3d model. This technique worked perfectly for convex shapes, such as a model sailboat keel bulb I machined. It fails, however, for concave shapes, such as eye sockets recessed into a face, because a recess surrounded by higher surfaces never shows up in any outline.
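The outline technique above is what computer vision now calls shape from silhouette, or a visual hull. Here is a toy 2d sketch of it, assuming just two orthographic "photos" taken along the grid axes (a real setup uses many perspective views, and even convex shapes generally need more than two). A cell survives only if it falls inside every silhouette, so a rectangle comes back exactly, but a notch cut into the top of a block, standing in for an eye socket, gets filled right back in. The function names are mine, purely for illustration.

```python
def parse(picture):
    """Turn rows of '#' (solid) and '.' (empty) into a boolean occupancy grid."""
    return [[ch == "#" for ch in row] for row in picture.split()]


def silhouettes(grid):
    """Orthographic 'photos' along each axis: is anything solid in this row/column?"""
    row_sil = [any(row) for row in grid]        # view looking along the rows
    col_sil = [any(col) for col in zip(*grid)]  # view looking down the columns
    return row_sil, col_sil


def visual_hull(row_sil, col_sil):
    """Keep every cell that projects inside both silhouettes; carve away the rest."""
    return [[r and c for c in col_sil] for r in row_sil]


def show(grid):
    return "\n".join("".join("#" if cell else "." for cell in row) for row in grid)


convex = parse(".....\n.###.\n.###.\n.....")
notched = parse("#.#\n###")  # the '.' is a concavity, like an eye socket

print(visual_hull(*silhouettes(convex)) == convex)  # True: rectangle recovered
print(show(visual_hull(*silhouettes(notched))))     # the notch is filled in
```

Adding more viewing directions only tightens the hull toward the convex outline; no silhouette ever reveals the notch, which is exactly the failure I ran into.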

With that background I hope you can appreciate the plausibility of me asking and answering the following question before the advent of consumer digital photography.

Given the information of a 2d image, is it theoretically possible to mathematically reconstruct a 3d model of the scene?

And the answer that I asserted (in 1993) was yes. Today I have been pretty much proven correct, but it is worth reviewing why that assertion was so unintuitive at the time. Back then, there was absolutely no conceivable technical path to making this transformation, so it was reasonable to believe that it might well be impossible. I based my answer on my knowledge of sculpture: if a human sculptor can look at a photograph and create a sculpture from it, then it cannot be impossible. I knew that we merely lacked the imagination to think of a technical mechanism.

Karpathy makes the exact same argument in the presentation when he points out that humans can drive using (predominantly) visual sensory information, so it cannot be impossible. Today, however, there are conceivable and even practical mechanisms. This miracle of our physiology, sculpting by sight, can now be recreated algorithmically using many techniques. I have been delighted to watch this technology mature: stereophotogrammetry, mocap, catadioptric imaging, structured light, structure from motion, CNN-based single image depth estimation, etc. Check out Tesla’s brilliant 3d reconstruction from 2d video in the presentation at 2h19m42s.

My hope is that my motivation for solving this problem decades ago offers a useful analogy for the important topic today. Ok, some talented humans can look at a photograph and then sculpt it, so what? Well, let’s ask what an alternative method would be. What if the sculptor had access to the live subject? One technique that suits less talented sculptors (like me) is to get out calipers and a tape measure, directly measure all of the important geometry, and make sure the sculpted work matches.

The brain of an autonomous car must basically "sculpt" a little diorama of the world around it and then use that toy model to play out different possibilities so that it can confidently choose the best outcome. If the car’s toy model is very inaccurate, the decisions it makes will likely be suboptimal. There are two competing theories about how that model should be created. The Tesla approach is to be like a virtuoso sculptor who can look at a scene and sculpt it perfectly. We know that sort of thing is possible, but note that even the most talented sculptors don’t ridicule direct measurement and often use it. The other approach, used by Waymo/Google and pretty much the entire field, is direct measurement (in addition to vision). Some object looks 10 meters away; can we drive 10 meters before hitting it? Tesla will eyeball it and decide. Other players prefer to extend their measuring sticks and explicitly prod and explore the intermediate space before driving into it.

That measuring stick is lidar.

Musk did allow that some exotic applications require lidar, for example his own rocket docking adventures. Here’s a photo of me installing a lidar sensor for old-fashioned dock docking (a photo which will give nightmares to anyone who has ever accidentally dropped and lost a small piece of hardware while assembling something).

As someone who professionally studies this sensor, I have some basic knowledge about lidar. Lidar is very much like a measuring stick: you extend it out, and when it hits something, you check how far the stick is extended, and that’s how far away the thing is. Or you could think of it like a blind person’s cane. How does a blind person know where to direct a single cane to navigate through a complex world? I have no idea, but it seems like a very sophisticated skill. Lidars avoid the need for that skill with a shotgun approach: they send out measurement probes in basically all directions, all the time. The details are obviously more nuanced, but that is a reasonable way to think of it.
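The measuring-stick picture boils down to time of flight: a laser pulse goes out, bounces back, and the range is half the round-trip time multiplied by the speed of light. Each return also comes with the direction the beam was pointing, which turns it into an explicit 3d coordinate. The sketch below assumes a simple convention (x forward, y left, z up; azimuth counterclockwise from x, elevation up from horizontal). Real sensors each define their own frames and apply calibration corrections, so treat this as illustrative only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def range_from_round_trip(seconds):
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * seconds / 2.0


def to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one lidar return (range plus beam direction) to x, y, z in meters.

    Assumed convention: x forward, y left, z up; azimuth counterclockwise from x,
    elevation measured up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# A wall 10 m away returns the pulse after roughly 66.7 nanoseconds.
round_trip = 2 * 10.0 / SPEED_OF_LIGHT
print(round(round_trip * 1e9, 1))                   # 66.7
print(round(range_from_round_trip(round_trip), 6))  # 10.0
print(to_xyz(10.0, 0.0, 0.0))                       # (10.0, 0.0, 0.0)
```

Sweep that over every beam direction many times per second and you get the point cloud below.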

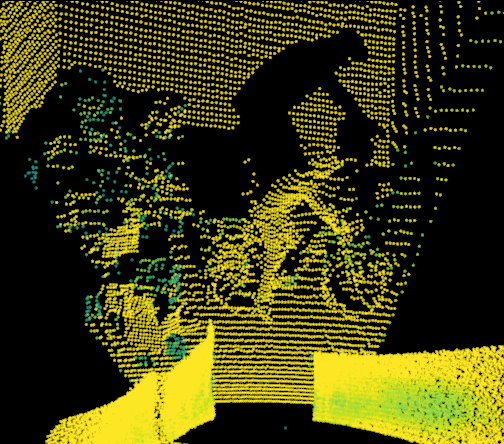

Here is a visualization of the 3d lidar data (the explicit coordinates found by the laser probes) of me sitting on my bike in a room outside my office.

The lidar is inside my office, whose walls appear at the bottom, and the interesting part of the scene is sensed through the open doorway. This image looks quite rough, but remember, this data is not about how things look. It is about knowing how things are: knowing with very high certainty what space is occupied by something that blocks lasers. What this means, and what I think is the most important point here, is this: if the lidar says something is present, then do not drive into it! There are some very minor exceptions, like puffs of exhaust (which, frankly, I’m fine with avoiding out of an abundance of caution), but generally, if the lidar says that something is in the way, you need to avoid hitting it!
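That rule is simple enough to write down. Here is a minimal sketch, assuming the points are already in the vehicle frame (x forward, y left, z up, in meters) and using made-up corridor dimensions. It answers the "can we drive 10 meters?" question by finding the nearest return inside the straight-ahead path. The function names and parameters are hypothetical; a real planner would also handle curved paths, vehicle motion, and noise such as those exhaust puffs.

```python
import math


def free_distance_ahead(points, half_width=1.0, z_min=0.2, z_max=2.0):
    """Distance to the nearest lidar return inside the straight-ahead corridor.

    points: iterable of (x, y, z) in meters, vehicle frame (x forward, y left, z up).
    half_width, z_min, z_max: hypothetical corridor bounds; z_min skips the road surface.
    Returns math.inf if nothing blocks the corridor.
    """
    blocking = [
        x for x, y, z in points
        if x > 0.0 and abs(y) <= half_width and z_min <= z <= z_max
    ]
    return min(blocking, default=math.inf)


def safe_to_advance(points, distance_m, margin_m=1.0, **corridor):
    """If the lidar says something is in the way, do not drive into it."""
    return distance_m + margin_m <= free_distance_ahead(points, **corridor)


scan = [
    (10.0, 0.3, 0.8),   # something directly ahead at 10 m
    (4.0, 3.5, 0.8),    # parked car off to the side, outside the corridor
    (2.0, 0.0, 0.05),   # road surface, below z_min
]
print(free_distance_ahead(scan))    # 10.0
print(safe_to_advance(scan, 8.0))   # True
print(safe_to_advance(scan, 10.0))  # False
```

Note that nothing here needs to know *what* the obstacle is; a measured return in the corridor is enough to stop.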

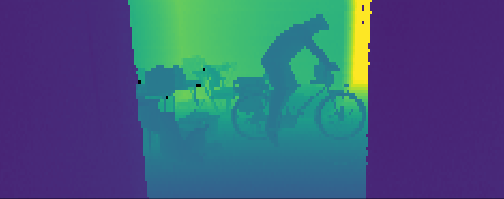

And just to give lidar a bit more credit, consider this image mathematically constructed from the lidar’s 3d data points.

That is very helpful for humans, who do not quite understand the world in explicit numerical coordinates. What’s remarkable about this image is that it encodes changes in depth, which are very likely to be accurate.
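I’m assuming here that a rendering like this marks places where the measured depth jumps between neighboring laser returns, since those jumps are exactly what a range measurement pins down well. A minimal pure-Python sketch of that idea on a tiny made-up range image (rows by columns of distances in meters), with a hypothetical 0.5 m threshold:

```python
def depth_edges(range_image, threshold_m=0.5):
    """Flag pixels where range jumps by more than threshold_m to a right or lower neighbor."""
    height, width = len(range_image), len(range_image[0])
    edges = [[False] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            for di, dj in ((0, 1), (1, 0)):  # compare with right and lower neighbors
                ni, nj = i + di, j + dj
                if ni < height and nj < width:
                    if abs(range_image[i][j] - range_image[ni][nj]) > threshold_m:
                        edges[i][j] = edges[ni][nj] = True  # mark both sides of the jump
    return edges


# Toy numbers: something 2 m away in front of a wall 5 m away.
ranges = [
    [5.0, 5.0, 2.0],
    [5.0, 5.0, 2.0],
]
for row in depth_edges(ranges):
    print("".join("|" if edge else "." for edge in row))
```

Because each value is a direct distance measurement rather than an inference from shading or texture, an edge found this way is an actual physical boundary.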

Let’s now consider the alternative to a sensor that can reach out and explicitly check whether something is in the way. You don’t hold your hands out in front of you when you walk through a doorway in case the door is closed (actually, I do this every night in the dark). Tesla assumes that the confidence we place in our eyes carries over to computer analysis of camera feeds. This may be completely feasible and within spec for driving, but let’s take a quick look at how computer vision can fail.

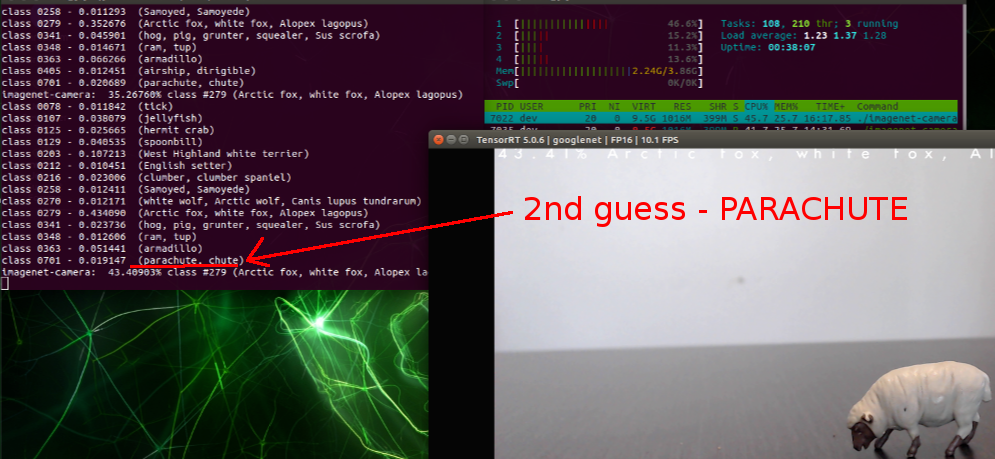

Here is the output of a well-known general-purpose classifier I was playing with yesterday while testing a Jetson Nano (as described on the Computer Vision Hustler’s excellent website).

The best guess was "white fox", which is pretty damn good given that "toy sheep" probably is not one of its known categories. But what struck me was that "parachute" was number two. All the other low-probability guesses were animals, so why a parachute? Then I finally saw it in my mind. I’ve mocked it up so you don’t have to use your warped computer vision imagination.

Of course, Tesla’s interesting vision claims include estimating depth values, not just classifying objects. What I’m trying to stress here is that neural networks do pretty well with the known unknowns, but sometimes they produce unknown unknowns that are quite surreal. Basically, computers have their own optical illusions, and in life-or-death situations this is bad. It’s not an insurmountable problem. Neural net image processing can be really focused and leave much less room for error than human vision processing. However, it is something to be aware of, and I think Tesla is.
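One reason a classifier produces guesses like these: its final softmax layer must spread 100% of its probability across the categories it was trained on, so an object outside that list (a toy sheep) still gets filed under something. A minimal sketch with a hypothetical label list and made-up logits, not the actual outputs from my Jetson test:

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that always sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(labels, logits, k=3):
    """Rank known categories by probability; there is no 'none of the above' slot."""
    ranked = sorted(zip(labels, softmax(logits)), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


labels = ["white fox", "parachute", "Samoyed", "white wolf"]  # hypothetical label set
logits = [3.0, 1.5, 1.2, 0.9]                                  # made-up scores
for label, prob in top_k(labels, logits):
    print(f"{label}: {prob:.2f}")
```

Whatever the input, the probabilities still sum to 1 over these labels, which is how a surreal runner-up like "parachute" can appear with real weight.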

I’m mostly giving you my personal reflections here, but if you want a proper analysis of the issues, my always sensible resource for this technology, Brad Templeton, has a perfectly good article discussing the subtle points of lidar applied to autonomous vehicles. And to demonstrate his qualifications, here’s an article on the topic from 2013 that is still quite useful.

Arguments Against Tesla’s Strategy

- The daily Earth eclipse blocks out all light from the sun for a dozen or so hours each day. Lidars don’t even notice the ambient light level, changing shadows, etc. Tesla needs neural nets trained in all kinds of lighting regimes, while lidar performance is more constant.

- For a research vehicle, not having every plausibly useful sensor is lame, lame, lame. Maybe your research shows that a sensor is redundant and you can drop it in the production version, but for research, if you’re not using a lidar on your autonomous vehicle in these early stages, then you’re doing it wrong. For production vehicles, things are different. Tesla is trying to straddle the line between the two.

- Tesla talks about robotaxis and, in the same talk, reminds us that average cars today sit idle 90% of the time. This means a car doing 24/7 taxi service will wear out in about 10% of the calendar time an average car lasts now. That makes the economics of lidar on a super heavy-duty 24/7 robotaxi more reasonable, since the sensor is working around the clock instead of sitting parked.

- Lidar is a perfect complementary technology for improving the quality of data, for example, perfect pixel-level segmentation. Andrej nails it at 2h20m00s talking about radar but fails to mention that lidar would do this even better. Don’t you think Google is using its good lidar data to train depth from vision too?

- The massive-data-source argument seems weak if they have to hand annotate (as mentioned around 2h00m50s, 2h06m00s, and 2h09m50s). Google’s captchas are crushing this if you want to talk about leveraging your "customers" to do your machine learning dirty work. Tesla does use machine learning to "source data from the fleet", but then (apparently) they must annotate it manually somehow. The behavioral cloning of steering wheel data, by contrast, seems like a good self-labeling resource.

- Musk disparages GPS and high-precision maps too (2h43m00s). This makes a bit more sense, but if you have a fleet, the fleet also solves that problem, so which approach you pick seems arbitrary to me. In other words, why wouldn’t you build super maps when you have 400k super-sensing robots out there going exactly where cars like to go?

- Fleet learning from the behavior of other drivers raises an interesting thought experiment: what happens as humans leave the system? If this vision-based inference system is taking subtle cues from the car ahead, and that car is doing the same thing, then what? Well, I guess the master argument still theoretically holds: humans manage it.

- At this early stage of the technology, redundant systems for cross-checking seem smart. Tesla calls lidar a "crutch". So be it. If your leg is weak, maybe you can walk on it, maybe not; a crutch isn’t the worst idea in the world. When you’re running around on clearly healthy, strong legs, then lose the crutches. We’re not there yet. I prefer to think of lidar not as a crutch but as a safety harness: if you’re working on the roof, you hope you won’t need it, but if you do, you’ll be glad you had it on. Tesla claims redundancy is achieved with multiple cameras (2h55m00s). (Then they quickly veer off topic into predictive planning instead of sensing.) That is questionable methodology, to say the least, because cameras tend to share the same blind spots, such as glare. It’s like telling your doctor that you’d like a second opinion and she says, "Ok, make an appointment with me tomorrow."
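The robotaxi arithmetic from the list above, spelled out under the simplifying assumption that wear depends only on hours of use: a car parked 90% of the time is driven about 876 of the 8,760 hours in a year, while a 24/7 robotaxi uses all of them, so it racks up the same hours in about a tenth of the calendar time. By the same ratio, each sensor on board gets about ten times as many working hours per year to earn back its cost.

```python
HOURS_PER_YEAR = 365 * 24  # 8760


def hours_in_use_per_year(duty_cycle):
    """Hours per year a vehicle is actually driving, given the fraction of time in use."""
    return HOURS_PER_YEAR * duty_cycle


def calendar_life_ratio(avg_duty_cycle, robotaxi_duty_cycle=1.0):
    """Robotaxi calendar life as a fraction of an average car's.

    Assumes wear depends only on hours of use (a simplification).
    """
    return avg_duty_cycle / robotaxi_duty_cycle


average_car = 0.10  # idle 90% of the time, per the Tesla talk
print(hours_in_use_per_year(average_car))  # about 876 hours
print(hours_in_use_per_year(1.0))          # 8760.0
print(calendar_life_ratio(average_car))    # 0.1
```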
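The second-opinion point is really about correlated failures. One toy way to see it is the beta-factor model from reliability engineering: each sensor fails with probability p, and a fraction β of those failures are common-cause failures that take out both channels at once. Two cameras fooled by the same glare plausibly have a high β; a camera paired with a lidar plausibly has a lower one. The numbers below are hypothetical, chosen only to show the shape of the effect, and the formula is the usual first-order approximation.

```python
def p_both_fail(p, beta):
    """Approximate chance that two redundant channels fail together (beta-factor model).

    p: failure probability of each channel on its own.
    beta: fraction of failures that are common-cause and hit both channels at once.
    """
    common_cause = beta * p
    independent = ((1.0 - beta) * p) ** 2  # both fail for unrelated reasons
    return common_cause + independent


p = 0.01  # hypothetical per-channel failure probability
for beta in (0.0, 0.1, 0.5, 1.0):
    print(f"beta={beta:.1f}: {p_both_fail(p, beta):.6f}")
```

With fully independent channels the pair fails a hundred times less often than one channel alone; with fully shared failure modes, redundancy buys nothing, which is the doctor giving her own second opinion.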

Arguments For Tesla’s Strategy

- Lidars are currently expensive, though they are getting cheaper and better very quickly. Musk didn’t do self-driving cars any favors by slowing this down slightly with bad publicity.

- Lidars that I have used have terrible form factors. With Velodyne units, the mounting arrangement is so bad and unsafe that it calls the entire product into question. For example, note my corrective design for the mounting in the photo.

- Andrej Karpathy believes lidar is unnecessary. That alone is pretty convincing for me. Karpathy says the following at 2h23m45s:

  "Lidar is really a shortcut. It sidesteps the fundamental problems, the important problem of visual recognition that is necessary for autonomy and so it gives a false sense of progress and is ultimately a crutch. (It does give really fast demos.)"

- Andrej says that roads are "designed for human visual consumption". I would quibble that they were really designed to move Roman ox carts; we just persist with that style. Anyone struggling to read a sign behind a tractor trailer knows that roads were designed primarily to move vehicles, with all other considerations secondary.

- Lidars see 360 degrees. That would seem to be an advantage, but it makes them awkward to fit on a car, causing mounting headaches and poor aerodynamics.

- Like the Tesla presentation, I haven’t said much about radar. As best I can tell, Tesla thinks radar provides some of the missing information that lidar provides. So it’s not really cameras versus lidar, but more like cameras plus radar versus cameras plus radar plus lidar. Radar is Tesla’s white cane. Does it do the job well enough? It’s possible, but I think it’s a close call at this point.

- It does seem that as the technology and its applications mature, some cars could comfortably get away with no lidar. It is hard to say whether we’re there yet; I am doubtful. Of course, next-generation cars will be able to integrate next-generation lidars, which will be smaller, cheaper, and better.

- Bespoke chips and optimizing everything for a fleet of a million cars sweeten the case for computer vision, which requires more processing on traditional architectures. Cutting the cruft, as Tesla has admirably done, gives us a glimpse of what is possible.

- Having personally designed systems to keep lidar happy in very harsh weather, I find it a bit harder to do than with cameras. But thinking back to the bulk and fragility of the first digital cameras, this won’t necessarily stay true. Lidars help my company’s adventurous endeavors by naturally melting ice, but this built-in heater (~20 W) isn’t always welcome. Still, it’s hard to keep up with how fast lidars are improving in all ways.

- Musk’s approach is a nice way to have customers pay for a prodigious test fleet. Nothing wrong with that. It generates more activity and buzz for self-driving cars, which is generally good. My hope is that it hastens acceptance of the entire concept of autonomous driving. Idiot drivers kill over 100 people per hour worldwide, so we’re in a hurry with this. It is an emergency.

In summary, I am completely aligned with Brad, who offers this opinion: "I predict that cameras will always be present, and that their role will increase over time, but the lidars will not go away for a long time." That seems very fair. A lot will depend on just how much lidars improve. I can easily imagine a small $50 device that can tell you with 99% certainty high-level things like the presence of a dog or motorcycle and their exact coordinates. And for that unknown 1%, there would be a 99.99% chance that it is something you really, really should not hit with a car. We’ll see.
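Taking those imagined figures at face value, the arithmetic favors a blunt policy of simply avoiding anything such a device can’t classify: per detected object, that policy would be wrong (swerving or braking for something harmless) only about once in a million. A quick check using only the hypothetical 99% and 99.99% numbers from the paragraph above:

```python
def unknown_object_rates(p_classified=0.99, p_hazard_if_unknown=0.9999):
    """Split detections the device can't classify into hazardous vs. harmless.

    Uses the hypothetical 99% / 99.99% figures; returns per-detection probabilities.
    """
    p_unknown = 1.0 - p_classified
    return {
        "unknown_and_hazardous": p_unknown * p_hazard_if_unknown,
        "unknown_and_harmless": p_unknown * (1.0 - p_hazard_if_unknown),
    }


rates = unknown_object_rates()
print(f"{rates['unknown_and_hazardous']:.6f}")  # 0.009999
print(f"{rates['unknown_and_harmless']:.1e}")   # 1.0e-06
```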

I was impressed with the Tesla presentation and strategy. It definitely seems like Musk has a fair amount of hubris, but I guess people who don’t have strong opinions are more comfortable killing each other in their status-quo cars. Tesla’s methodology is very clever and interesting but not necessarily correct. It might just work though. Time will tell.

I wish them the best of luck, of course. You can’t say Musk is sitting back doing nothing. He’s definitely got a great team energized to give it their best effort. Although I disagree with the following comment by Musk (only because consumers’ demands are so disappointing), it is righteous enough for me to overlook any Tesla-style faux pas (3h23m50s):

"This is not me prescribing a point of view about the world. This is me predicting what consumers will demand. Consumers will demand in the future that people are not allowed to drive these two ton death machines."

If you can make that happen, Elon, you are my savior.